
Two years ago, I published my first video about how much of a threat Chat Control is, and I said that we had to fight against it. I'm here today to tell you what has happened since then.

I'll go through how we got here, what the current agreed-upon legislation entails, and what will happen now. Let's start!

2021: The ePrivacy Derogation & PhotoDNA

This story begins in 2021.

The EU notices a significant increase in child sexual abuse material. In fact, just between 2020 and 2021, online sexual exploitation cases rose tenfold, and child sexual abuse videos rose by 40%.

Now, it's unclear whether this was due to an increase in the circulation of child sexual abuse material or to us getting better at identifying the existing traffic. Patrick Breyer, who was a Member of the European Parliament and is a vocal opponent of Chat Control, believes that the data does not indicate a real increase in child sexual abuse videos being trafficked.

Regardless, the European Union decided to quickly approve temporary legislation allowing chat, message, and email service providers to check messages for "illegal depictions of minors and attempted initiation of contacts with minors".

This temporary legislation was later incorporated into the ePrivacy Derogation, and it was set to expire on the 3rd of August 2024. Please note that the scanning it allows is voluntary, meaning that email and messaging providers can choose whether or not to opt in. Moreover, this type of scanning was not legal in the EU prior to this regulation.

Many services, such as Discord, Facebook, Messenger, and Gmail, began looking at how to implement this scanning.

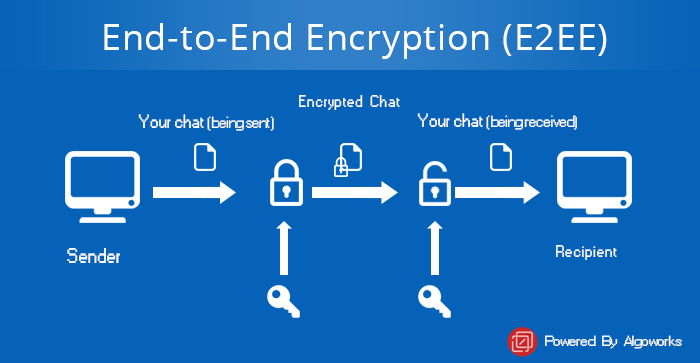

None of these services is end-to-end encrypted, which means that Discord, for example, can read all of your messages freely. This makes the job a bit easier, as they have access to the messages they're meant to check. Other applications, such as WhatsApp and Signal, are end-to-end encrypted: your messages stay encrypted all the way from your device to the device of the person you're texting. Therefore, these services don't have direct access to your messages and, unsurprisingly, none of them opted in to scanning user messages under this derogation.

Now, even if you do have access to your users' messages, how do you scan them for child sexual abuse material? For obvious reasons, sharing around a large database of child sexual abuse material to check the message against is quite impractical.

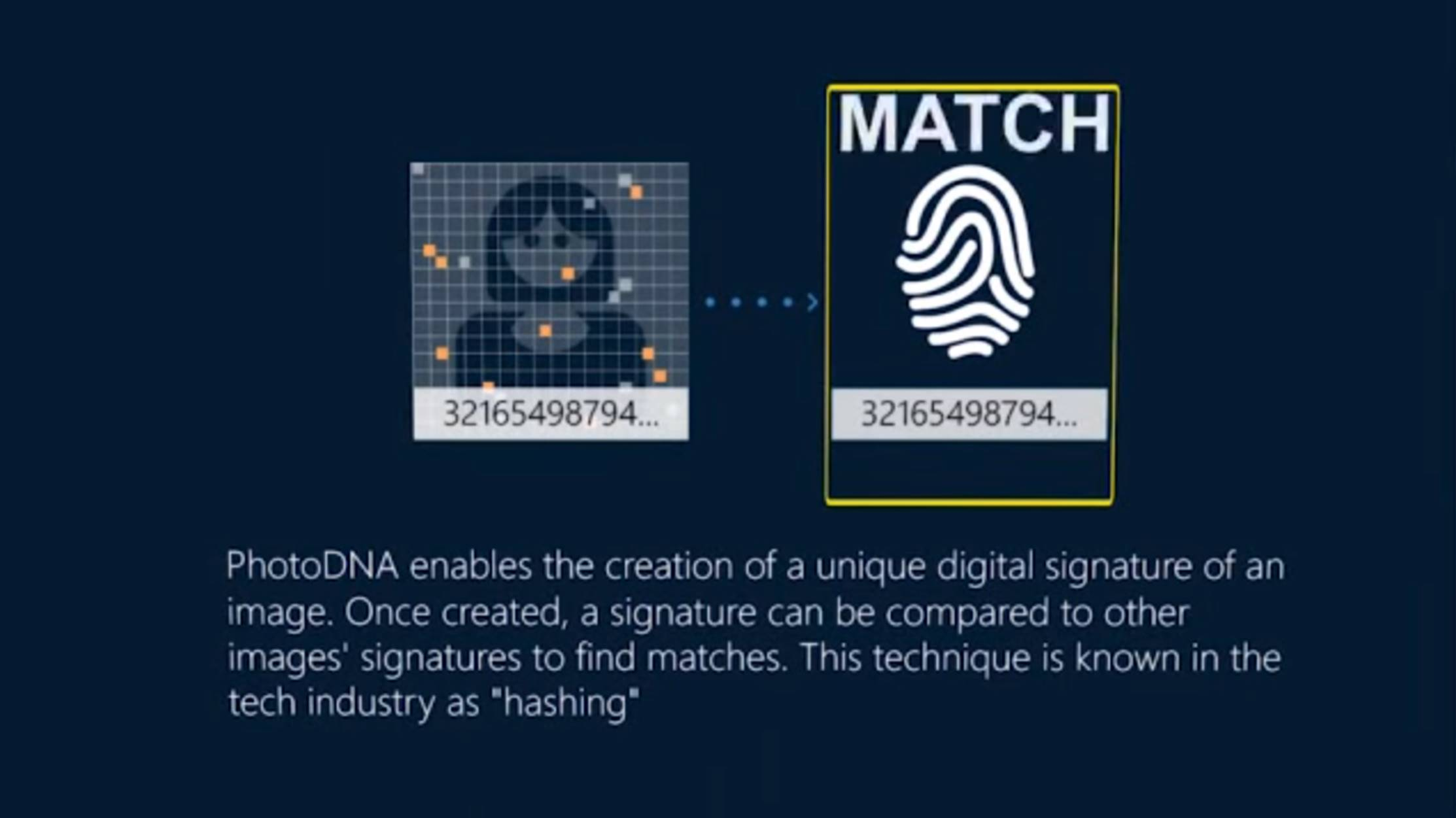

A good solution is the PhotoDNA tool by Microsoft. It's a simple tool (called a hashing function) that takes an image and transforms it into a string of text (called a hash).

Computing the hash is easy, whereas going back to the original image given only the hash is meant to be impossible. This way, every time a child sexual abuse image is found, only its hash is retained. When you want to check an image against the database, you simply compute its hash and check whether you've seen it before. And, yes, Discord does this for all images you send.
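PhotoDNA itself is proprietary, so the minimal sketch below swaps in SHA-256 from Python's standard library (a cryptographic hash, not a perceptual one) just to illustrate the workflow described above: only hashes are stored, and each new image is hashed and looked up. The `known_hashes` set and the placeholder byte strings standing in for images are hypothetical.

```python
import hashlib

def image_hash(image_bytes: bytes) -> str:
    """Stand-in for PhotoDNA: SHA-256, a cryptographic (NOT perceptual) hash."""
    return hashlib.sha256(image_bytes).hexdigest()

# The database keeps only hashes, never the images themselves (hypothetical content).
known_hashes = {image_hash(b"<bytes of a previously identified image>")}

def is_flagged(image_bytes: bytes) -> bool:
    """Hash the incoming image and check whether that hash has been seen before."""
    return image_hash(image_bytes) in known_hashes

print(is_flagged(b"<bytes of a previously identified image>"))  # True
print(is_flagged(b"<bytes of a holiday photo>"))                # False
```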

One important point is that these hashes are not unique: multiple images can have the same hash. This means that you can end up with false positives: safe images that have the same hash as a child sexual abuse image. This is called a collision.
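Collisions are unavoidable whenever there are more possible images than possible hashes. The sketch below makes this visible by keeping only the first 8 bits of SHA-256, a deliberately tiny and hypothetical hash with just 256 possible values: by the pigeonhole principle, hashing at most 257 distinct inputs must produce a collision. Real hashes are far longer, which makes accidental collisions much rarer, but not impossible.

```python
import hashlib

def tiny_hash(data: bytes, bits: int = 8) -> int:
    """Hypothetical short hash: the first `bits` bits of SHA-256.
    With only 2**bits possible outputs, collisions are guaranteed eventually."""
    digest = int.from_bytes(hashlib.sha256(data).digest(), "big")
    return digest >> (256 - bits)

def find_collision(bits: int = 8):
    """Hash distinct inputs until two of them share a hash (pigeonhole principle)."""
    seen = {}
    i = 0
    while True:
        data = f"image-{i}".encode()
        h = tiny_hash(data, bits)
        if h in seen:
            return seen[h], data, h
        seen[h] = data
        i += 1

a, b, h = find_collision()
print(a, b, h)  # two different inputs that share the same 8-bit hash
```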

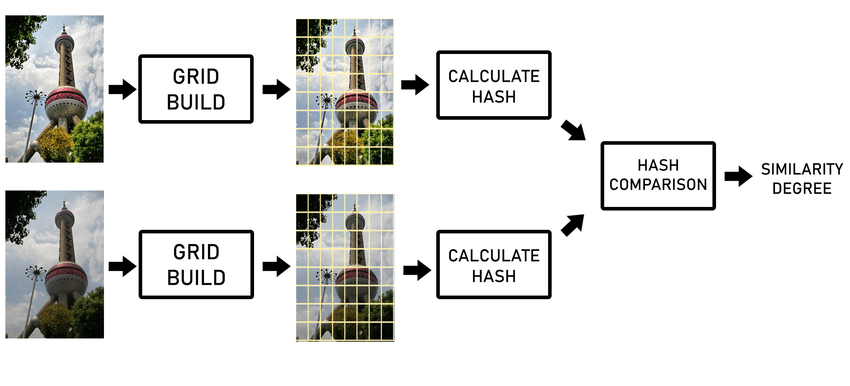

Another important point is that general-purpose hashing functions are usually designed to give very different results for similar images, both to make it harder to recover the original image from the hash and to make collisions rarer. However, this property would make PhotoDNA completely useless: changing just one pixel would be enough to get an entirely new hash.
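This "very different results for similar inputs" property is usually called the avalanche effect. As a sketch, assuming a made-up 8x8 grayscale image stored as 64 raw bytes, nudging a single pixel by one brightness level changes roughly half of SHA-256's 256 output bits:

```python
import hashlib

def differing_bits(a: bytes, b: bytes) -> int:
    """Count how many of the 256 SHA-256 output bits differ between two inputs."""
    x = int.from_bytes(hashlib.sha256(a).digest(), "big")
    y = int.from_bytes(hashlib.sha256(b).digest(), "big")
    return bin(x ^ y).count("1")

# Hypothetical 8x8 grayscale "image": 64 pixel values from 0 to 252.
image = bytes(range(0, 256, 4))
tweaked = bytearray(image)
tweaked[10] += 1  # brighten a single pixel by one level (40 -> 41)

print(differing_bits(image, bytes(tweaked)))  # typically around 128 of 256 bits
```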

Because of this, PhotoDNA uses what's called a "perceptual hash", which gives the same (or a similar) hash to similar images; as a consequence, it's also easier to end up with edge cases such as collisions, and it's easier for a malicious actor to generate innocuous images with the same hash as bad images.
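PhotoDNA is proprietary, so the sketch below uses a much simpler, well-known perceptual hash instead: the "average hash", which sets one bit per pixel depending on whether that pixel is brighter than the image's mean. It assumes the image has already been downscaled to a small grayscale grid (here a made-up 4x4 one). Perceptual hashes are typically compared by Hamming distance (the number of differing bits) rather than by exact equality.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Toy perceptual hash: one bit per pixel, set if the pixel is brighter
    than the mean. Assumes an already-downscaled grayscale grid."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    h = 0
    for p in flat:
        h = (h << 1) | (1 if p > mean else 0)
    return h

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

# Hypothetical 4x4 image: dark left half, bright right half.
image = [[10, 20, 200, 210],
         [15, 25, 205, 215],
         [12, 22, 202, 212],
         [18, 28, 208, 218]]
tweaked = [row[:] for row in image]
tweaked[0][0] += 5  # slightly brighten one pixel

print(hamming(average_hash(image), average_hash(tweaked)))  # 0: same hash
```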

This is where the problems start. Yes, I have yet to mention Chat Control once, and we've already got some serious privacy issues.

There is an open letter by hundreds of university teachers clearly stating that, even after twenty years of research, no "safe" perceptual hash function exists. Quite the opposite: all such functions that we know of today have significant drawbacks.

Firstly, it's always possible to make small adjustments to an image and end up with a completely different hash, meaning that bad actors can always go unnoticed if they put in the effort.

On top of that, and even worse, it's possible to create an image that looks completely normal and yet has the same hash as a child sexual abuse image, meaning that we can generate images that we know will turn out to be false positives.
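Both attacks are easy to reproduce against a toy average hash. This is a stand-in, not PhotoDNA or NeuralHash; attacks on those are more involved, but they exploit the same weakness. For evasion, darkening four pixels by 8 brightness levels (out of 255) yields a different hash. For a forced collision, a nearly uniform gray image, which looks nothing like the original, is built to have exactly the same hash. The images and helper names are hypothetical.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Toy perceptual hash: one bit per pixel, set if brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    h = 0
    for p in flat:
        h = (h << 1) | (1 if p > mean else 0)
    return h

def forge_collision(target_hash: int, width: int, height: int, base: int = 128):
    """Build a near-uniform gray image whose average hash equals target_hash
    (for any hash the toy function can actually produce): pixels for 1-bits
    sit just above the base gray, pixels for 0-bits just below it."""
    n = width * height
    bits = [(target_hash >> (n - 1 - i)) & 1 for i in range(n)]
    flat = [base + 1 if bit else base - 1 for bit in bits]
    return [flat[r * width:(r + 1) * width] for r in range(height)]

image = [[100, 110, 120, 130] for _ in range(4)]      # hypothetical original

# Evasion: small edit, different hash.
evasive = [[100, 110, 112, 130] for _ in range(4)]    # four pixels darkened by 8
print(average_hash(image) != average_hash(evasive))   # True

# Forced collision: unrelated-looking image, identical hash.
forged = forge_collision(average_hash(image), 4, 4)
print(average_hash(forged) == average_hash(image))    # True
```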

This isn't great news, as false positives aren't always handled gracefully. As an example, a man was banned from his Google account for sending nude pictures of his son to the doctor: apparently, nobody double-checked the context of such images. He only found out when the police showed up at his door.

Both of these attacks have been achieved successfully against PhotoDNA (and Apple's NeuralHash, which is a different implementation of the same idea). But fear not!

There's a second solution that's becoming more common: detection of child sexual abuse material through AI. The drawbacks here are fairly obvious: in order to be accurate and to consider the context of the image, you'll need a somewhat powerful model, which would be tasked with analyzing every single message sent. That's impossible!

Therefore, we have to fall back to much smaller, less reliable models, which will raise false positives more frequently. Again, consider the number of messages being sent every day: since actual abuse material is a tiny fraction of all messages, even a small chance of any single message being a false positive means that most flagged messages will be false positives.
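This is the base-rate effect, and a few lines of arithmetic show it. All the numbers below are made-up assumptions chosen only for illustration: 10 billion messages a day, one in a million being abusive, and a detector that catches 90% of those while wrongly flagging 0.1% of innocent messages.

```python
def share_of_flags_that_are_false(messages_per_day: float,
                                  prevalence: float,
                                  false_positive_rate: float,
                                  detection_rate: float) -> float:
    """Fraction of flagged messages that are actually innocent."""
    abusive = messages_per_day * prevalence
    innocent = messages_per_day - abusive
    true_flags = abusive * detection_rate          # real abuse caught
    false_flags = innocent * false_positive_rate   # innocent messages flagged
    return false_flags / (true_flags + false_flags)

# Hypothetical inputs, not measured figures.
share = share_of_flags_that_are_false(10e9, 1e-6, 1e-3, 0.9)
print(f"{share:.1%}")  # 99.9%
```

Under these assumptions, roughly ten million innocent messages would be flagged every day against nine thousand real hits, even though the detector looks "99.9% accurate" on innocent messages.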

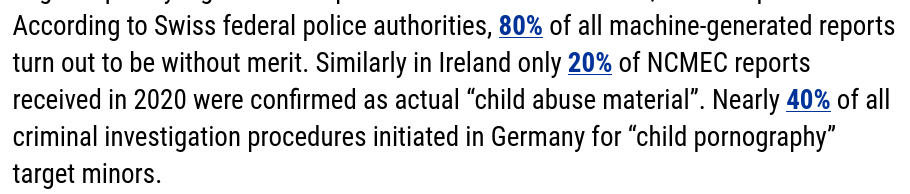

As an example, in Ireland, only 20.3% of all reports received by the Irish police forces (after the internal human checks from companies) were found to be actual exploitation material. The Swiss Federal Police also claims that 80% of the reports they receive (usually based on hashing) are "criminally irrelevant".

And remember that, whenever a false positive is found, the police forces receive your message and as much information about you as possible, to identify you!

I'm not claiming here that these efforts are entirely useless and should be dismantled, but it's important to point out that even the ePrivacy Derogation we currently live under has been quite ineffective at achieving its goal.

2022: Commission's take on Chat Control

In 2022, the European Commission decided that it wanted to extend this regulation beyond its original scope and make it mandatory. In order to follow the steps that eventually led to Chat Control being agreed upon, I need to give you some quick context on how the EU works.

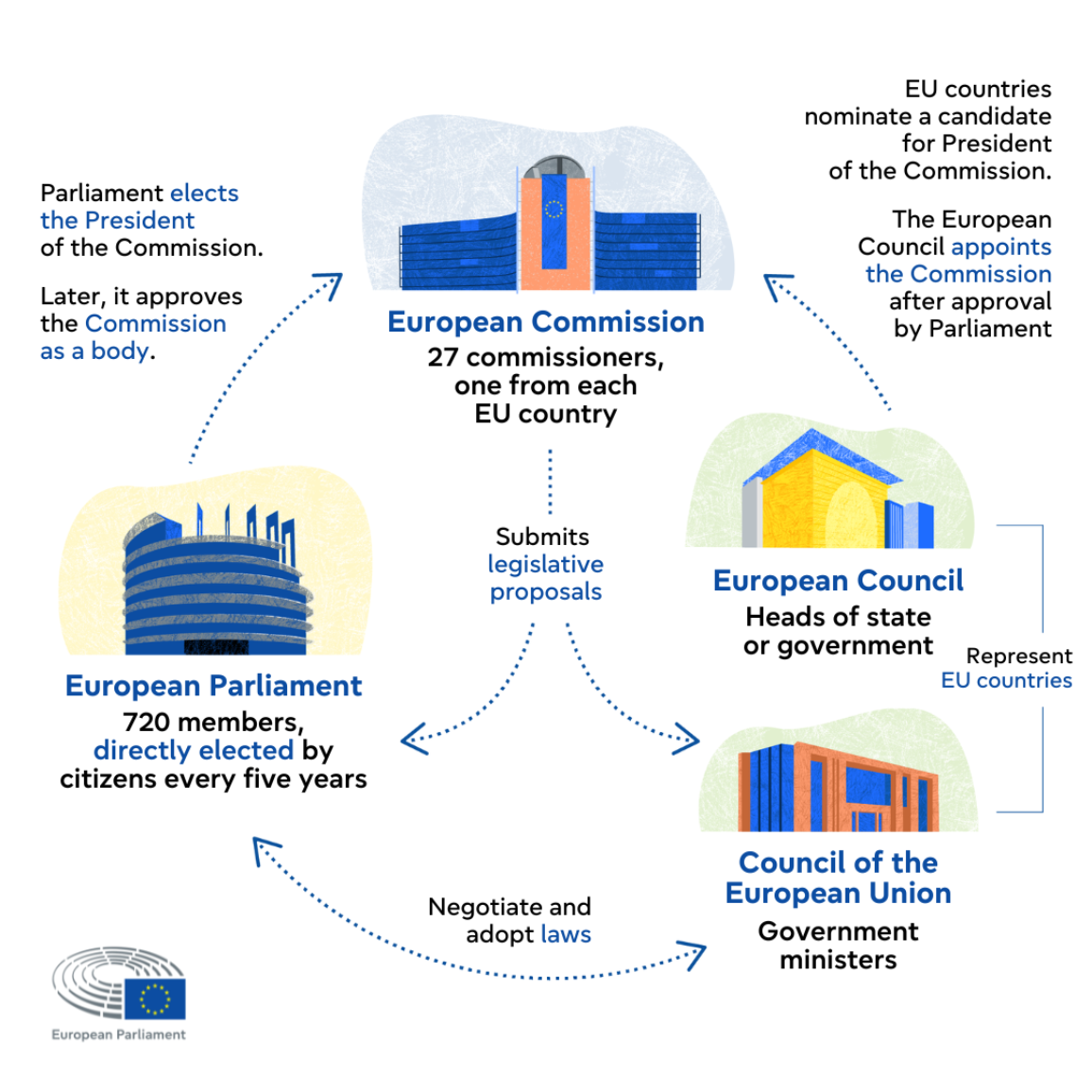

To keep things as simple as possible, think of it this way: the EU develops three versions of the same legislation in parallel, one in each of three institutions (the Council, the Parliament, and the Commission).

The Council (formally, the Council of the European Union) represents the governments of the member states and is composed of their national ministers. The Parliament is akin to national parliaments, and it's directly elected by us citizens during the European elections. The Commission is the executive branch of the EU, akin to a national government, and it's composed of 27 commissioners, including the President of the European Commission, Ursula von der Leyen!

When each of these institutions has agreed upon its version of the text, we get a "trilogue": they negotiate with each other to settle on the final wording of the text.

In May of 2022, the European Commission shared its draft of Chat Control. This was the starting point for the legislation, and it's one that sparked much controversy. As we head for the trilogue, this is still the text that the Commission uses as a reference; therefore, we should be aware of what it proposes.

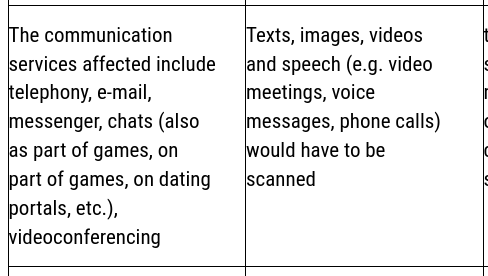

Firstly, all communication (telephony, e-mail, messenger, chats, and videoconferencing) now has to be checked for child sexual abuse material. This is similar to the ePrivacy Derogation, but it would be mandatory and permanent.

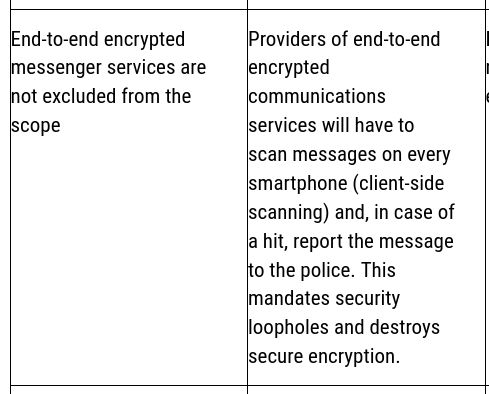

Even worse, it would be mandatory for end-to-end encrypted messages as well. As I mentioned previously, no end-to-end encrypted messaging platform has implemented the optional ePrivacy Derogation; how is the Commission expecting them to do so now?

The idea is: instead of checking messages when they reach the servers, we check them directly on your device, before they get encrypted. This way, everybody is happy: you send the message, it gets checked for child porn directly on your device and, if nothing is found, it gets encrypted end-to-end, and you keep your privacy.

If, however, you are sending child sexual abuse material, then your message gets sent to the server without encryption, so that it can be used as evidence against you when, e.g., WhatsApp contacts the police.
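The flow just described can be sketched as follows. This is a hypothetical illustration, not any real client's code: SHA-256 stands in for whatever hash or classifier a provider would ship, and `encrypt_end_to_end` is a placeholder that does not actually encrypt anything. The important part is the ordering: the scan runs before encryption, and a match means the content leaves the device readable.

```python
import hashlib
from dataclasses import dataclass

@dataclass
class Outgoing:
    encrypted: bool
    payload: bytes

def fingerprint(attachment: bytes) -> str:
    # Stand-in for the provider's hash or classifier (assumption).
    return hashlib.sha256(attachment).hexdigest()

def encrypt_end_to_end(attachment: bytes) -> bytes:
    # Placeholder only, NOT real encryption: a real client would use its E2EE protocol here.
    return b"<ciphertext of " + str(len(attachment)).encode() + b" bytes>"

def send(attachment: bytes, blocklist: set[str]) -> Outgoing:
    """Client-side scanning: check on the device *before* encrypting."""
    if fingerprint(attachment) in blocklist:
        # Match: the content is sent readable, to be used as evidence.
        return Outgoing(encrypted=False, payload=attachment)
    return Outgoing(encrypted=True, payload=encrypt_end_to_end(attachment))

blocklist = {fingerprint(b"<previously identified image>")}
clean = send(b"<holiday photo>", blocklist)
hit = send(b"<previously identified image>", blocklist)
print(clean.encrypted, hit.encrypted)  # True False
```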

However, not everybody is happy. This raises countless issues.

Firstly, as I mentioned previously, both hashing and AI checks can be bypassed and, even worse, a bad actor can construct a false positive that will get your message sent without encryption and potentially get you in trouble as well.

Secondly, both of these checks will cause strain on your device: when using AI, you'll have to be running the model locally on your device, and it has to run for every message you send.

But even hash checking will cause issues: if you want to do the check entirely on the device, then you'll have to download the hashes of all known child sexual abuse images. I fear that this download would quickly become large as the database grows.
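To get a feel for the download size, here is some back-of-the-envelope arithmetic. Both inputs are assumptions: 144 bytes per hash (the figure commonly cited for a PhotoDNA hash) and hypothetical database sizes of one, five, and ten million entries, stored uncompressed.

```python
def download_size_mb(num_hashes: int, bytes_per_hash: int = 144) -> float:
    """Size of an uncompressed on-device hash list, in megabytes (10**6 bytes).
    bytes_per_hash=144 is an assumption, not a confirmed specification."""
    return num_hashes * bytes_per_hash / 1_000_000

for n in (1_000_000, 5_000_000, 10_000_000):  # hypothetical database sizes
    print(f"{n:>11,} hashes -> {download_size_mb(n):,.0f} MB")
```

Under these assumptions the list grows linearly, from about 144 MB to about 1.4 GB, and every update has to reach every device.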

You could make the hash database available on the internet, but that also feels like a great security risk to me. Suppose that a malign actor manages to redirect your request for the hash database to another hash database that's under his control. He'd then be able to choose which text and images would be recognized as child porn, and he could instead insert, say, a cat picture. And, if you send that cat picture, your phone will think it's child porn, and it will send it unencrypted, which will make it visible for the malign actor. This effectively breaks end-to-end encryption!

The same tactic can be employed by whoever owns the hashing database. There's no way to know what content is in the database after it has been added: given a hash, I can't know what image generated it. This means that if a malign actor manages to put an image or text there, any message containing that image or text will suddenly be sent unencrypted.
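A minimal sketch of why controlling the hash list is enough to read chosen content, assuming the same kind of client-side check as described earlier (SHA-256 as a stand-in hash; all names and byte strings are hypothetical). Nothing in the client changes; only the list does, and the client has no way to tell what a new entry corresponds to.

```python
import hashlib

def fingerprint(data: bytes) -> str:
    # Stand-in hash (assumption): the point holds for any hash the client trusts blindly.
    return hashlib.sha256(data).hexdigest()

def leaves_device_unencrypted(data: bytes, blocklist: set[str]) -> bool:
    """Client-side scan: anything matching the list is sent in the clear."""
    return fingerprint(data) in blocklist

official_list = {fingerprint(b"<previously identified abuse image>")}
cat_picture = b"<bytes of a cat picture>"
print(leaves_device_unencrypted(cat_picture, official_list))  # False

# Whoever controls (or spoofs) the list can silently add content they want to read.
tampered_list = official_list | {fingerprint(cat_picture)}
print(leaves_device_unencrypted(cat_picture, tampered_list))  # True

# To the client, both entries are just opaque 64-character hex strings.
print(len(tampered_list))  # 2
```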

And please note that I don't think I'm being too negative in assuming there will be malicious actors, even within the team tasked with maintaining the hash database. Many EU governments are taking some worryingly authoritarian stances, and we should not trust them to maintain end-to-end encryption if they are given the chance to break it.

Do you remember the open letter by university teachers I mentioned earlier? Here's what they have to say about scanning messages on your device:

As scientists, we do not expect that it will be feasible in the next 10 to 20 years to develop a solution that can run on users' devices without leaking illegal information and that can detect known content in a reliable way.

The Commission's proposal contains even stronger requirements: these checks should also look for unknown child sexual abuse material, that is, images or videos that aren't in the database. This means that the use of AI or ML tools to detect child sexual abuse material (from text to images) would be mandatory, not optional.

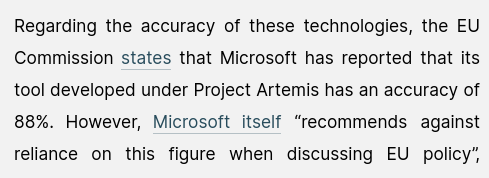

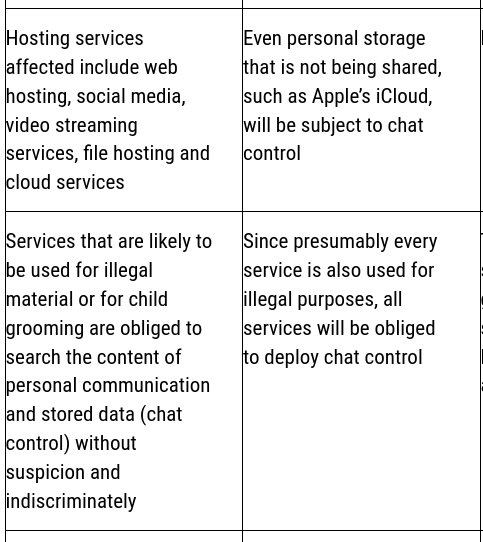

Microsoft has developed such a tool and claims an 88% accuracy, meaning that out of one hundred conversations flagged as grooming, 12 are false positives. Even so, Microsoft itself recommends against relying on this figure when discussing EU policy.

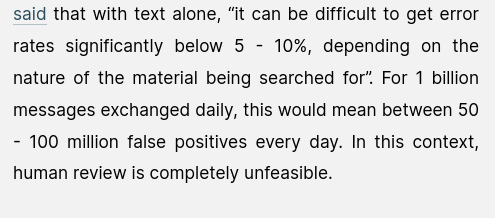

Researchers expect about 50 to 100 million false positives every day when using these tools, which would make human review completely impossible!

On top of that, all of these checks would have to apply to hosting services, including web hosting, social media, video streaming services, file hosting, and cloud services. This includes, e.g., Google Drive and Google Photos, which already implement these tools. However, it also includes, as an example, all Nextcloud instances. And this applies to all hosted files, even if they are not shared with others.

It's not over: all communication services "that can be used for child grooming" (that is, all of them) must verify the age of their users. Similar to how some countries (cough cough, Italy!) are rolling out mandatory ID checks for pornography, the Commission expects similar ID checks to access or download any messaging app.

This has obvious privacy drawbacks: for example, you'd have to verify your ID even to sign up for Signal. Whistleblowers, human rights defenders, and marginalized groups would then find it harder to get the full anonymity that they need.

If you are under 16 years of age, the Commission proposal is that you should not have access to any messaging application. This includes games with chats. This particular aspect is certainly debatable, though I feel like excluding all children under 16 from any messaging and social network apps is overly paternalistic and authoritarian for my tastes.

The Commission proposal was not met with great excitement. A representative reaction is the one from Signal, one of the most important privacy-oriented messaging apps.

Their reaction was simple: if this proposal is accepted, Signal will leave the EU and cease operations there, as there's no way they'd scan all user messages and effectively break end-to-end encryption.

2023: Parliament's take on Chat Control

In November 2023, the European Parliament adopted a negotiating mandate for the draft law. This is how they develop their own text, which will then be discussed during the trilogue negotiations (which, by the way, happen behind closed doors).

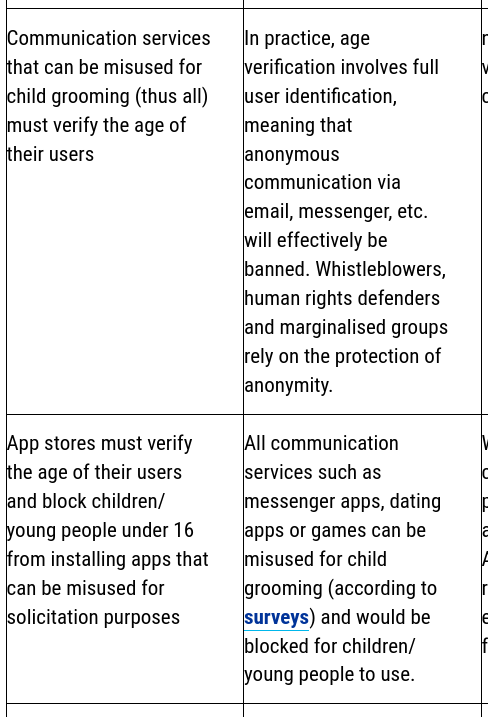

This was the result of a year of work by the LIBE committee (the Committee on Civil Liberties, Justice and Home Affairs) and the IMCO committee (the Committee on the Internal Market and Consumer Protection). These committees met many times in order to develop the draft that was eventually voted upon and accepted.

Firstly, the Parliament's draft mandate excludes end-to-end encrypted messaging applications from the scope of the legislation. This would ensure that applications like WhatsApp and Signal are safe and that their users' privacy is preserved.

The draft also removes the scanning of phone calls and text messages; therefore, only e-mail, messenger, chat, and videoconferencing services are included.

Instead of scanning being mandatory for all users, the Parliament asked to only scan specific individuals and groups reasonably suspected of being linked to child sexual abuse material.

The Parliament's mandate does not include any mandatory age verification for users of messaging apps, nor does it mandate the use of ML/AI tools to stop grooming. App stores, instead of asking for an ID, should make "a reasonable effort to ensure parental consent" for users below 16.

Overall, this is a much more agreeable proposal, and it can be seen as an indefinite extension of the ePrivacy Derogation. It means living with PhotoDNA-like checks, but without making them mandatory, without breaking end-to-end encryption, and without age verification.

This sounds like a dream; however, Patrick Breyer warns that:

Beware: This is only the Parliament’s negotiating mandate, which usually only partially prevails [in the trilogue negotiations].

Nonetheless, I feel it's important to remind everyone that, as flawed as it might be, the European Union is a pretty great achievement that has produced many pieces of legislation that directly benefit the Open Source world. I find it particularly heartwarming that the body that's directly elected by the citizens, the Parliament, was clearly against most of Chat Control's Commission proposal.

Thus, at the end of 2023, we're left with a dystopian proposal from the Commission and a reasonable proposal from the Parliament. The Council is the only missing body, and it's quite clear that its draft might be decisive.

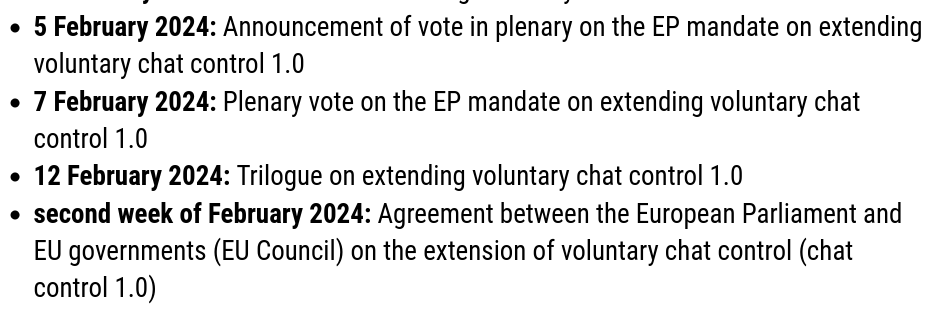

2024: back to the ePrivacy Derogation

Heading into 2024, the EU noticed that the ePrivacy Derogation was going to end in August. We risked having it expire while Chat Control was still being discussed, creating some sort of gray area. Therefore, the EU quickly decided to extend it until April 2026. Please keep this in mind, as it will be relevant later!

2025: Council's take on Chat Control

It's important to know that the presidency of the Council (which chairs Council meetings, determines its agendas, and sets a work program) rotates among member states every six months.

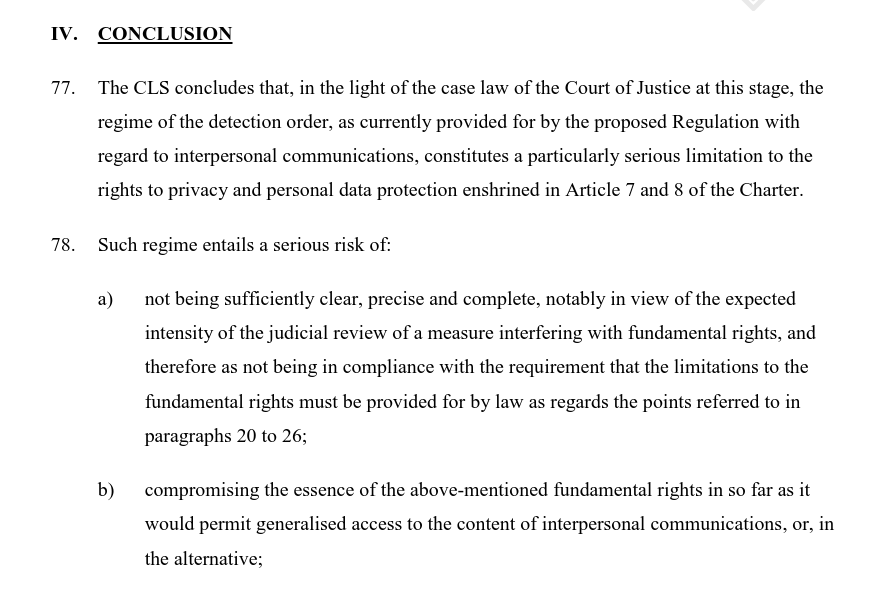

The Council also has a Legal Service, which reviewed the Commission proposal and found many problems with it. Its opinion is quite hard to read, but it states that the proposal compromises the essence of the rights to privacy and personal data protection, and that it requires too much data to be processed indiscriminately for all persons using a given service, regardless of whether they are suspected of abuse.

Nonetheless, successive Council presidencies tried to propose drafts that were quite similar to the Commission's; all of them were shot down. By the end of 2024, it was clear that there were two blocs of countries fighting over the legislation.

On one side, we have countries such as Ireland and Spain, which support the mandatory scanning for all messages, including end-to-end encrypted ones. On the other side, we have countries like Germany and the Netherlands, which oppose such tools.

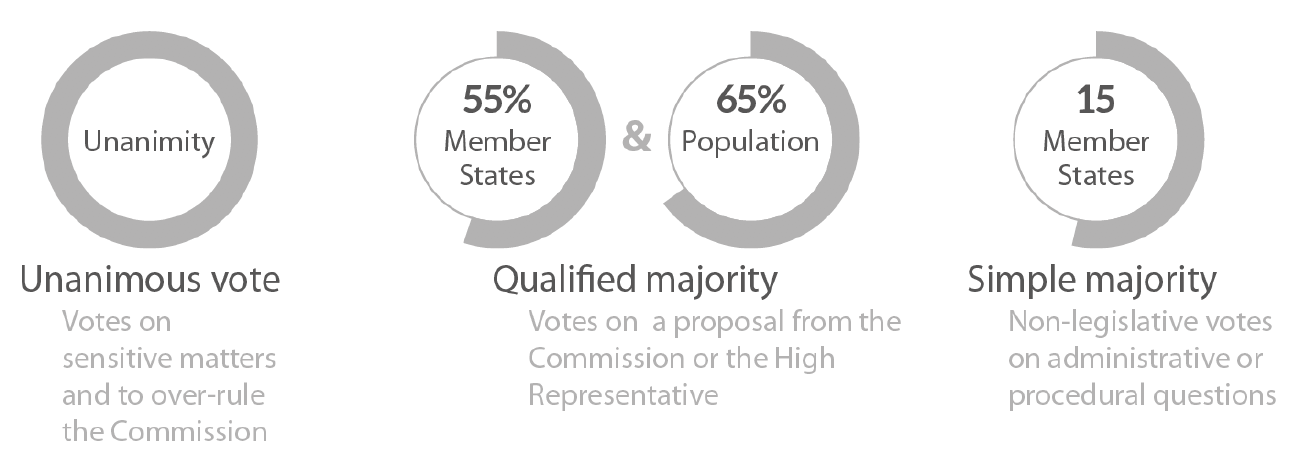

So far, the countries against it or undecided have managed to form a "blocking minority". What's that? Well, it's time for another "how the EU works" pill of knowledge: for legislation such as Chat Control to pass in the Council, it needs the support of at least 15 out of 27 countries, representing at least 65% of the population of the EU. This is called a "qualified majority".

Effectively, this means that even a minority of countries can block a law, as long as there are at least four of them and they represent more than 35% of the EU population. That's called a blocking minority.
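The voting rule can be written as a small function. The 15-of-27 and 65% thresholds come from the text above; the four-state minimum for a blocking minority comes from Article 16(4) of the Treaty on European Union. Since only votes in favour count towards the thresholds, the sketch treats abstentions like votes against. It is a simplification that assumes all 27 member states take part in the vote.

```python
import math

def council_adopts(states_in_favour: int, population_share_in_favour: float,
                   total_states: int = 27) -> bool:
    """Qualified majority in the Council on a Commission proposal:
    at least 55% of member states (and at least 15) representing at least
    65% of the EU population. A blocking minority needs at least four states;
    with fewer, the majority is deemed reached even if the population
    threshold is missed. Abstentions count the same as votes against."""
    enough_states = states_in_favour >= max(15, math.ceil(0.55 * total_states))
    enough_population = population_share_in_favour >= 0.65
    states_not_in_favour = total_states - states_in_favour
    return enough_states and (enough_population or states_not_in_favour < 4)

print(council_adopts(7, 0.4243))       # False: far too few states in favour
print(council_adopts(20, 1 - 0.4243))  # False: the other 7 states block it
```

For example, a text backed by only 7 states representing 42.43% of the population fails; but a stricter text backed by the remaining 20 states (57.57%) would fail too, because those 7 states form a blocking minority.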

In January 2025, Poland took over the Council presidency and decided to solve this Chat Control issue once and for all. Therefore, they drafted a compromise text that would remove the most controversial aspects of the legislation.

Namely, Poland's proposal would make message scanning optional instead of mandatory, and it explicitly protects end-to-end encryption. It's nonetheless not a perfect proposal: there's a review clause that might make detection mandatory three years after the law is passed, and companies are required to work on better scanning technologies.

Indeed, this seems to be a compromise: though the worrying parts of Chat Control are removed, the proposal hints at them being brought back in a few years.

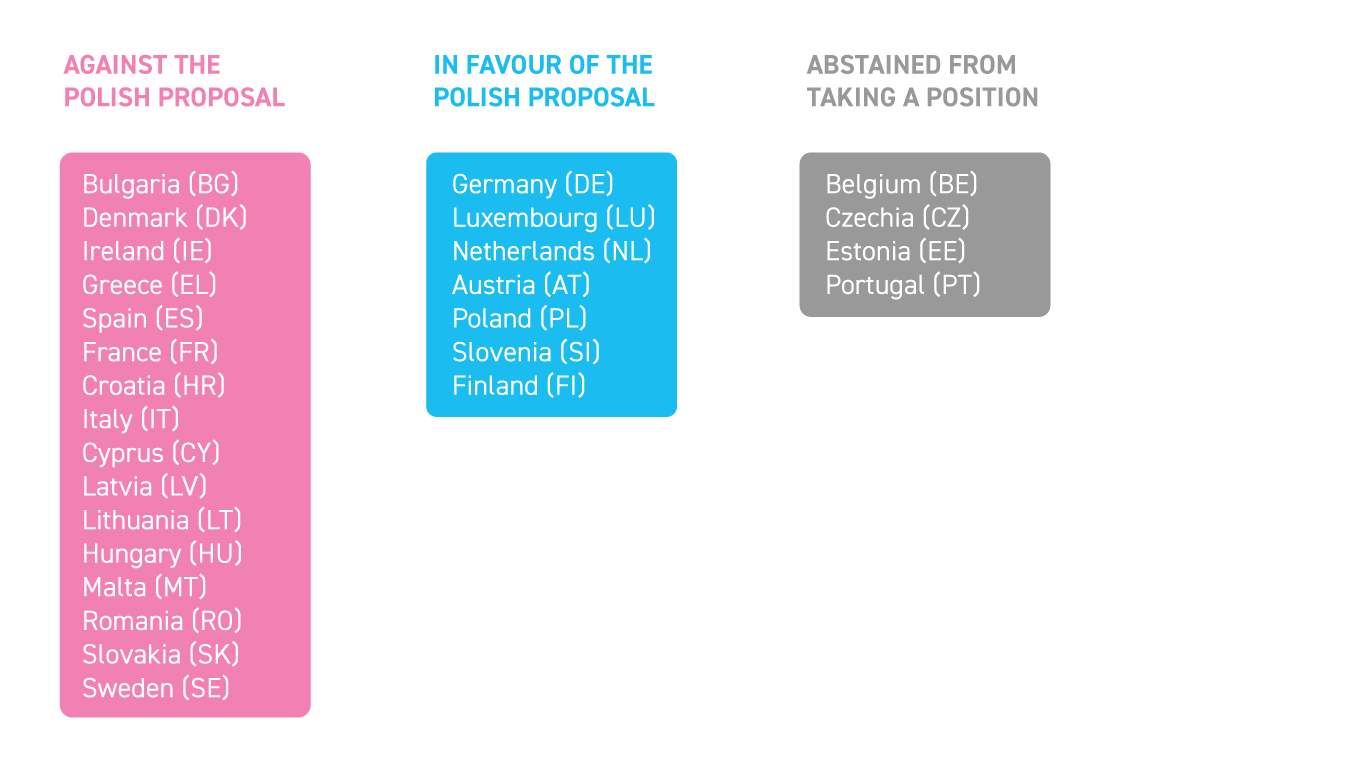

Nonetheless, the vote on the Polish proposal allowed us to see the above-mentioned blocs of countries more clearly. Namely, the only votes in favor of this proposal were from Germany, Luxembourg, the Netherlands, Austria, Poland (duh), Slovenia, and Finland. The votes against were 16, including Ireland, Greece, Spain, Italy, and more. Obviously, no qualified majority was reached.

However, the countries that voted in favor of the Polish proposal represent 42.43% of the population, meaning that they are indeed a blocking minority: Chat Control could not move forward unless either Germany or the Netherlands had a change of mind.

Then, in July 2025, Denmark took over the presidency and decided it was time to really, really solve this Chat Control issue once and for all. They even said it was a priority of their presidency and very quickly pushed out a compromise text.

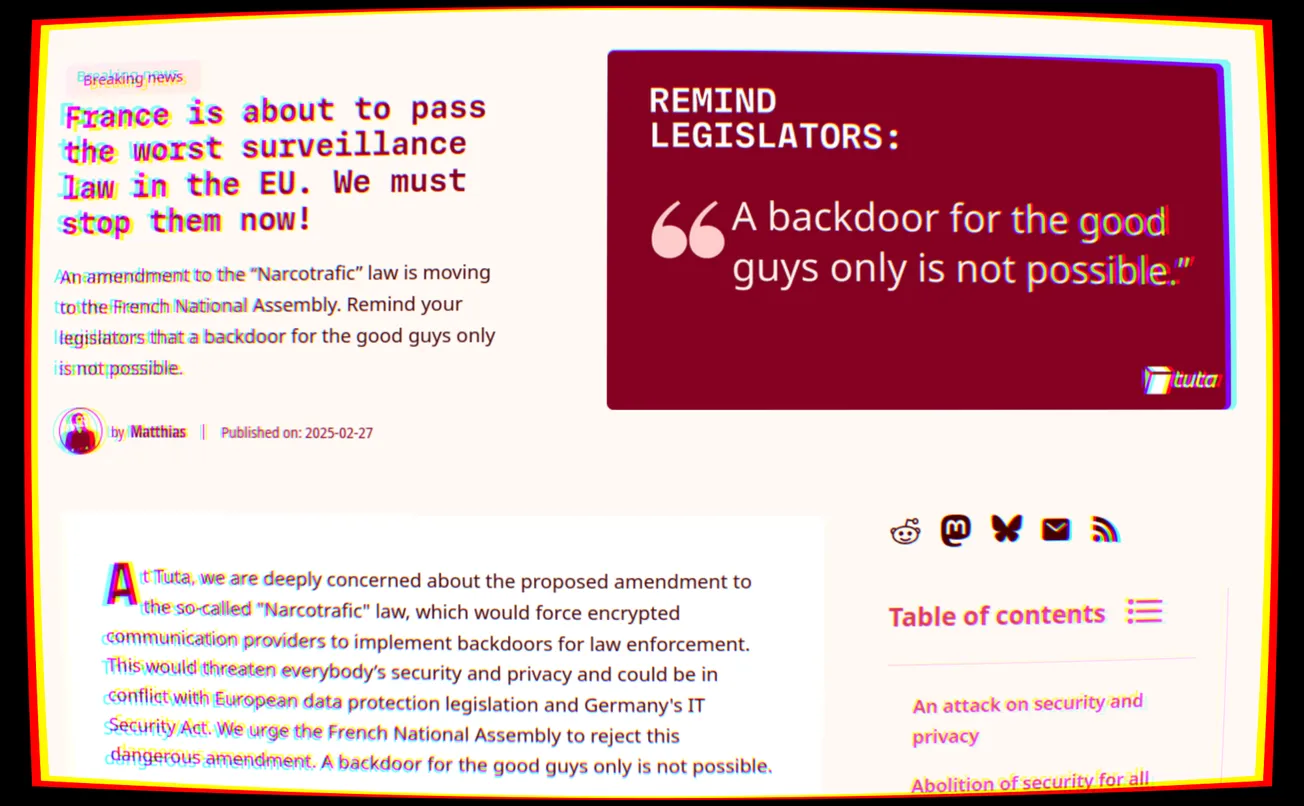

The Danish compromise text, according to EDRi (European Digital Rights, an association of digital rights organizations), discarded all the work of the Polish version and went back to the Commission proposal.

The main differences from the Commission proposal are:

- The Danish compromise would not scan for text, but only for photos and video. Scanning text for grooming would initially be out of scope, but there's another review clause that might add it back three years after the passing of the law. (This is taken from the compromise proposal of the Belgian presidency).

- The Danish compromise would apply to end-to-end encrypted services only through client-side scanning. Users would be able to opt out, but they would then not be able to send images or videos. (This is taken from the compromise proposal of the Hungarian presidency.)

This proposal sparked even more outrage, with Patrick Breyer (yes, his life is dedicated to fighting Chat Control only) calling it one of the worst proposals so far, even raising questions about whether they were really trying to solve the issue. Indeed, this version managed to lose the support of Denmark's own government.

It therefore came as quite a surprise when Denmark proposed a very different compromise in October, which was quickly accepted by a qualified majority.

The new Danish compromise would keep the monitoring on an opt-in basis instead of mandatory, safeguarding end-to-end encryption. It also excludes telephony and text messages and adds search engines to the list. Overall, it's not the worst compromise that we could've gotten, but it's not the best either.

It has a few core issues still. Firstly, according to - you guessed it! - Patrick Breyer, providers are obliged to take all appropriate risk mitigation measures to prevent abuse on their platforms. This could be seen as a loophole to force companies into scanning every private message again, whilst claiming it is for "risk mitigation".

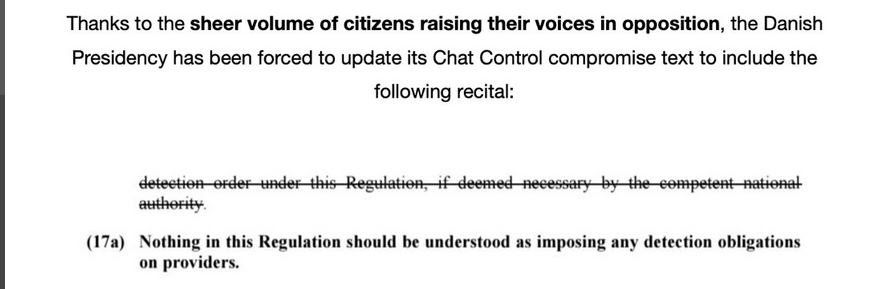

EDIT: I missed this in my original reporting, but the outrage towards this particular wording has worked: the Danish presidency updated the text to unambiguously state that no detection is mandatory. This is great news, as it only leaves open complaints about the ineffectiveness of the voluntary checks.

Furthermore, this proposal allows companies to monitor text messages between users as well, not just images and videos, through AI tools. Though it's good that it's not mandatory, there's still a significant worry of false positives creating issues.

Finally, the Council's draft mandate still requires ID or biometric checks to set up accounts on messaging platforms, to verify the user's age. If implemented incorrectly, this risks abolishing anonymous communication online, as you'd need to share your ID to make an account on any chat application, even a supposedly anonymous one.

What now?

If I've taught you anything, you should now know that the trilogues are under way, aiming to merge the three drafts into one. One is dystopian, one is pretty good, and one is a compromise that we might be happy about.

The lead negotiator for the Parliament side, Javier Zarzalejos, said that these negotiations are urgent, and the same has been said by Cyprus, which represents the Council. Indeed, there's a hard timeline on all of this: April 2026, when the ePrivacy Derogation expires for good.

In order not to make the current child sexual abuse monitoring tools (such as PhotoDNA) illegal, Chat Control has to be ready by then. This leaves us only a few months to change things, and the negotiations happen behind closed doors.

At this point, the most significant steps of the process have already happened, and the drafts have been decided upon. We're only waiting to know how they will be joined together.

That said, you can still pressure your Members of the European Parliament to fight for us. This, along with some public outcry about the Commission's proposal, will give the Parliament extra leverage to bring the final version closer to its text.

Personally, I recently attended an event hosted by one of the Members of the European Parliament I voted for, Brando Benifei, and he was kind enough to spare a few minutes for a message on Chat Control.