One of the strong points of Linux has always been how solid the experience of installing and managing software is. Contrary to what happens in the Windows and macOS world, software on Linux is obtained through a package manager: a program that installs, updates, and removes software, along with its dependencies, automatically.

Windows and macOS: life without good package managers

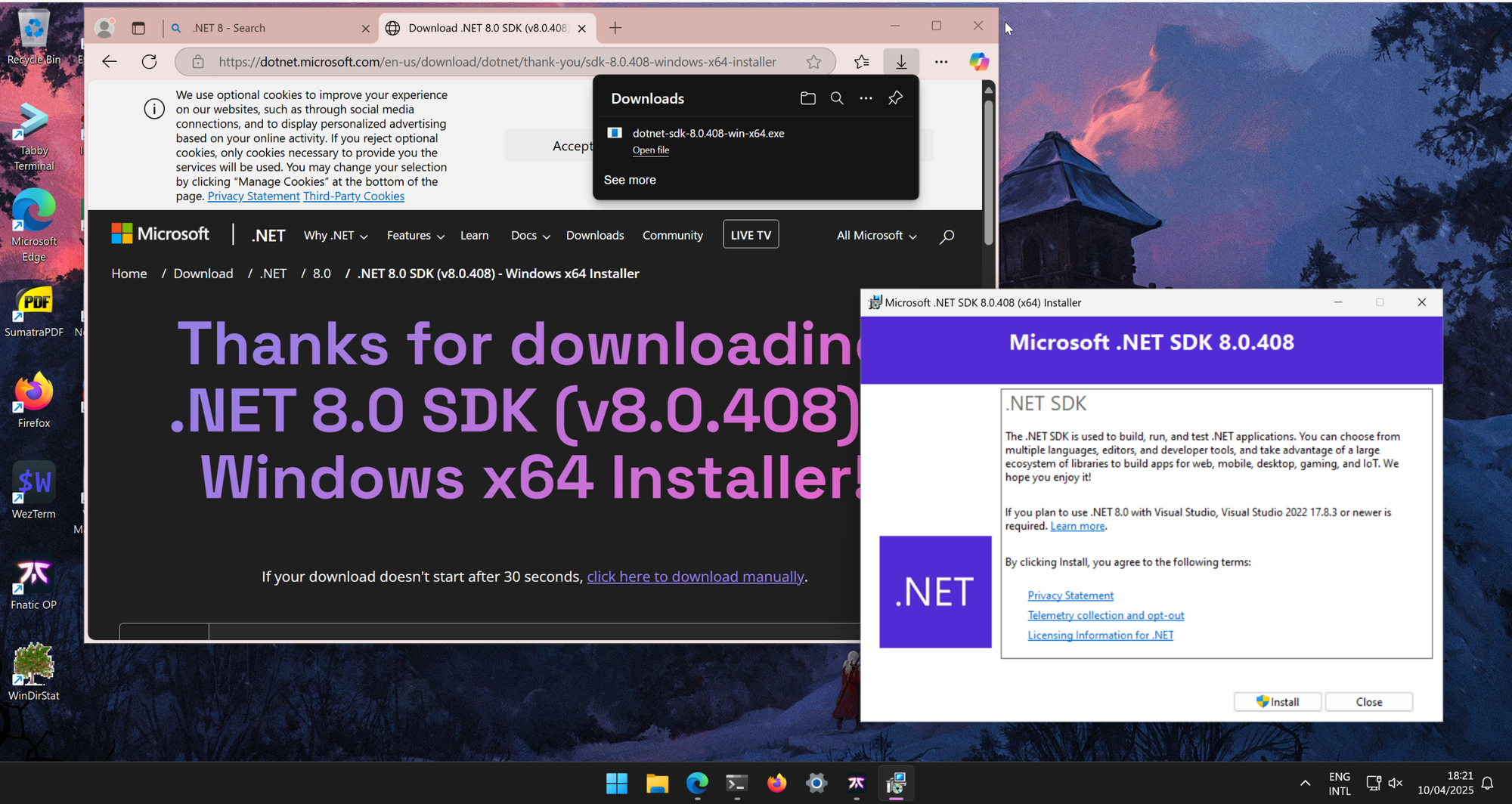

On commercial operating systems like Windows and macOS, the traditional way to obtain a piece of software is to use a web browser to navigate to some webpage, then download and run an “installer”. The installer is an executable that runs a series of scripts which, fundamentally, extract an archive into some system folders, add a few environment variables, add registry keys, and do who knows what else to make the software work.

This way of doing things is archaic and, frankly, painful. Imagine if you were to set up a brand-new machine, and you had to install every piece of software you use this way: it would probably take you a few days of work just to get everything ready. Just imagine setting up an entire development environment like this!

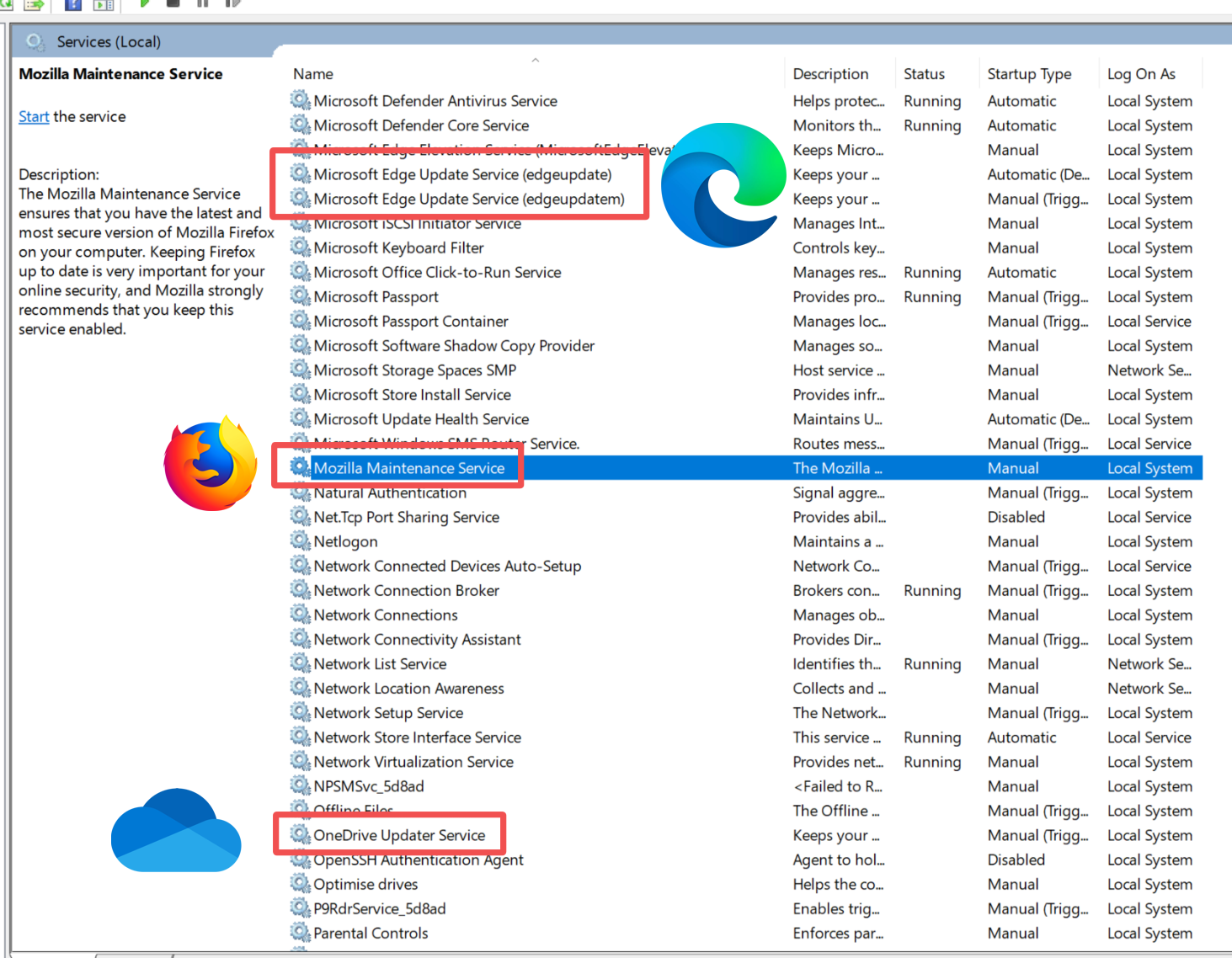

In addition to these faults, it is not a good idea to install anything this way. Firstly, you make yourself vulnerable to various kinds of attacks, like man-in-the-middle attacks, or a malicious actor managing to replace the executable the website serves with a version that was patched with a malicious payload. Software updates are also problematic: they need to be handled by the program itself, which often leaves your computer full of background processes that only exist to routinely check for updates to a lot of programs in the background.

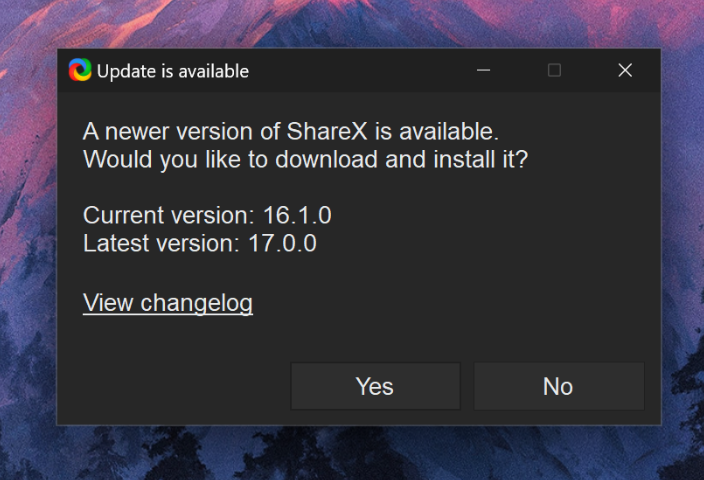

If a program does not register a background helper service just to be able to update itself, it needs to check for updates after it has started up, leaving you with the task of accepting the update and… busy waiting. It's probably a good time for a coffee break.

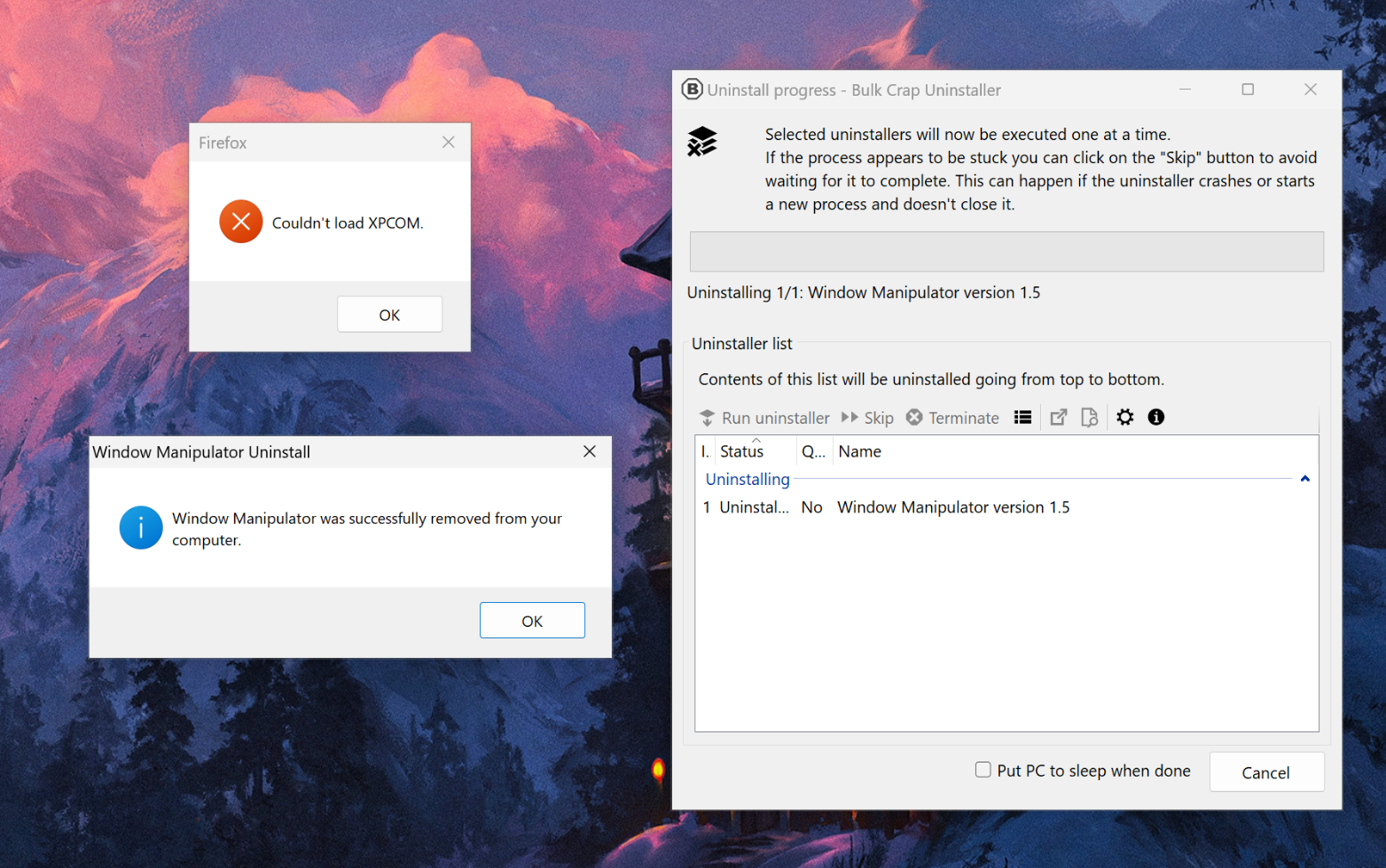

Not only does this situation make it so that every single application you add to your machine adds mental load, but, in most cases, it also adds resource load, and it is not trivial to completely remove from your machine. Installer and uninstaller scripts bundled inside of applications are private, and they are not managed by the OS. The cases where a developer took care of doing proper cleanup in their uninstaller script are pretty rare: often, there is no real way to ensure an application you had installed in the past is fully gone from your computer, leaving all kinds of traces behind, like non-working context menu items. These unclean removals cannot be fixed by anyone without a Windows system administration background. It's that hard. This is why, in Windows land, third-party uninstallers that try to look for leftovers, or download and run community-made uninstall scripts to get a bunch of programs to uninstall correctly, like Bulk Crap Uninstaller, are popular. This piece of software is a life-saver on Windows, but it, quite frankly, should not exist.

Microsoft has attempted to solve this problem with winget, an attempt at a “package manager” for Windows. On the surface, this looks good: you can open the CLI and install a program with winget install x, remove it with winget remove x, and keep everything up to date with winget update. Right… Right?

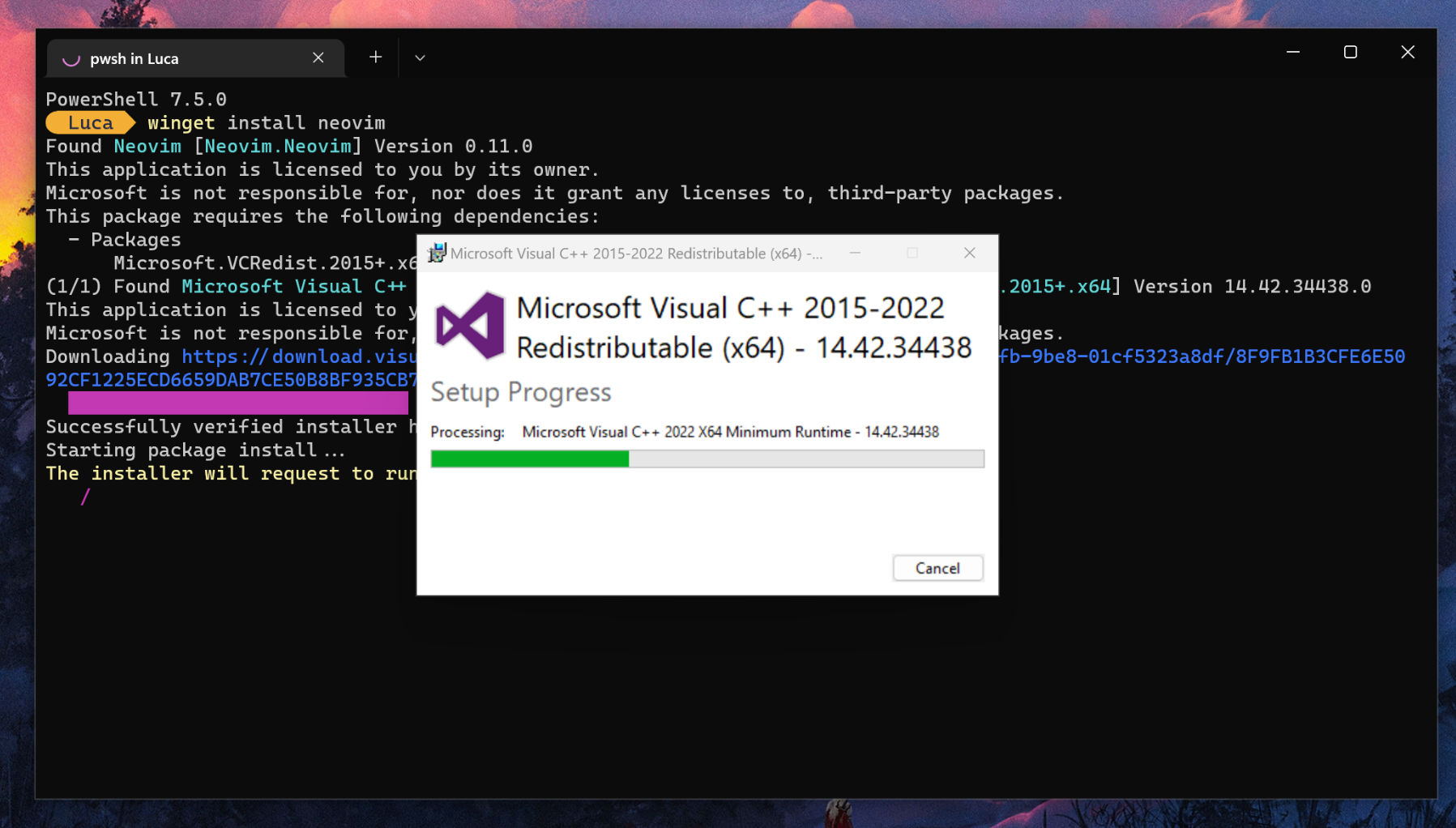

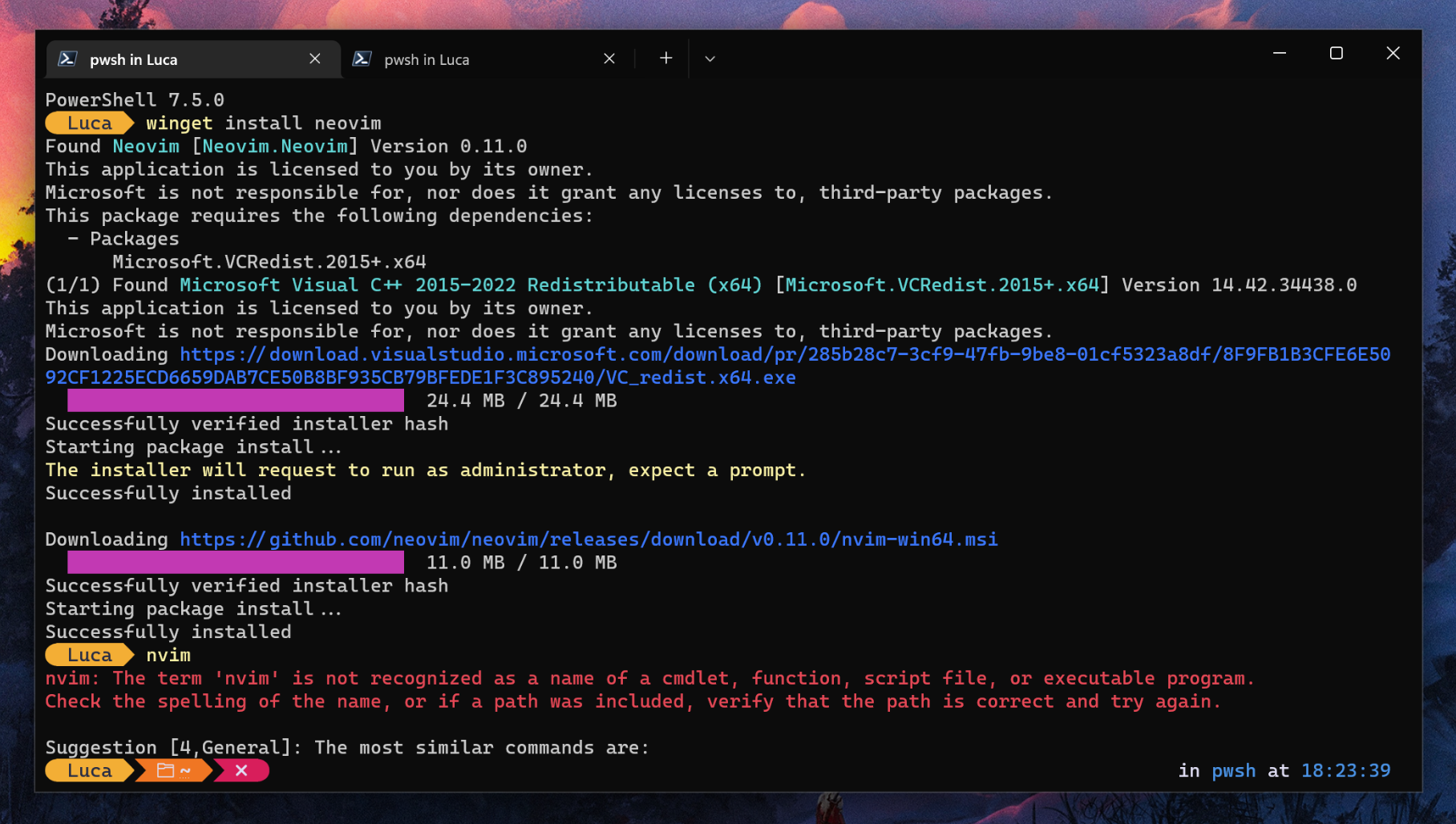

No, that couldn't be further from the truth. Despite what it claims to be, winget is not a package manager: it is merely a thin wrapper that downloads and runs the same installers and uninstallers that you can download from a web browser. It comes with the same pitfalls, and I honestly can't say it works properly. As a test, I tried to use winget to install my favorite text editor, Neovim, and then run it. The fact that there is no package to manage, and that the OS is not handling the installation of this program, is painfully evident from the output of the command: winget goes on to download and run the installers for a bunch of dependencies, running the installation scripts in unattended mode. However, the graphical windows for the various installed dependencies still show up, making it obvious that all winget is doing is running the regular setup scripts under the hood.

It does not solve anything, really: it adds some convenience, but it is merely an illusion. As a matter of fact, after installing Neovim, there was actually no way to run it! Nothing worked, not even spawning another terminal to reload the shell. On a proper package manager, this should never happen.

PATH manually?!

The situation is a little better on macOS. You can install Homebrew, a package manager that allows you to much more easily manage a development environment. Sadly, though, Homebrew has its limits: it cannot, and it will not, update system components, and it does not contain every program under the sun. It is mostly useful for the purpose of setting up a development or DevOps environment, but it still does not free you from having to install most software manually.

Linux: life is easier with a proper package manager

On Linux, things are radically different. Indeed, on most Linux distros, every single component that makes up the operating system is part of a package management system. In some cases, the package manager itself is the component that bootstraps the installation!

The beauty of Linux distros is that pretty much every piece of software you will use is provisioned by a package manager, from the Linux kernel to high-level applications. Obtaining new software is, in turn, very straightforward: in most cases, software is obtained from a single centralized repository, maintained by a trusted entity. There is no need to browse the web to find an executable: you can just use your package manager to search for any piece of software you need and then install it!

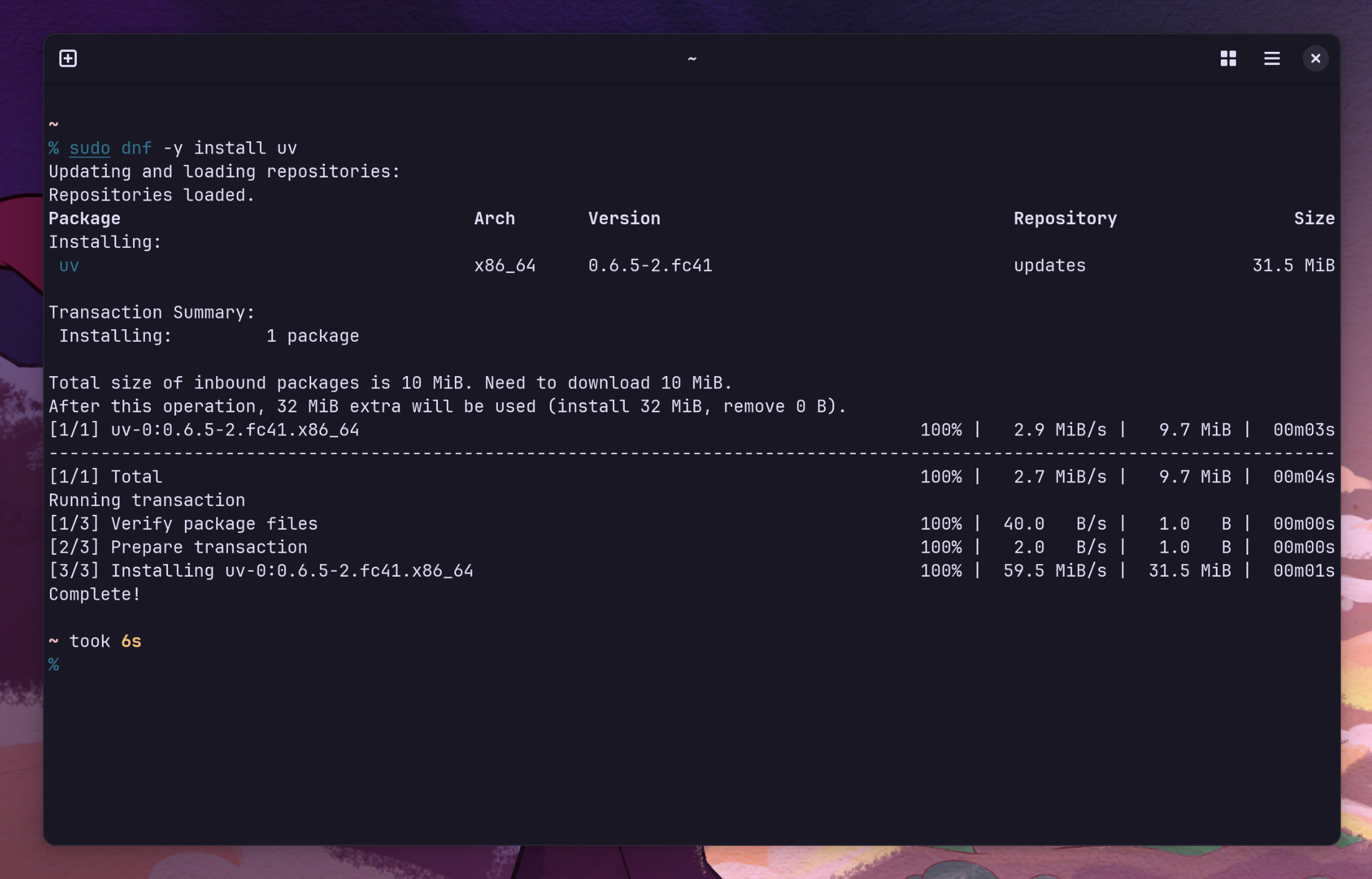

dnf package manager on Fedora

Unlike the package managers that are widely used on other operating systems, though, a Linux package manager does not stop at installing packages: it actually does what it says on the tin and properly manages all the installed packages. Uninstalling a package completely is easy, and, aside from the files the program itself may have created in the user's home directory, it completely removes all traces of a program. There is no mess to clean up: when a package gets deleted, it's gone for good.

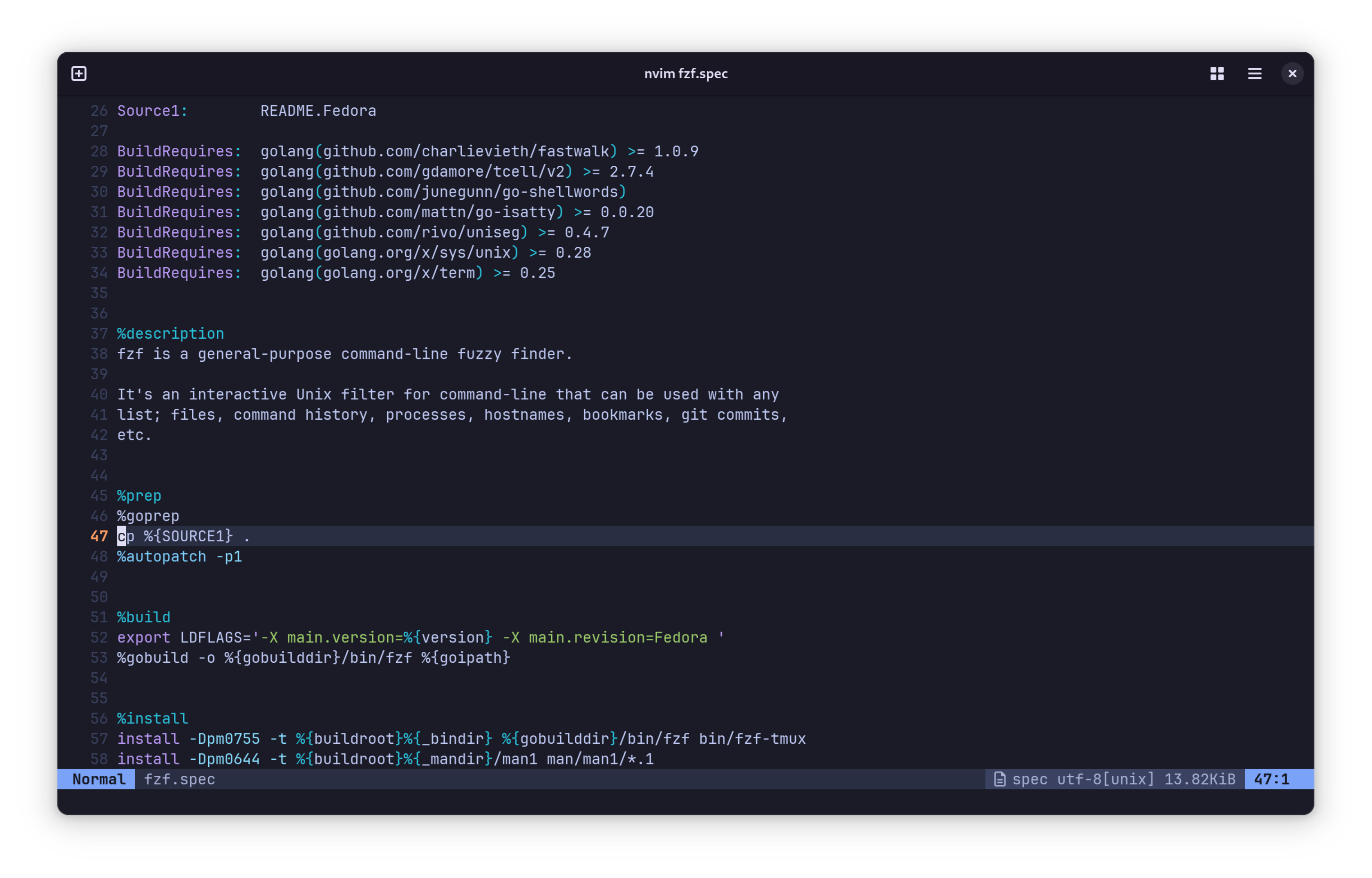

Rather than just running installer scripts, package managers handle packages. A package is an archive that contains all the files that a component, such as a piece of software or a font, is made of, as well as other identification files to go with it. Packages are built from a specification file that acts as a blueprint and contains instructions on how to build the package, allowing even complex deployments to be automated. Additionally, the package manager has a data store where information about packages is kept. It knows where all the files that make up a package are, so it also knows what to remove.
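To make the idea of that data store more concrete, here is a minimal sketch in Python. It is not how dnf, apt, or any real package manager is implemented: the `PackageDatabase` class, its methods, and the file paths are all hypothetical, and only illustrate why tracking file ownership makes clean removal possible.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PackageDatabase:
    """Toy stand-in for a package manager's local data store (hypothetical)."""

    # Maps each installed package name to the set of file paths it owns.
    owned_files: dict[str, set[str]] = field(default_factory=dict)

    def install(self, name: str, files: list[str]) -> None:
        """Record a package and every file it places on the system."""
        for other, paths in self.owned_files.items():
            conflict = paths & set(files)
            if other != name and conflict:
                raise ValueError(f"{name} conflicts with {other}: {sorted(conflict)}")
        self.owned_files[name] = set(files)

    def owner_of(self, path: str) -> str | None:
        """Return the package that owns a path, or None if it is unmanaged."""
        for name, paths in self.owned_files.items():
            if path in paths:
                return name
        return None

    def remove(self, name: str) -> list[str]:
        """Forget a package and return the files that should be deleted."""
        return sorted(self.owned_files.pop(name))


# Illustrative file lists, not the real contents of these packages.
db = PackageDatabase()
db.install("fzf", ["/usr/bin/fzf", "/usr/share/man/man1/fzf.1.gz"])
db.install("neovim", ["/usr/bin/nvim"])
```

Because every file is attributed to exactly one package, removing `fzf` yields the full list of paths to delete, and nothing belonging to `neovim` is touched.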

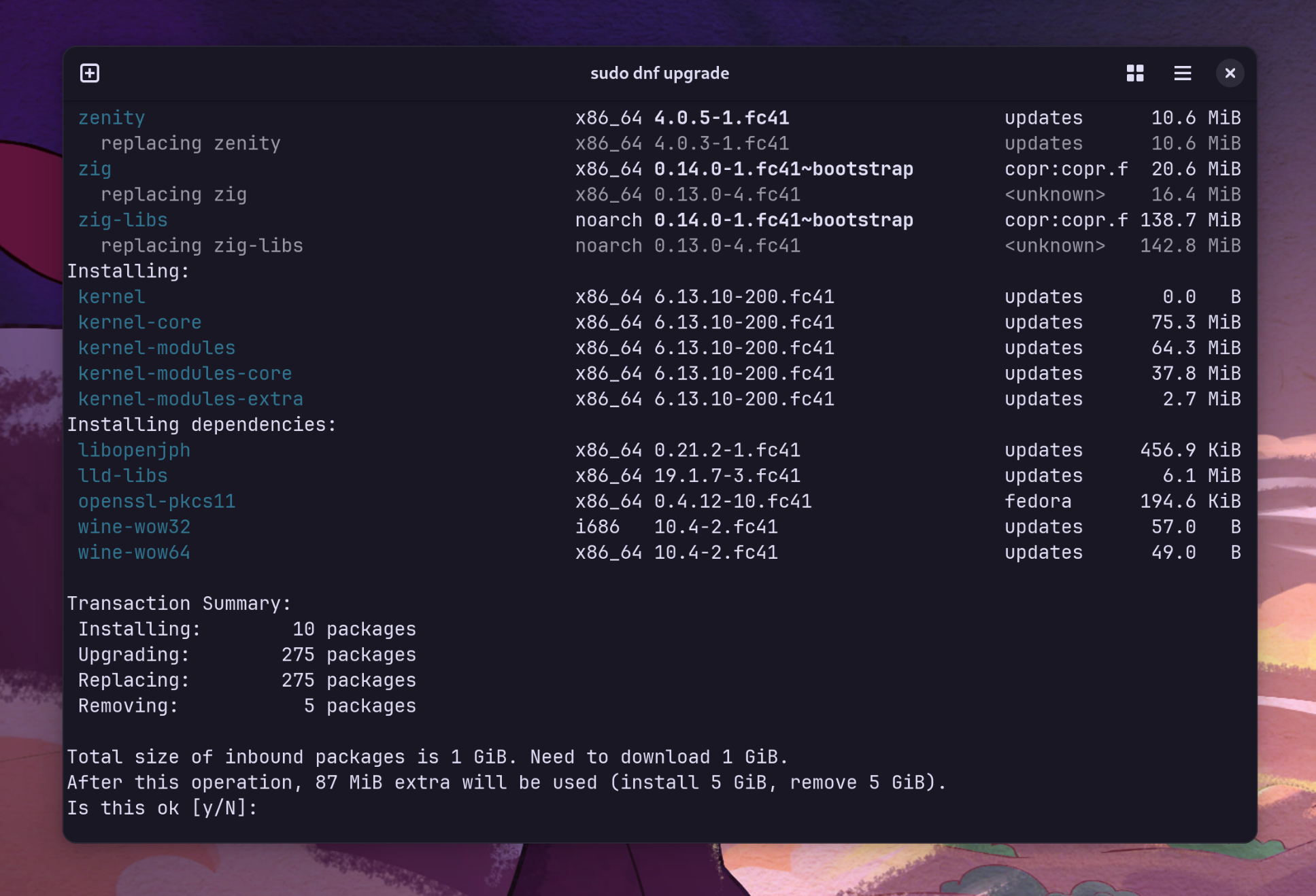

spec file looks like. This is, specifically, the spec file for fzf on Fedora.

Much in the same way, package managers provide a central place to upgrade every single piece of software on the system, from the base operating system components to your web browser. A command is all it takes, and your system is completely current. The system is also far more lightweight as a result: there is no need to run heavyweight daemons just to keep software up to date, nor do developers need to implement their own separate update logic. The package manager takes care of it all.

dnf upgrade

Typically, every Linux distro (or, often, every family of Linux distros) has its own dedicated package manager. Arguably, the most popular package manager that comes to mind is apt, which ships with Debian and Ubuntu systems. Fedora-like systems, such as RHEL and AlmaLinux, use the dnf package manager. openSUSE and SLES use zypper, while Arch Linux uses the simpler pacman. All of these package managers have slightly different sets of features, and they may have different design philosophies, but the core principle is the same: manage the Linux filesystem as a set of organized components.

It does not stop there, though. In the modern Linux ecosystem, these classical package managers are only one of the many kinds of package management solutions that exist.

The problem with traditional package managers

Traditional packages are great, but they have several limitations. One of them is that they are tied to a specific distro version: since packages depend on each other in a graph structure, it can be pretty challenging to distribute a package for various distributions. While package managers typically support adding third-party sources, called repositories, keeping these packages up to date for multiple versions of multiple distributions requires a lot of time and effort. This makes targeting Linux systems pretty hard. In the past, this created a situation where not every distro had access to pre-packaged versions of every packaged piece of software. Linus Torvalds made some great points on this back in 2014, at DebConf 14, citing this situation as the reason Linux was struggling to gain desktop market share, and as the reason he thought Google's ChromeOS would have a better shot.

With this model, the software that enjoys wide adoption on the Linux desktop is limited to the programs that get packaged officially by distribution package maintainers, making it to the official repos. From a developer's standpoint, this is a hard gate to get through. From a package maintainer's perspective, every new package adds to the workload. Since package maintainers mostly work on a voluntary basis, it is often not practical to add a lot of packages to the mix.
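The dependency graph mentioned above is what makes cross-distro packaging hard, and a small Python sketch can show why. The graph below is a made-up, simplified example (the package names are real, but the edges are assumptions for illustration), and the helper functions are hypothetical, not part of any package manager.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency graph: each package maps to the packages it needs.
dependencies = {
    "neovim": {"libuv", "luajit", "glibc"},
    "libuv": {"glibc"},
    "luajit": {"glibc"},
    "glibc": set(),
}


def install_order(graph):
    """Return an order in which every package comes after its dependencies."""
    return list(TopologicalSorter(graph).static_order())


def unsatisfied(graph, package, available):
    """Return the direct dependencies of `package` that a repository lacks."""
    return sorted(graph[package] - available)


order = install_order(dependencies)

# A distro release whose repository happens not to carry luajit.
missing = unsatisfied(dependencies, "neovim", {"glibc", "libuv"})
```

A packager has to make sure `unsatisfied` comes back empty for every release of every distribution they target, which is exactly the maintenance burden described above.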

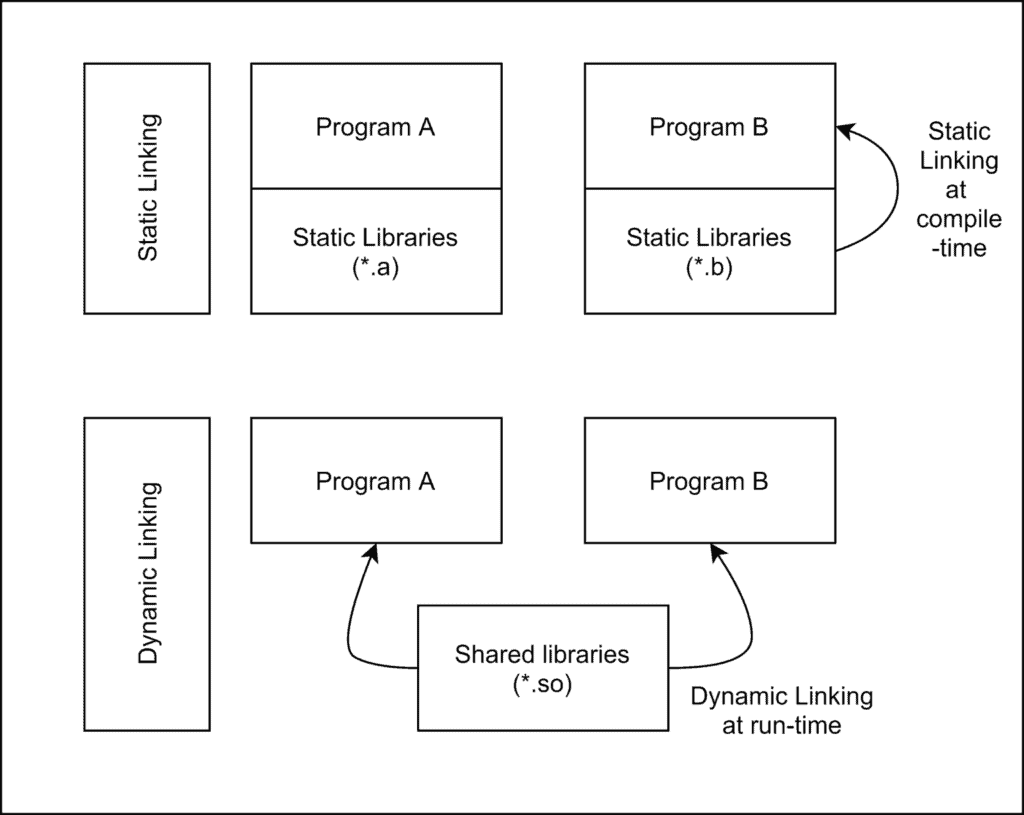

Developers worked around this in various ways. A popular way was shipping a "portable" installation through a .tar.gz archive, but it often didn't work properly. The program was dynamically linked, and the .so libraries were shipped in a subdirectory. This, however, was not enough to guarantee true cross-distro compatibility: it was not feasible to include every library under the sun, and this did not protect you from behavioural changes caused by libc version differences. Another popular option was shipping a statically linked binary, which means that external libraries are linked into the executable at build time, rather than loaded from the system at run time. Even this is not a silver bullet, though, and it has its problems. One particular implication that is shared across both approaches is that, if you want to make a piece of software distributed this way truly cross-compatible, you need to include everything it may possibly depend on in your build, including a libc implementation. At that point, it even starts to make little sense to use dynamic linking: no system libraries are safe to fall back to, and you have to ship everything your program needs anyway. This creates software releases that are hard to package and extremely heavyweight, as each of them must ship a good subset of a Linux userspace to truly work everywhere. Developers have to pick their favourite compromise between package size and the likelihood that their deliverable will run on a random system, which seldom works out.

The rise of universal package managers

This is why container-based package management solutions exist.

Flatpak came up under the name of xdg-app in 2015. The core idea of Flatpak is simple: create a common runtime that applications can run on, irrespective of the Linux distro they are running on. This is sort of similar to the idea of Java and the JVM: "Write once, run anywhere". Differences between environments get abstracted away by only having to package the runtime itself for each specific distribution, and then just running applications on top of that shared runtime.

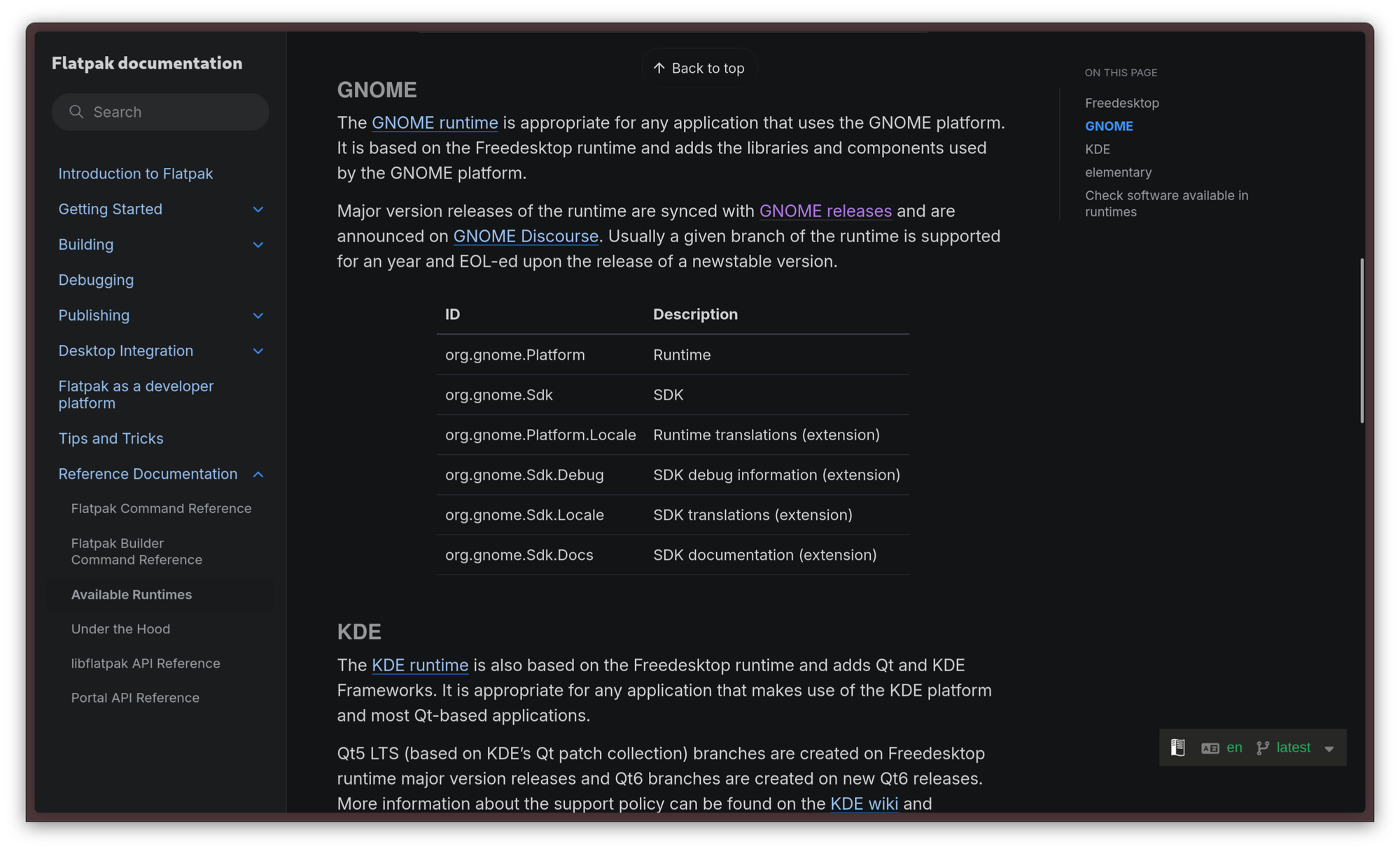

The use of shared runtimes fixes the problems with big tar archives and statically linked binaries: a package maintainer does not necessarily need to provide every single library themselves, but they can bind their package to a Freedesktop Runtime. A Runtime is a set of libraries and dependencies that are pinned to a specific version. To upgrade these dependencies, the package needs to move to a newer version of the runtime. Due to this, dynamic linking can be used safely, as a packager can expect that a whole set of libraries will be available in the runtime they choose, and that they will be binary-identical on every target system, no matter the Linux distro. Several runtimes are available. Typically, a package can target a runtime from a desktop-environment-specific "world", picking between GNOME, KDE, Elementary and more. For example, an application built with GTK will use the GNOME runtime, while an application built with Qt will use the KDE runtime.

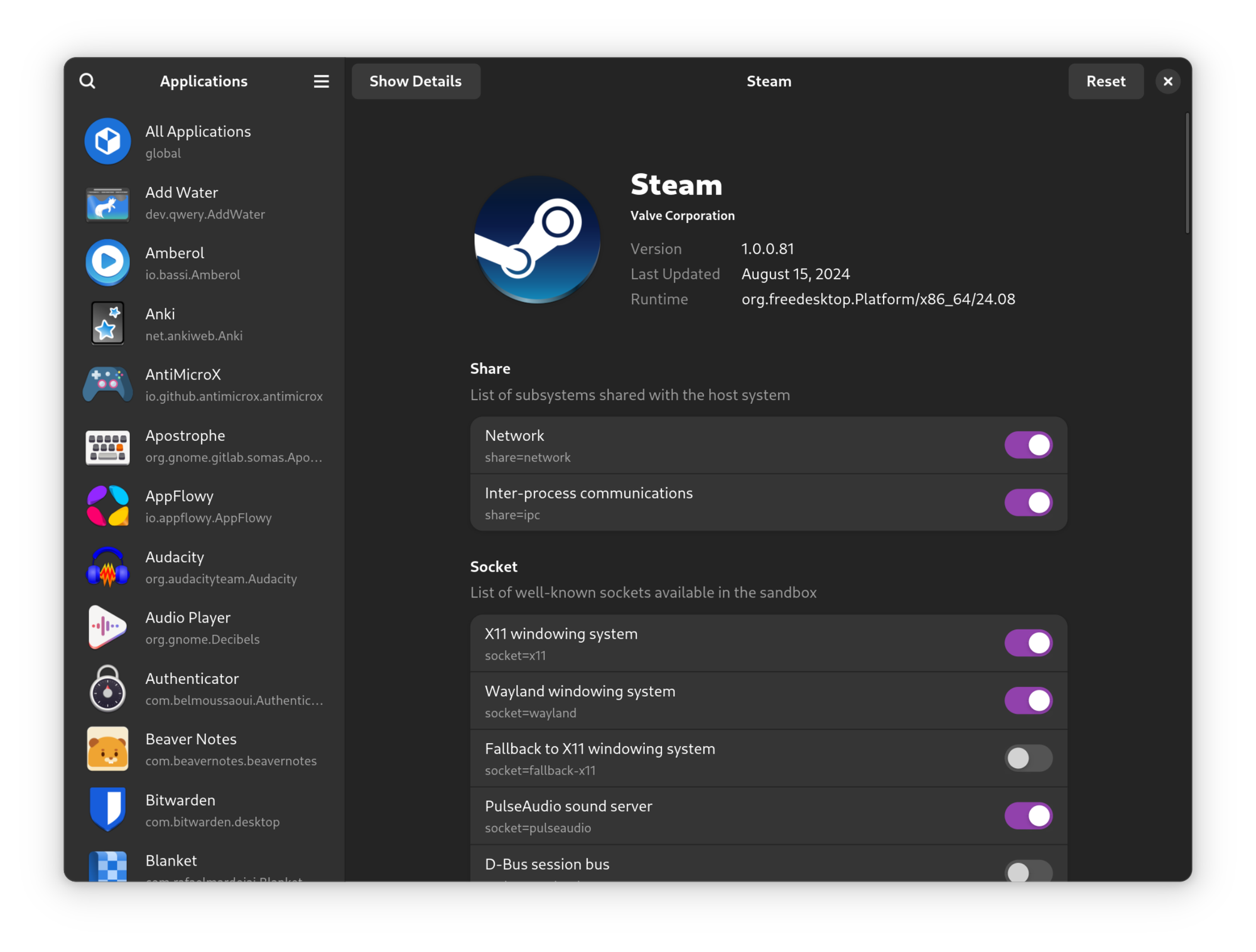

Flatpaks are also much better for security. Not only do they abstract the underlying system libraries through runtimes, they also provide a layer of isolation and sandboxing through bubblewrap. This gives applications a permission model. This is great for proprietary software in particular: since you cannot trust any piece of non-free software that you cannot audit, you can limit what it can do on your system. For example, by default, the Steam package on Flatpak does not allow Steam to access all your files, but only a subset to allow things like custom banners and the built-in music player to work. The permission model is quite thorough, so it is possible to configure the sandbox rather well. The initial configuration is made by the packager, but the user can refine it with local configurations. Flatseal is a very popular graphical application that can be used to configure these permissions.

Flatpak supports multiple repositories, but the most popular one that everyone uses is Flathub. Nowadays, pretty much every single application under the sun can be found on Flathub, and they also have a very appealing home page!

Flatpak has its roots in the GNOME community, although it is not tied to GNOME. Its adoption started in the Fedora ecosystem, but it has since become an industry standard across most distributions. It is preinstalled on many Linux distros and, when it is not, it can typically be installed from the default repos.

In Ubuntu land, however, the industry standard is Snap. Born from the ashes of mobile operating system Ubuntu Touch, Snap is Canonical's take on a similar solution. The way it works is somewhat similar to Flatpak, although the implementation is very different under the hood. The first difference, and the source of most of the criticism of Snap, is the fact that it is limited to Canonical's Snapcraft repository, and it does not support multiple repos, like other package managers do. The different implementation also makes the Snap daemon, snapd, depend on systemd for basic functionality and on AppArmor for full isolation support, which is a barrier to getting Snap working on some Linux distributions, although basic support is possible for every Linux distro that uses systemd as init.

Ubuntu is one of those cases where the appeal of having a way to distribute software other than through the same repositories that base system components are pulled from is immediately visible. Ubuntu LTS is one of the most widely used solutions on the desktop, especially in corporate environments, because it is ideal for use cases where one does not necessarily want the shiny new things immediately, but prefers a more stable base, with fewer surprises, that can be used for several years without dangerous major version bumps to major system components. One of the main problems with Ubuntu LTS in the distant past was that, since the libraries that ship in the repos are tied to what the older LTS system components require, users would only have access to increasingly outdated major versions of several applications. Snap solves that: you can keep a stable, predictable Ubuntu LTS base, taking advantage of its predictability for software where you expect not to be rug-pulled, like compilers, while all the applications that you want the latest versions of are installed through Snap, isolated from the base system.

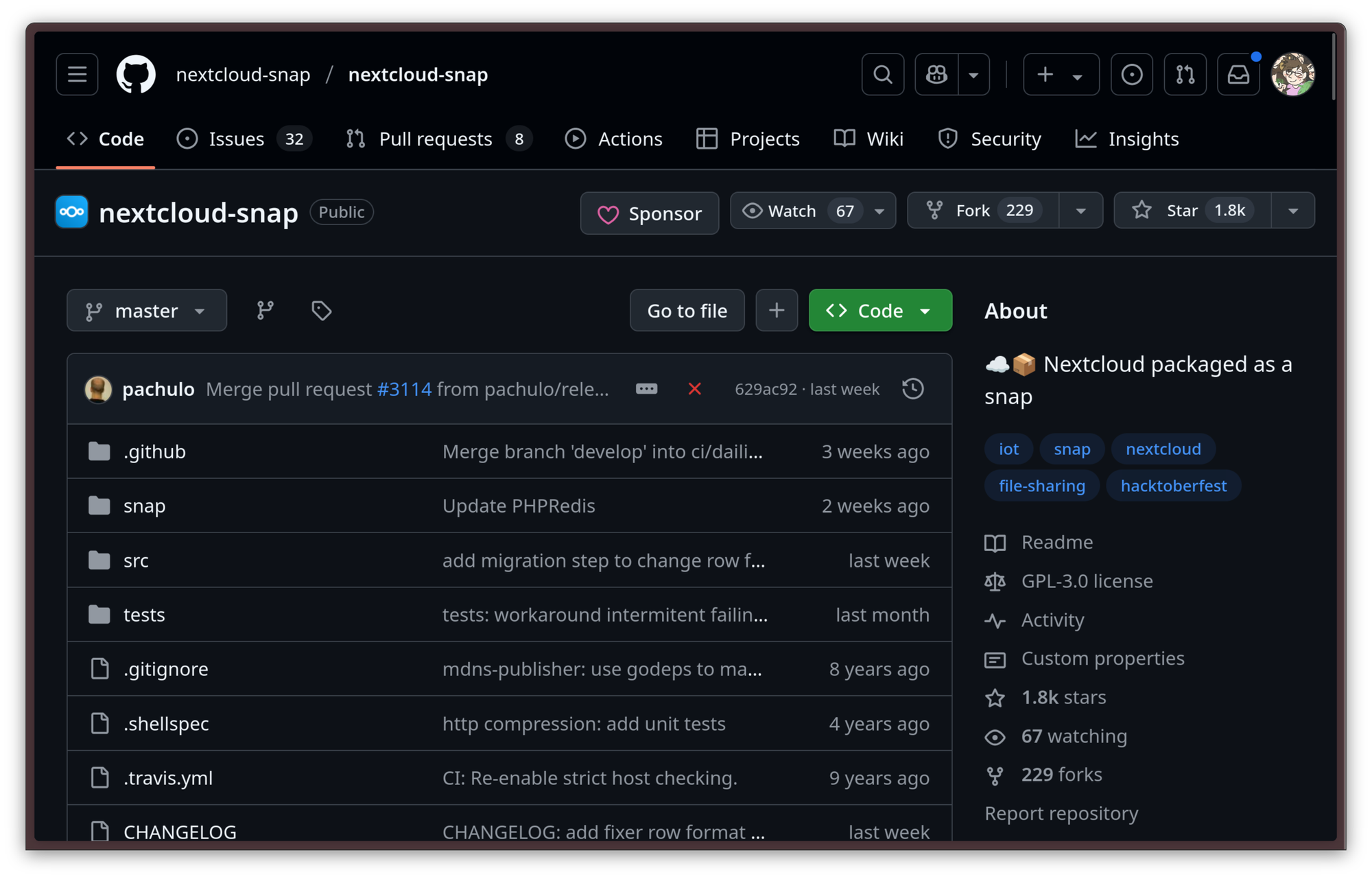

Although it is often the subject of criticism, Snap is not worse than Flatpak; it is just very different. Any choice in software comes with a trade-off, at the end of the day. Rather than focusing on just targeting graphical desktop applications, Snap is significantly more versatile: unlike Flatpak, it is also suitable for the installation of CLI applications and the deployment of cloud services. For any service that is available on the Snap Store, Snap lowers the barrier to self-hosting an instance of it on a server in important ways: anecdotally, the easiest and most straightforward way to get started with Nextcloud, an alternative to Google Drive and G Suite, on your own server is to choose Ubuntu Server and install the service from Snap with one single command.

It gets crazier, though. Thanks to Ubuntu Core, a minimal and immutable version of Ubuntu built on top of Snaps, snapd can directly target entire embedded / IoT devices. For this use case, one very important feature of Snap is its ability to perform automatic updates and perform them atomically: either the system update gets applied successfully, or it does not get applied at all. This is a very comfortable solution to provision a fleet of embedded devices in a production chain or even on the end-user market: you can rest assured that you can keep those devices up to date safely, reducing the amount of issues and customer claims to just a few edge cases, at worst.
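The "all or nothing" property can be illustrated with a generic Python sketch. This is not how snapd is implemented; it only shows one common way to get atomic switching on a POSIX filesystem: unpack the new revision next to the old one, then flip a `current` symlink in a single rename. The `activate` function and the directory layout are hypothetical.

```python
import os
import tempfile
from pathlib import Path


def activate(root: Path, revision: str) -> None:
    """Atomically repoint root/current at root/<revision>."""
    tmp_link = root / "current.tmp"
    if tmp_link.is_symlink():
        tmp_link.unlink()  # clean up a leftover from an interrupted run
    os.symlink(revision, tmp_link)  # relative link to the new revision directory
    # Renaming over the old link is a single atomic step on POSIX systems:
    # readers see either the old revision or the new one, never a mix.
    os.replace(tmp_link, root / "current")


with tempfile.TemporaryDirectory() as d:
    root = Path(d)
    for rev, version in (("rev1", "1.0"), ("rev2", "2.0")):
        (root / rev).mkdir()
        (root / rev / "version").write_text(version)

    activate(root, "rev1")
    before = (root / "current" / "version").read_text()

    activate(root, "rev2")  # the "update"
    after = (root / "current" / "version").read_text()

    activate(root, "rev1")  # rolling back is the same operation
    rolled_back = (root / "current" / "version").read_text()
```

If the device loses power before the rename, `current` still points at the old, complete revision, which is the behaviour you want on a fleet of unattended devices.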

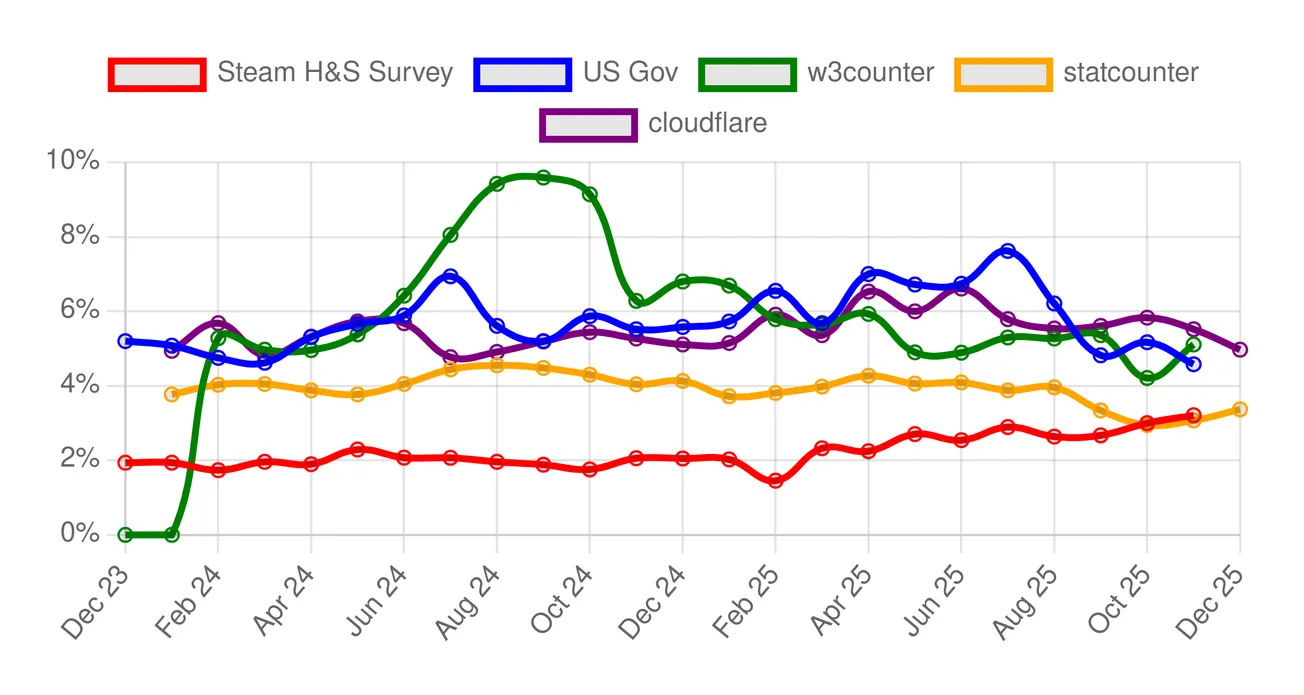

It doesn't matter though. The gist of it is that most Linux distributions you install will ship with some kind of universal, container-based package management solution that you can use immediately. There is clearly a big shift in this direction, as more and more users are adopting this paradigm.

However, that doesn't mean container-based package managers are the end-all-be-all. Several other alternative solutions exist.

Nix doing things its way

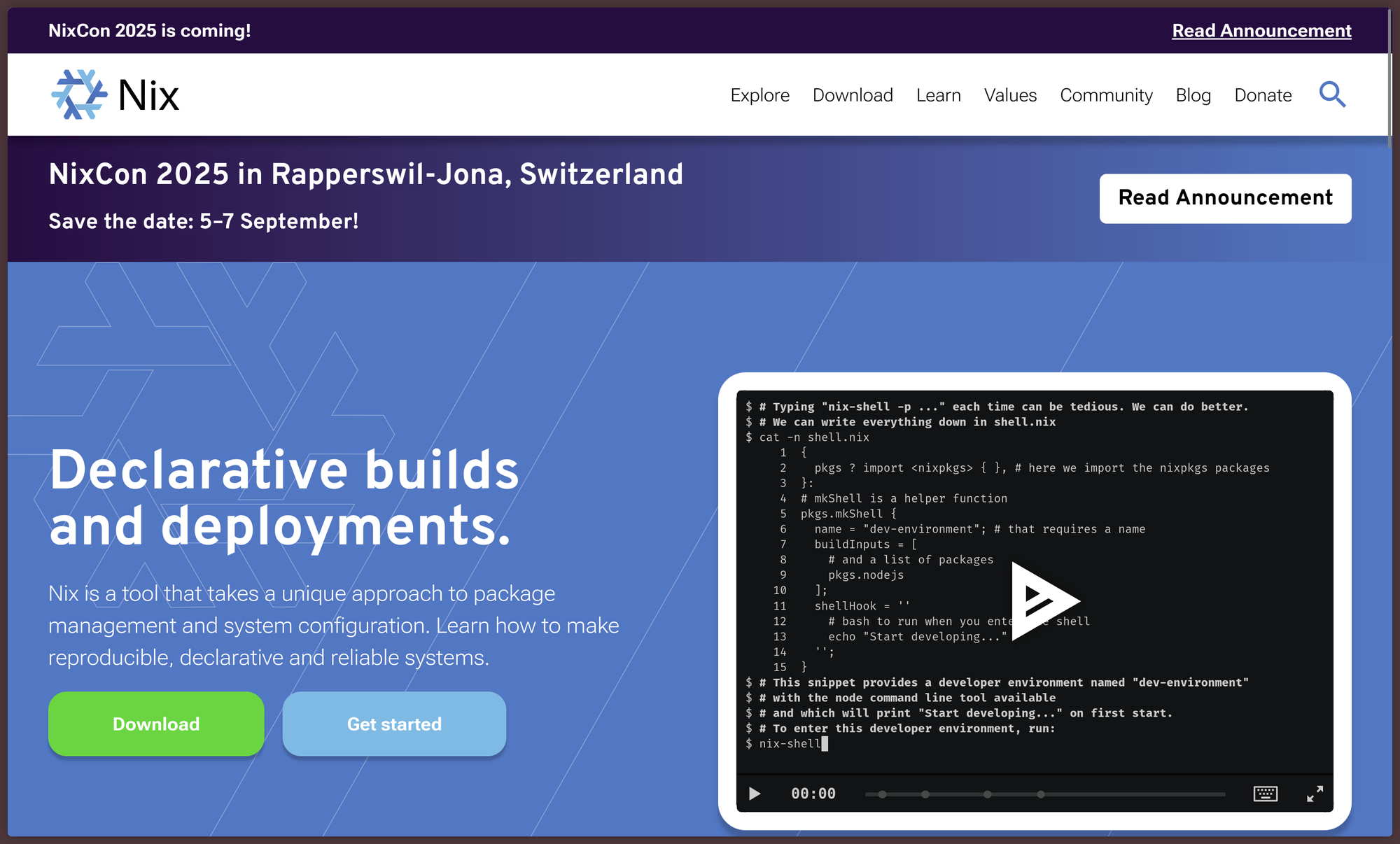

One of them is Nix. Nix is a very innovative package manager that works very differently than other package managers and it brings a lot to the table. It is so different to other approaches that none of the skills from traditional package management are transferrable, but the reward for learning it is very appealing.

The main difference is that, while most other package managers are imperative, Nix is declarative.

An imperative package manager mostly runs commands. To install Neovim on apt, you would open a shell and run sudo apt install neovim. To remove it, once again, you open a shell and run sudo apt purge neovim. The way you interact with the package manager is by telling it what to do action by action.

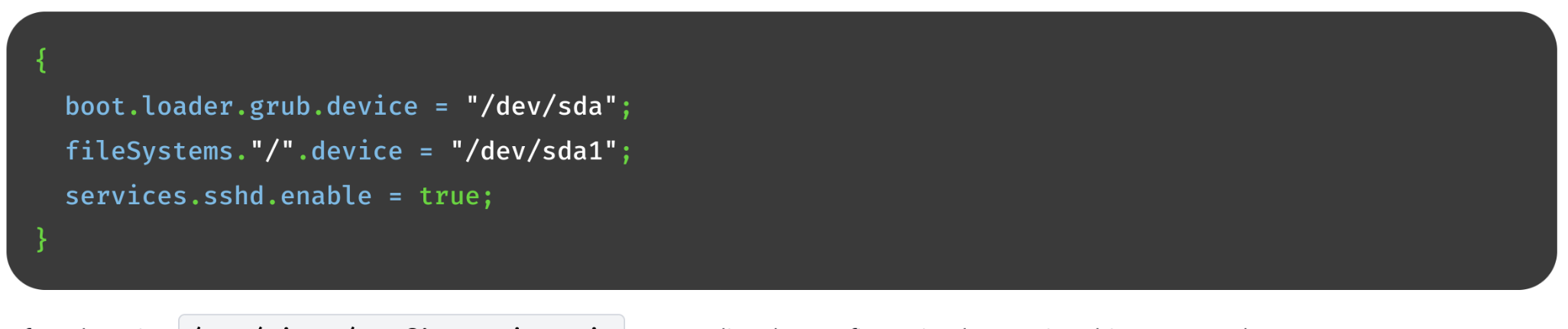

Nix is declarative. With Nix, you don't have to install every package manually. What you do is provide a file, that Nix reads, where you declare all your installed packages, and how they are installed. When you sync Nix with your configuration file, Nix puts the system in the state that is described in the configuration file. This is further extended if you use NixOS, a Linux distro that is deeply integrated with the Nix package manager, to the point of letting it configure system components like the bootloader as well.

configuration.nix example

Nix can also install and provide multiple versions of packages side by side, do atomic installs and removals like Flatpak and Snap, and reliably reproduce the same state starting from any other state, which is also what makes safe rollbacks possible. Nix allows users to define different environments, with different pinned versions of packages, which makes it particularly useful for software development: let's say you are working on two projects that require two different versions of gcc and other tools to compile and work. Nix would solve this problem in an elegant way. And, most importantly, it would do this without containers. If you are interested in learning more about it, I'll leave you with Nix: How it works.
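The difference between the imperative and the declarative model described above can be sketched in a few lines of Python. This is only an illustration of the idea of converging on a declared state: Nix itself does not diff package lists like this, and the `plan` function and the package sets are hypothetical.

```python
from __future__ import annotations


def plan(desired: set[str], installed: set[str]) -> dict[str, list[str]]:
    """Compute the actions needed to move the system to the declared state."""
    return {
        "install": sorted(desired - installed),
        "remove": sorted(installed - desired),
    }


# What the configuration file declares versus what is currently on the system.
declared = {"neovim", "fzf", "git"}
current = {"git", "nano"}

actions = plan(declared, current)

# Once the system matches the declaration, syncing again is a no-op.
noop = plan(declared, declared)
```

With an imperative tool, you are the one who works out the `install` and `remove` lists and types them in, one command at a time. With a declarative tool, you only edit the declaration, and the tool works out the rest.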

Package management on Linux is great

Package management on Linux is great and varied. In fact, it is so varied that I had to cherry pick just a few notable examples, because there are many more incredibly interesting and clever package management solutions that I did not even mention. This is a good thing, though: there is no shortage of good package managers on Linux, and development around them is progressing steadily.

Even if commercial operating systems are finally beginning to get a taste of how using a package manager can positively impact user experience, the quality and usefulness of its package managers are still among the things that make Linux clearly shine as a computing platform over almost every other alternative.