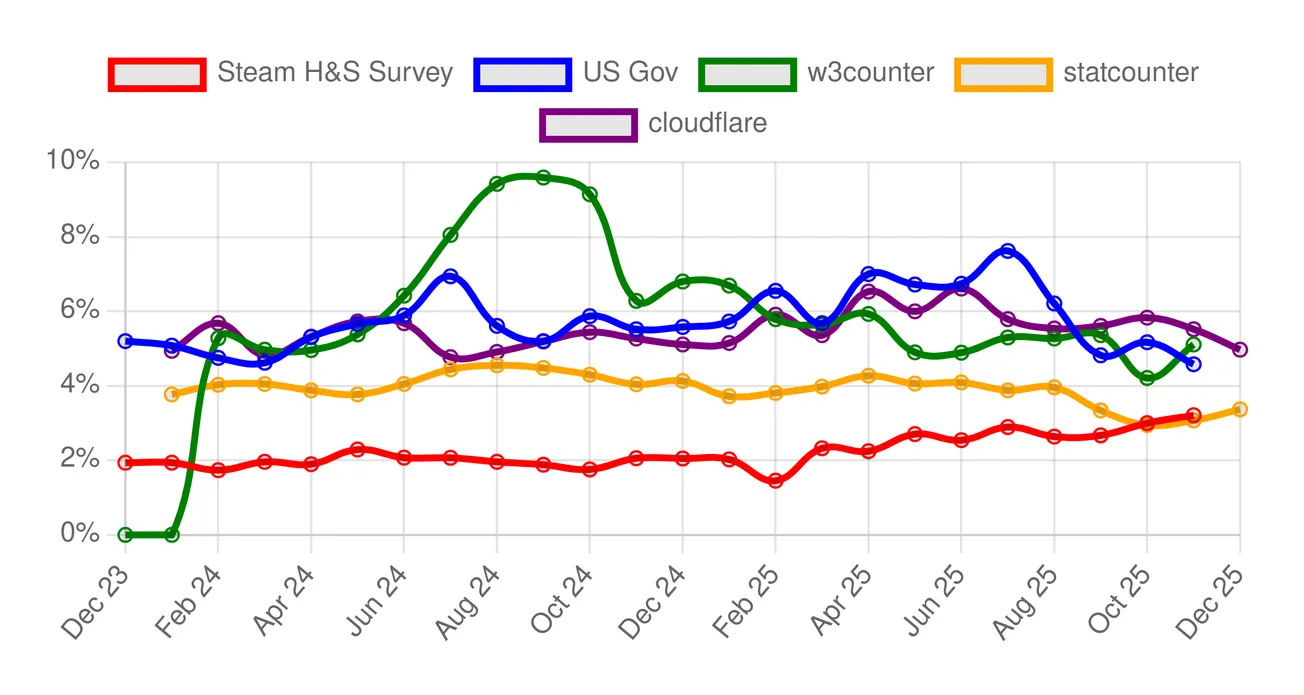

In the big year of 2025, more and more of the Linux world has finally moved on to using a Wayland graphical session on their desktops, rather than a classical X11 server. The transition has been proceeding quickly as of late, though that was not always the case: for a long time, many people simply couldn't leave X11 behind, because no Wayland compositor out there had quite reached feature parity with the X server yet.

Let us take a step back, though. What is Wayland, and what is this X Server that came before it?

The Early Days: X.Org

For the more seasoned of our readers, X.Org is already a familiar name.

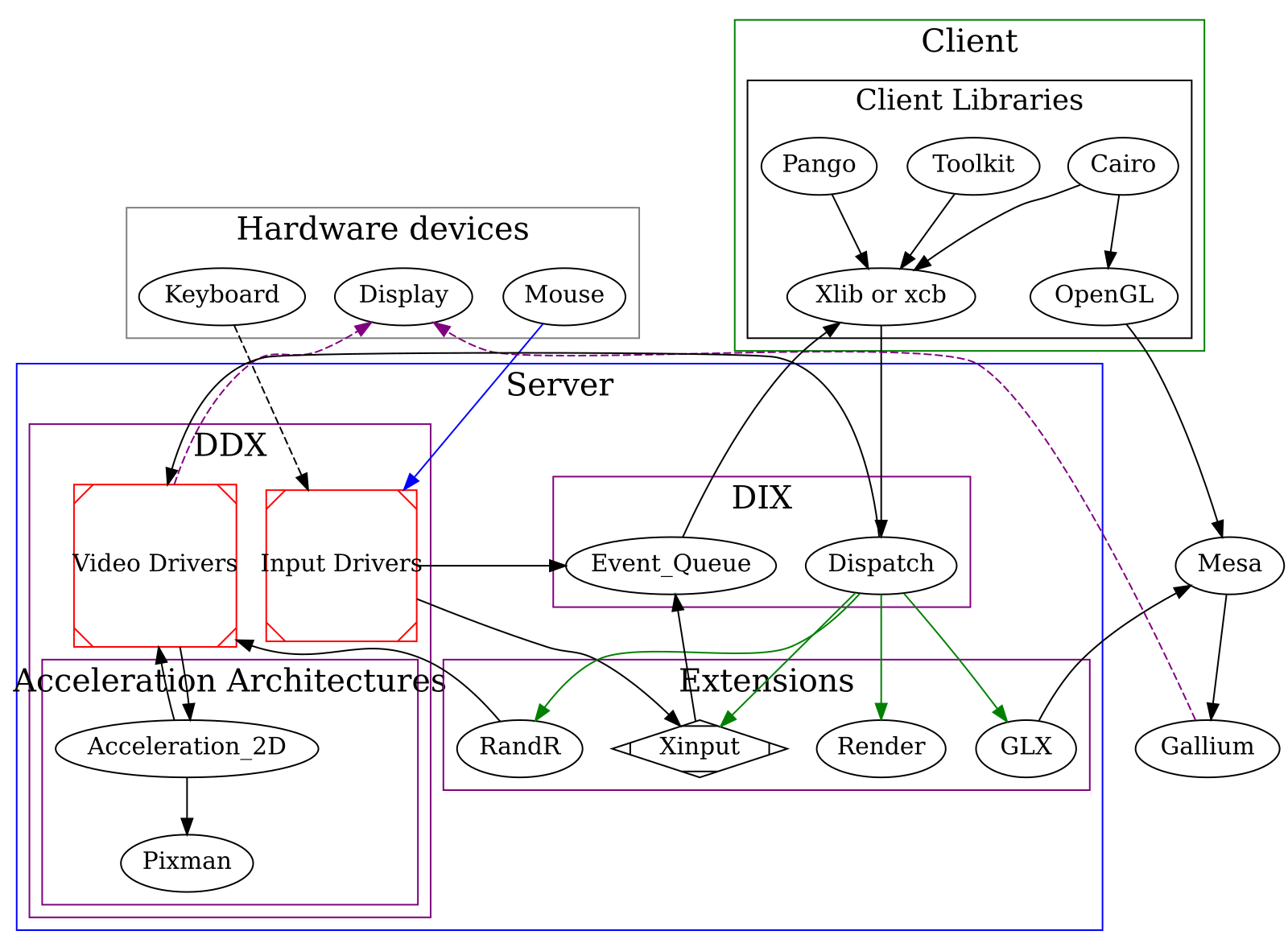

X.Org is a FOSS implementation of the X Window System, also known as X11. X11 was the windowing system used as a display server both on UNIX systems and in the Linux userspace: the piece of software that manages windows, talks to the kernel's mode-setting interface (Kernel Mode Setting, or KMS) to get frames onto the display, and takes input from devices such as mice and keyboards. There were many implementations of this standard across several operating systems, including some commercial ones. Linux distributions adopted the Xorg server, a completely free and open source implementation.

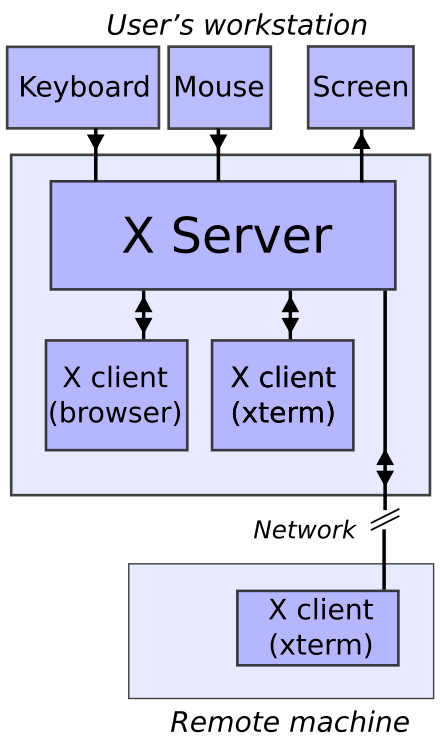

Due to how computing used to work back then, the X Window System had a client-server architecture. What this means is that one process, the X server (Xorg, or XFree86 before it), owned the display and the input devices such as keyboards and mice, while applications, the clients, would connect to that server over a network-capable protocol.

Back in the day, it used to make sense. The concept of personal computing was not as widespread as it is today, and, in most organizations, all actual computing happened on remote servers, while users used thin-client machines that would remotely connect to the server. It was critical that X windows could be streamed over a network protocol.
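To make the networked design a bit more concrete, here is a minimal Python sketch of how an X11 client conventionally works out where its server lives from the `DISPLAY` variable. The helper name `resolve_display` and the `X11Target` type are made up for this illustration, and the parsing only covers the common `host:display.screen` form; real client libraries handle more cases.

```python
import re
from typing import NamedTuple


class X11Target(NamedTuple):
    transport: str  # "unix" for a local socket, "tcp" for a remote server
    address: str    # socket path, or "host:port"
    screen: int


def resolve_display(display: str) -> X11Target:
    """Translate a DISPLAY string such as ':0' or 'buildbox:1.0' (simplified)."""
    match = re.fullmatch(
        r"(?P<host>[^:]*):(?P<num>\d+)(?:\.(?P<screen>\d+))?", display
    )
    if match is None:
        raise ValueError(f"not a valid DISPLAY value: {display!r}")
    number = int(match["num"])
    screen = int(match["screen"] or 0)
    host = match["host"]
    if host in ("", "unix"):
        # Local server: by convention a Unix domain socket under /tmp/.X11-unix
        return X11Target("unix", f"/tmp/.X11-unix/X{number}", screen)
    # Remote server: by convention TCP port 6000 + display number
    return X11Target("tcp", f"{host}:{6000 + number}", screen)


print(resolve_display(":0"))
print(resolve_display("buildbox:1.0"))  # "buildbox" is a hypothetical host name
```

The point is that the very same client code path serves both a local desktop and a machine across the network, which is exactly the thin-client use case X11 was designed around.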

Limitations of the X window protocol

Over time, however, while computing changed profoundly, the X window system did not. As the days went by, Xorg became more and more unfit for modern computing needs, as the limitations of its decades-old design became increasingly hard to ignore.

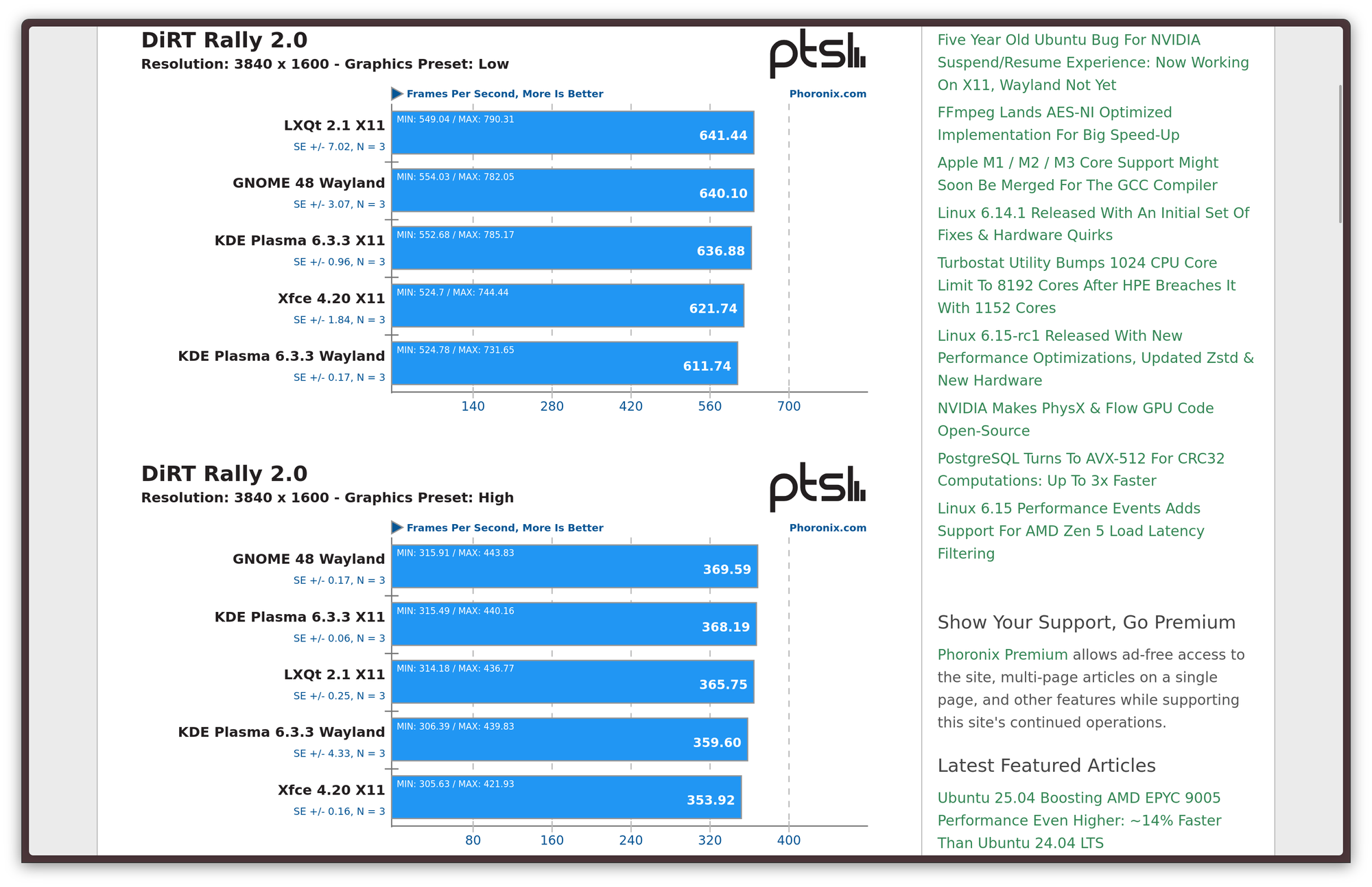

Firstly, its chatty networked architecture was introducing too much performance overhead just to support a use case that is mostly superseded at this point. This also caused several users to perceive latency — especially in cases where a compositor was running on top of the session — which created its own fair share of issues with tasks that require low latency, such as gaming.

Secondly, the way Xorg works internally would cause screen tearing: artifacts where information from multiple frames was pushed to the display at the same time, creating a “disconnect” between various parts of the screen. For a very long time, the main way to mitigate this artifact was running an X session with a graphical compositor on top. This would reduce tearing and allow nicer eye-candy effects, with the side effect of adding a fair bit of latency.

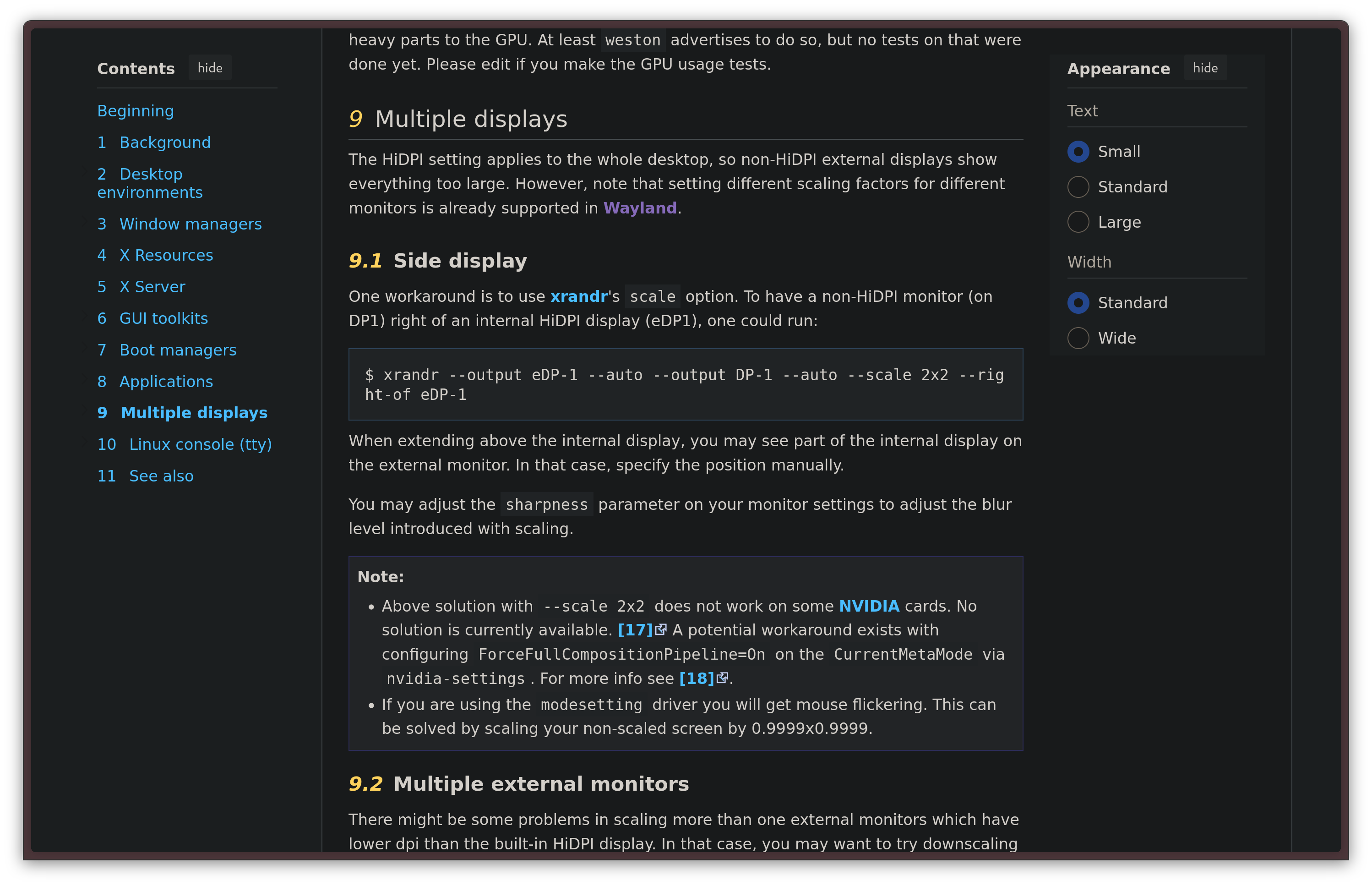

Among its various issues, one notable fault of Xorg is its HiDPI design, or lack thereof. As the years went by, HiDPI displays (monitors with a very high resolution in a rather small physical size) got more and more common. Any use case related to HiDPI on Xorg is known to be painful, but where it fails completely is in mixed-DPI setups, that is, whenever multiple monitors need different scaling levels. Xorg treats all connected displays as a single surface, making proper per-monitor scaling impractical. The same is also true for refresh rate differences: if you have a 60 Hz and a 144 Hz monitor connected in your setup, dragons lie ahead.
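A quick back-of-the-envelope calculation shows why one global scale cannot fit a mixed setup. The sketch below is only an illustration: the two monitors are hypothetical examples, and the 96 PPI baseline is just the traditional "100% scale" convention, not something mandated by X11 or Wayland.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from the resolution and the physical diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


def ideal_scale(ppi: float, baseline_ppi: float = 96.0) -> float:
    """Scale factor that would keep UI elements at roughly the same physical size."""
    return ppi / baseline_ppi


# Hypothetical two-monitor setup: an external monitor plus a HiDPI laptop panel.
monitors = {
    "27-inch 2560x1440 desktop monitor": (2560, 1440, 27.0),
    "14-inch 2880x1800 laptop panel": (2880, 1800, 14.0),
}

for name, (width, height, diagonal) in monitors.items():
    ppi = pixels_per_inch(width, height, diagonal)
    print(f"{name}: {ppi:.0f} PPI, ideal scale ~{ideal_scale(ppi):.2f}x")
```

The two panels end up wanting very different scale factors, so whichever single value is chosen for the whole surface, one of the screens will look either tiny or oversized.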

Another area where Xorg was lackluster is security. The X server has absolutely no concept of security: clients have visibility on anything that happens in the server, not only the information they should care about. A window could completely stall the entire X server, record the screen or take screenshots, take exclusive control of the keyboard or silently act as a keylogger.

Finally, Xorg is extremely hard to maintain, which has made adding features to it, or even just performing basic maintenance tasks, unsustainable in the long run. As the years went by, the Linux desktop kept falling behind Windows and macOS for anything related to HiDPI, touch screen support, smooth touchpad gestures, and anything touched by the limitations of Xorg. The writing was on the wall: in order to have any hope of ever bringing the video presentation stack of the Linux desktop on par with better-funded commercial solutions, it was high time for a good old rewrite.

The new era: Wayland

This is why, back in 2008, work on Wayland started. Wayland is a presentation protocol that seeks to replace X11 with a completely different design, one meant to never repeat the mistakes that were made in X11.

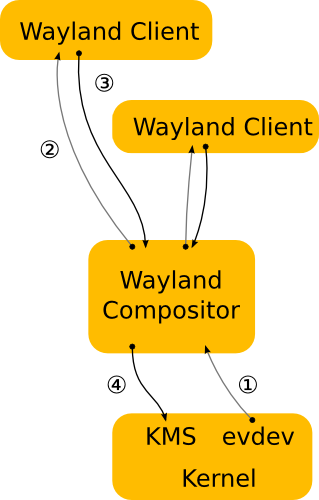

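From the start, the architecture is fundamentally different. Unlike the X Window System, Wayland is not built around a standalone display server that everything else plugs into: it is, rather, a protocol that specifies how a Wayland compositor and the windows that will be drawn inside it, the Wayland clients, should communicate. Clients still talk to the compositor over a local socket, but there is no separate X-style server sitting in between.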
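As a rough illustration of how a client and a compositor find each other, the following Python sketch mirrors the conventional lookup of the compositor's socket from the environment. The function name `wayland_socket_path` is made up here, and the sketch deliberately ignores other mechanisms (such as an already-open socket passed in by the parent process); treat it as a simplification rather than a reimplementation of any client library.

```python
import os
from pathlib import Path
from typing import Mapping


def wayland_socket_path(env: Mapping[str, str] = os.environ) -> Path:
    """Best-effort guess of the compositor socket a client would connect to."""
    # Conventional default name when WAYLAND_DISPLAY is not set.
    display = env.get("WAYLAND_DISPLAY", "wayland-0")
    if os.path.isabs(display):
        # An absolute path is used as-is.
        return Path(display)
    runtime_dir = env.get("XDG_RUNTIME_DIR")
    if not runtime_dir:
        raise RuntimeError("XDG_RUNTIME_DIR is not set; cannot locate the socket")
    # Otherwise the socket lives inside the per-user runtime directory.
    return Path(runtime_dir) / display


# Example with an explicit, hypothetical environment:
print(wayland_socket_path({"XDG_RUNTIME_DIR": "/run/user/1000"}))
```

Note that the result is always a local Unix socket path: there is no host name or TCP port anywhere in the picture.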

The main new concept in Wayland is the fact that there is no longer a separation between the Display Server, the Window Manager and the Compositor: all of those components are now fused in one single monolith, the Wayland Compositor. The compositor will take on the task of communicating directly with graphical kernel interfaces such as the Kernel Mode Setting itself, without a middle man.

Things also change from the perspective of the windows. Wayland clients are much more restricted, and all they know is what the Wayland compositor tells them. The main philosophy behind this design decision is the wish to never again force a client to make assumptions about the environment it is running in to work properly, something that did happen all the time with the X Window System, causing a lot of mishaps. A permission system has also been implemented, like the one you have on your phone, making it so that a client has to ask before doing things like recording your screen.

This approach has both advantages and drawbacks. The gains are pretty obvious. First off, there is a clear performance advantage to be gained. It's the oldest concept in low-level software design: modular architectures have neat logical separation, but monoliths tend to be faster. Much faster: this is the same reason why the Linux kernel is monolithic, and why the Windows NT kernel also became much more monolithic as time went on. In the specific case of X11, though, the main bottleneck was the fact that communications between components were running through a very chatty network protocol, even when everything was running locally. By eliminating this communication and cutting out the middle man, there is potential to improve performance and latency greatly. We also get advantages in security: since a window is now running in a more confined environment, privacy is better safeguarded.

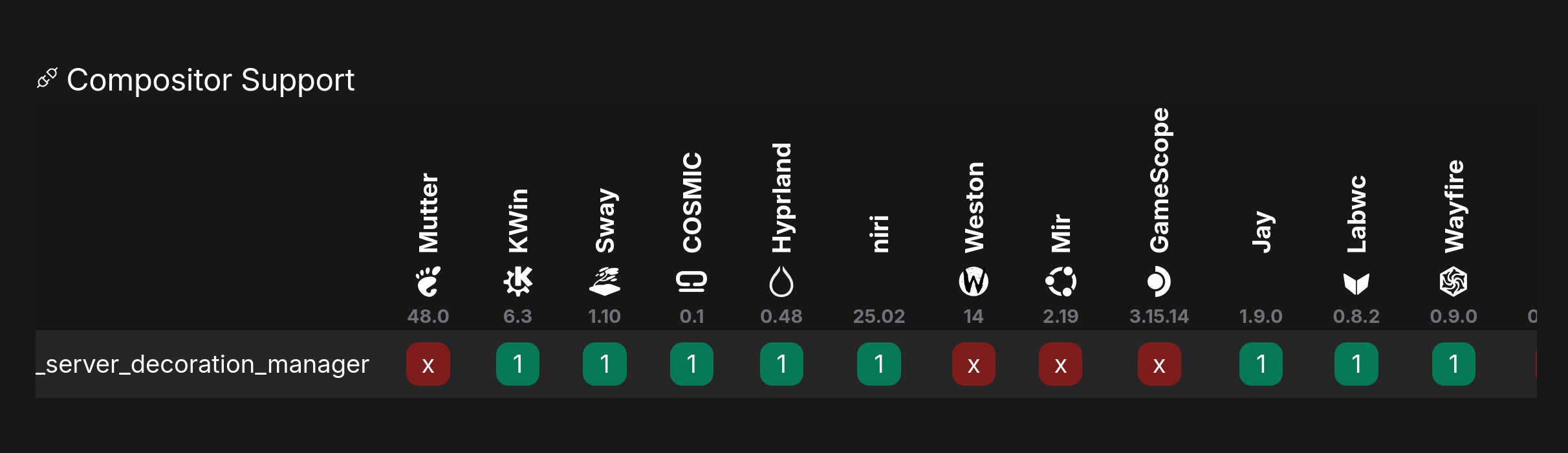

To balance out these advancements, we also get some drawbacks. For starters, since each compositor is a monolith, different desktop environments have to work on their own compositors from scratch, replicating a lot of effort several times. This also creates a huge issue with fragmentation: while the Wayland spec includes many protocols, not all of them are implemented by all Wayland compositors under the sun, or even by the most relevant ones. Sometimes, this is due to the fact that a protocol that is challenging to implement does not yet have a finished implementation ready for one compositor or another. Sometimes, it is due to divergences in philosophy and opinion between projects, which leave them the freedom to not implement a protocol at all, so long as it's an optional one. Weston itself, the reference Wayland compositor, only supports a pretty minimal set of protocols, creating a situation where the consensus on whether one feature or another should be implemented by everyone is unclear.
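In practice, this fragmentation means clients have to probe what the compositor advertises and degrade gracefully. The Python sketch below simulates that negotiation with plain sets: it does not talk to a real compositor, the `negotiate` helper is invented for this example, and the interface names are, to the best of my knowledge, the ones used by the corresponding protocols.

```python
# Interfaces a typical desktop client cannot do without (illustrative selection).
REQUIRED = {"wl_compositor", "wl_shm", "xdg_wm_base"}

# Optional interfaces mapped to the feature they unlock.
OPTIONAL = {
    "wp_fractional_scale_manager_v1": "fractional scaling",
    "wp_tearing_control_manager_v1": "tearing (VSync off) hints",
    "wp_color_manager_v1": "color management / HDR",
}


def negotiate(advertised: set[str]) -> dict[str, bool]:
    """Return which optional features are usable given the advertised globals."""
    missing = REQUIRED - advertised
    if missing:
        raise RuntimeError(f"compositor lacks required globals: {sorted(missing)}")
    return {feature: iface in advertised for iface, feature in OPTIONAL.items()}


# Two hypothetical compositors, not modeled on any real project:
minimal_compositor = set(REQUIRED)
richer_compositor = REQUIRED | {"wp_fractional_scale_manager_v1", "wp_color_manager_v1"}

for name, globals_ in [("minimal", minimal_compositor), ("richer", richer_compositor)]:
    print(name, negotiate(globals_))
```

Every optional protocol adds another branch like this to every toolkit, which is part of why client-side adoption tends to lag behind the protocol being merged.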

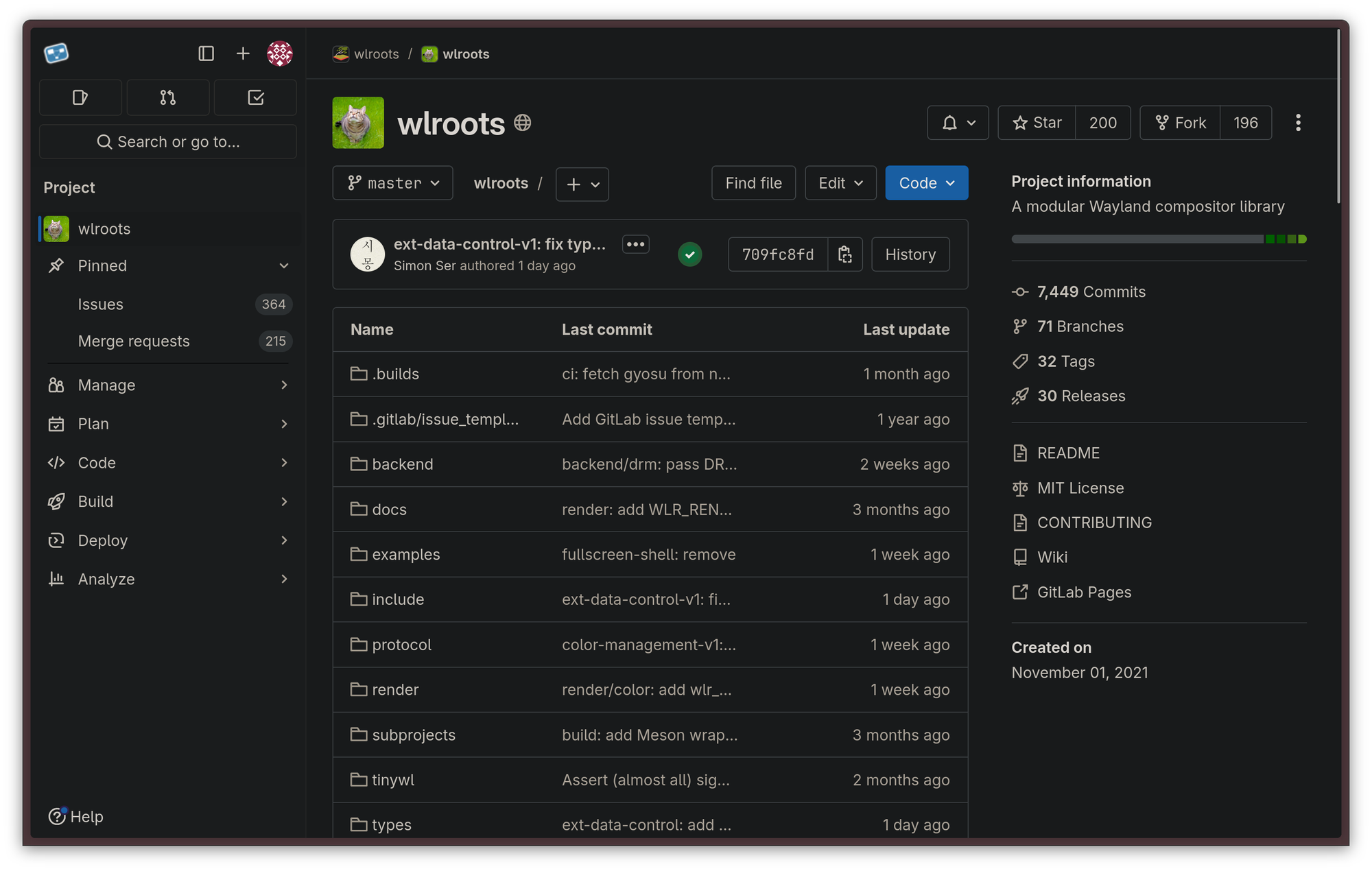

It also used to be harder for a smaller indie developer to get going with their own Wayland compositor. However, this has been greatly alleviated by projects like Drew DeVault's wlroots, a library that allows anybody to build their own Wayland compositor on top of it. Notably, wlroots has been the base for other well-known projects, like Valve's gamescope compositor, which is used for the Steam Deck gaming mode among other things, Drew's own sway compositor, and a dwm port called dwl. Similar libraries exist outside of wlroots, too: niri, a scrolling tiling compositor that a lot of people love, is built on the Rust library Smithay.

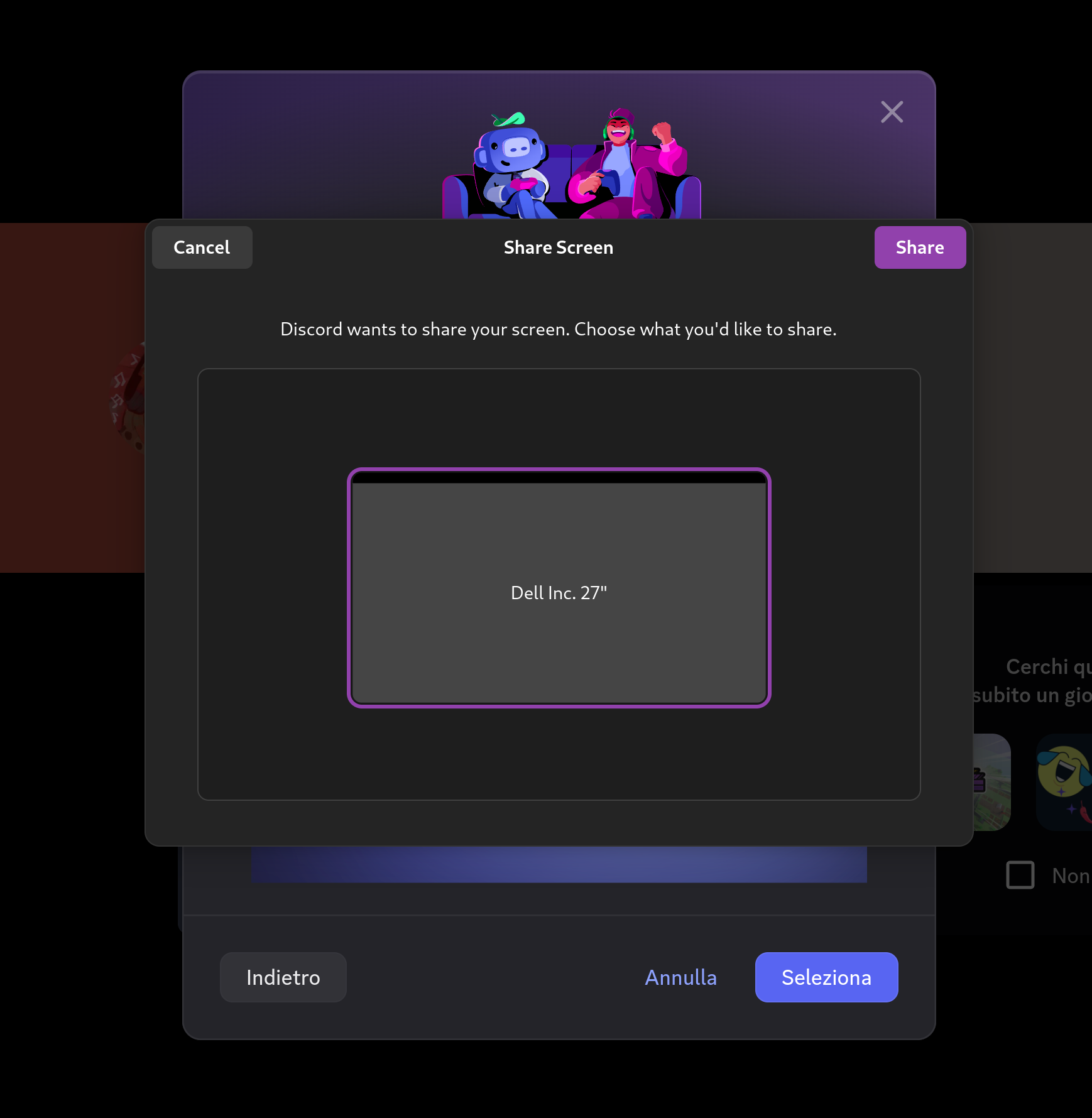

For a long time, Wayland had significant problems that stopped a lot of people from using it: from slowdown in video games due to its default vertical sync, to broken Wacom tablet input, to problematic screen sharing, to the security model sometimes getting in the way of some quality-of-life feature that used to exist on X11. However, during the past few years, development around Wayland has skyrocketed, steadily killing more and more of these limitations, while improving over the original X11 implementation in the process. Development is still going great, and there are a lot of exciting things coming to the Wayland ecosystem planned for the short to medium term.

Wayland's innovations

The swift boost in adoption of Wayland compositors as of late does not come in a vacuum. It mostly owes its success to the fact that new Wayland protocols have become part of the standard, closing gaps with X11 that prevented users from switching over. Let us now take a look at some of the main innovations Wayland is going through now. We will see some protocols which are now part of the standard, and that are either still gaining support in clients or still under active development. For this, I would like to thank Phoronix, which has put out a nice roundup of everything that has been going on in Wayland for the past few months; some of the advancements discussed below were found there.

Better fractional scaling with fractional-scale-v1

One of the main problems in Wayland sessions was that, since Wayland primarily deals with integer numbers, there was no way to tell a window to render at a fractional scaling level. The way Wayland compositors would work around this was to render the window at a larger, integer-scaled resolution and then downscale it back to the target size. However, this hack was very computationally expensive, while creating visual artifacts and making everything slightly soft and blurry.

This was solved by the fractional-scale-v1 protocol. Now, if a client supports it, the compositor can tell the client what the actual, fractional scale factor is, and allow the client to handle the scaling internally by itself, eliminating both the computational cost and the visual artifacts.
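To put numbers on the difference, here is a small Python sketch comparing the two approaches for a window at 150% scale. It assumes, as I understand the protocol, that the compositor communicates the preferred scale as a numerator over a fixed denominator of 120; the function names and the 1280x720 window are purely illustrative.

```python
import math

SCALE_DENOMINATOR = 120  # assumed fixed denominator for the preferred scale


def integer_buffer_size(logical_w: int, logical_h: int, scale: float) -> tuple[int, int]:
    """Old workaround: render at the next integer scale, let the compositor downscale."""
    int_scale = math.ceil(scale)
    return logical_w * int_scale, logical_h * int_scale


def fractional_buffer_size(logical_w: int, logical_h: int, numerator: int) -> tuple[int, int]:
    """Render directly at the fractional scale, rounding half away from zero."""
    half = SCALE_DENOMINATOR // 2
    return (
        (logical_w * numerator + half) // SCALE_DENOMINATOR,
        (logical_h * numerator + half) // SCALE_DENOMINATOR,
    )


logical = (1280, 720)  # hypothetical window size in logical pixels
old_w, old_h = integer_buffer_size(*logical, scale=1.5)
new_w, new_h = fractional_buffer_size(*logical, numerator=180)  # 180 / 120 = 1.5

print(f"integer + downscale: {old_w}x{old_h} = {old_w * old_h} pixels")
print(f"fractional:          {new_w}x{new_h} = {new_w * new_h} pixels")
print(f"overdraw factor:     {old_w * old_h / (new_w * new_h):.2f}x")
# integer + downscale: 2560x1440 = 3686400 pixels
# fractional:          1920x1080 = 2073600 pixels
# overdraw factor:     1.78x
```

In this example the old path renders almost 1.8 times as many pixels, only to throw the extra detail away in a filtering pass that also softens the image.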

Low-latency competitive gaming thanks to tearing_control_v1

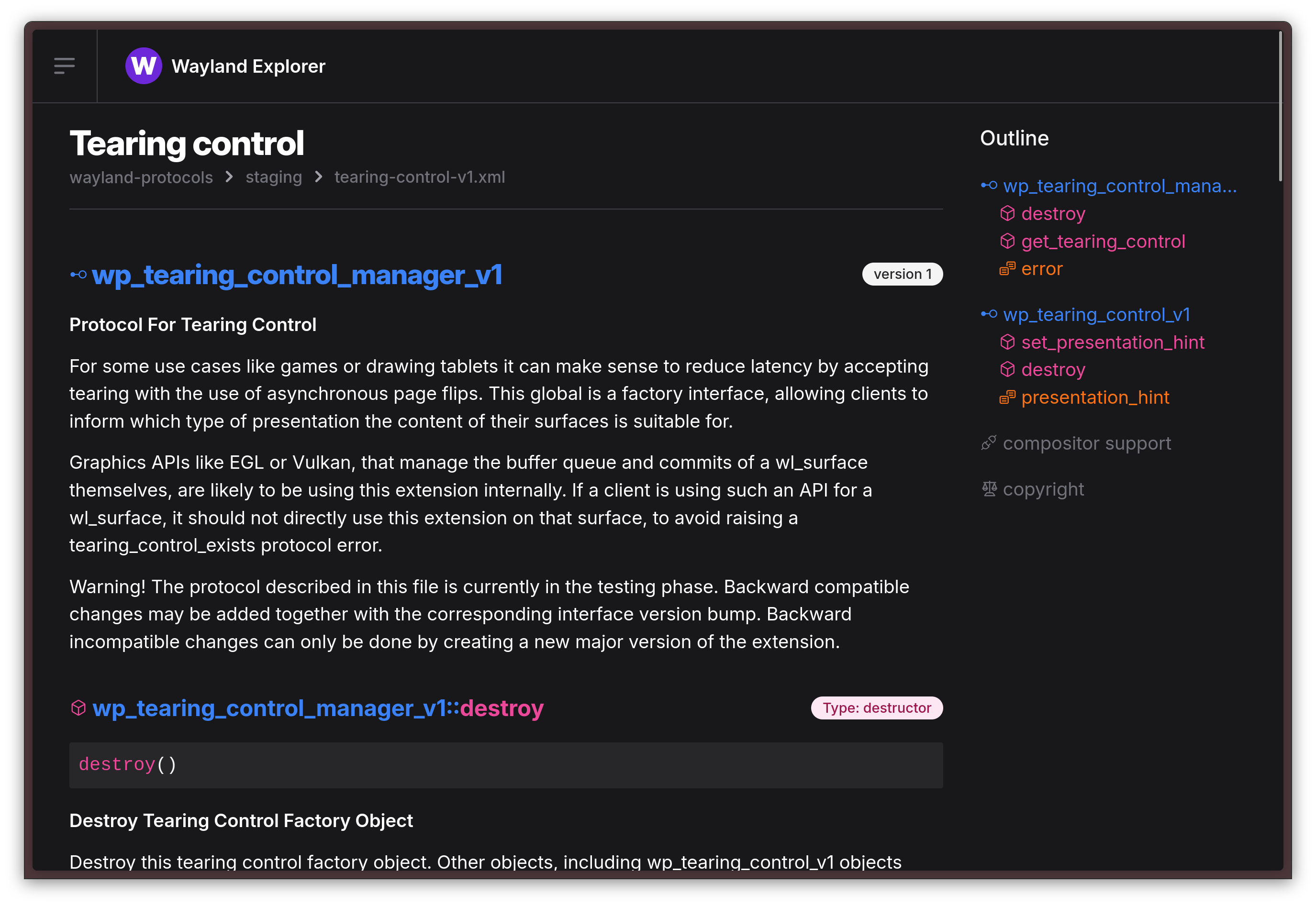

Remember how we said that Wayland has eliminated screen tearing? One of the ways it has done that is by enforcing a policy where every frame is perfect. This means that the entire compositor is forced to wait for vertical synchronization (VSync). Hardcore gamers already know that VSync usually means bad news: the cost of eliminating tearing artifacts is high and, very often, input latency is introduced. Unfortunately, enforcing vertical sync from the compositor side can negatively affect very fast-paced games where every frame matters.
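The latency cost is easy to estimate with some simple arithmetic. The Python sketch below uses a deliberately simplified model in which the display refreshes at exact multiples of the refresh interval and a finished frame simply waits for the next one; real presentation pipelines have more stages, so take the numbers as a lower-bound intuition only.

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between two consecutive display refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


def vsync_wait_ms(frame_ready_ms: float, refresh_hz: float) -> float:
    """How long a finished frame sits idle until the next refresh (simplified)."""
    interval = refresh_interval_ms(refresh_hz)
    elapsed_in_cycle = frame_ready_ms % interval
    return 0.0 if elapsed_in_cycle == 0 else interval - elapsed_in_cycle


for hz in (60, 144, 240):
    interval = refresh_interval_ms(hz)
    # A frame that finishes 1 ms after a refresh has to wait for almost a full cycle.
    wait = vsync_wait_ms(1.0, hz)
    print(f"{hz:>3} Hz: interval {interval:5.2f} ms, frame ready at 1 ms waits {wait:5.2f} ms")
```

With tearing allowed, that frame could be scanned out right away instead of waiting, at the price of a visible seam somewhere on the screen.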

There is ongoing work to fix this situation thanks to the tearing_control_v1 Wayland protocol, currently merged in staging state. This protocol would allow a client that requires it to disable VSync for itself.

However, not all compositors currently implement this, and it seems like further kernel and compositor patches are required to make this feature work properly, even on compositors that do support it.

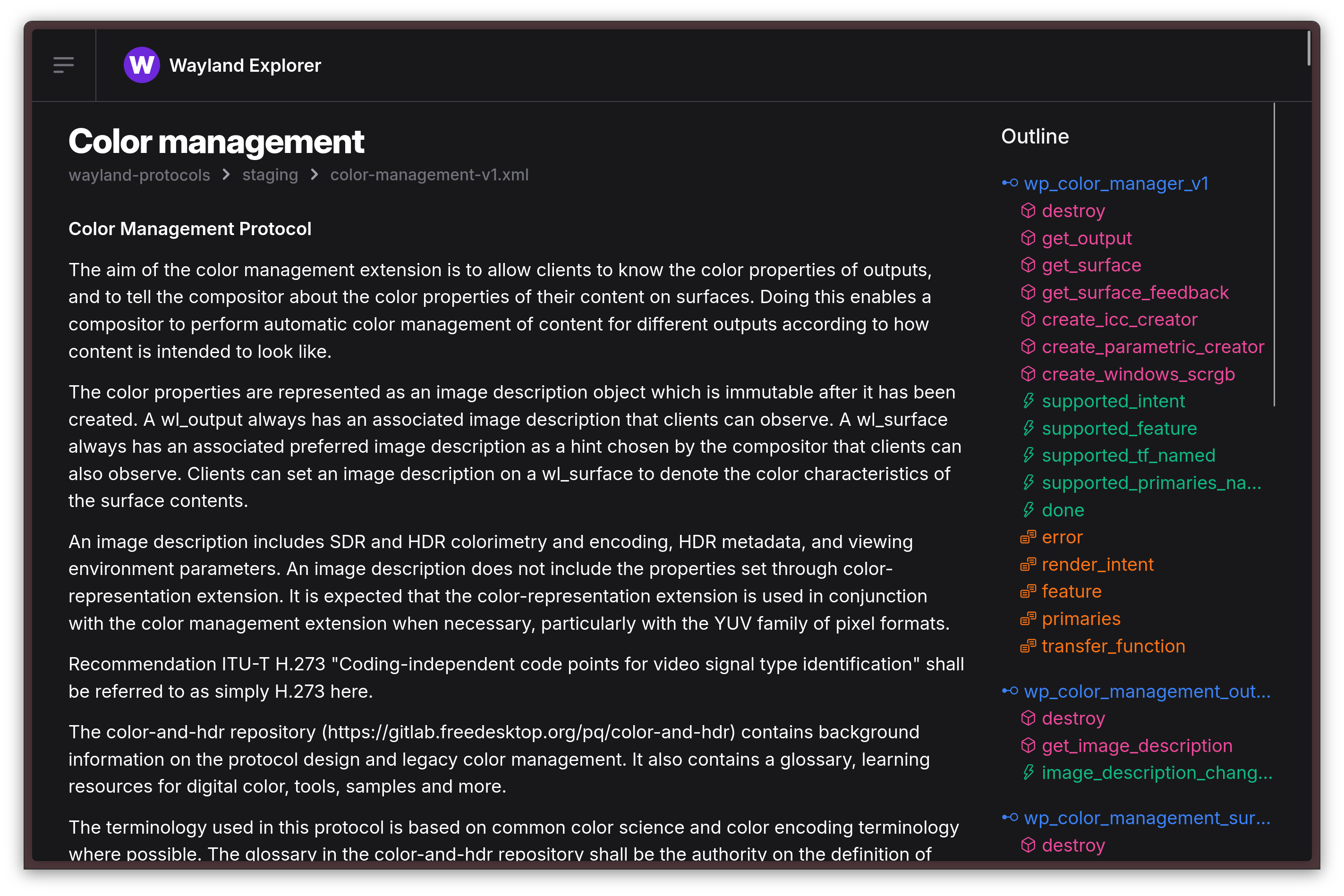

Professional color work and support for an HDR monitor near you with Color Management Protocol

One pretty bad, long-standing limitation of not just Wayland, but the Linux desktop itself, has always been the lack of viable options to do proper color management and to drive HDR (High Dynamic Range) displays as anything other than SDR, with their color gamut clamped to the more limited sRGB color space.

The recently merged Wayland color management protocol, as part of Wayland Protocols 1.41, is an important step in that direction. Like the Tearing protocol, though, this protocol is still a staging protocol: this means it's being tested, and further development is necessary to be able to actually use it.

Hopefully, this means that color-critical work will soon be more viable on Linux workstations, and that an HDR monitor or TV near you will be able to be used to its full capabilities when hooked up to your Linux box!

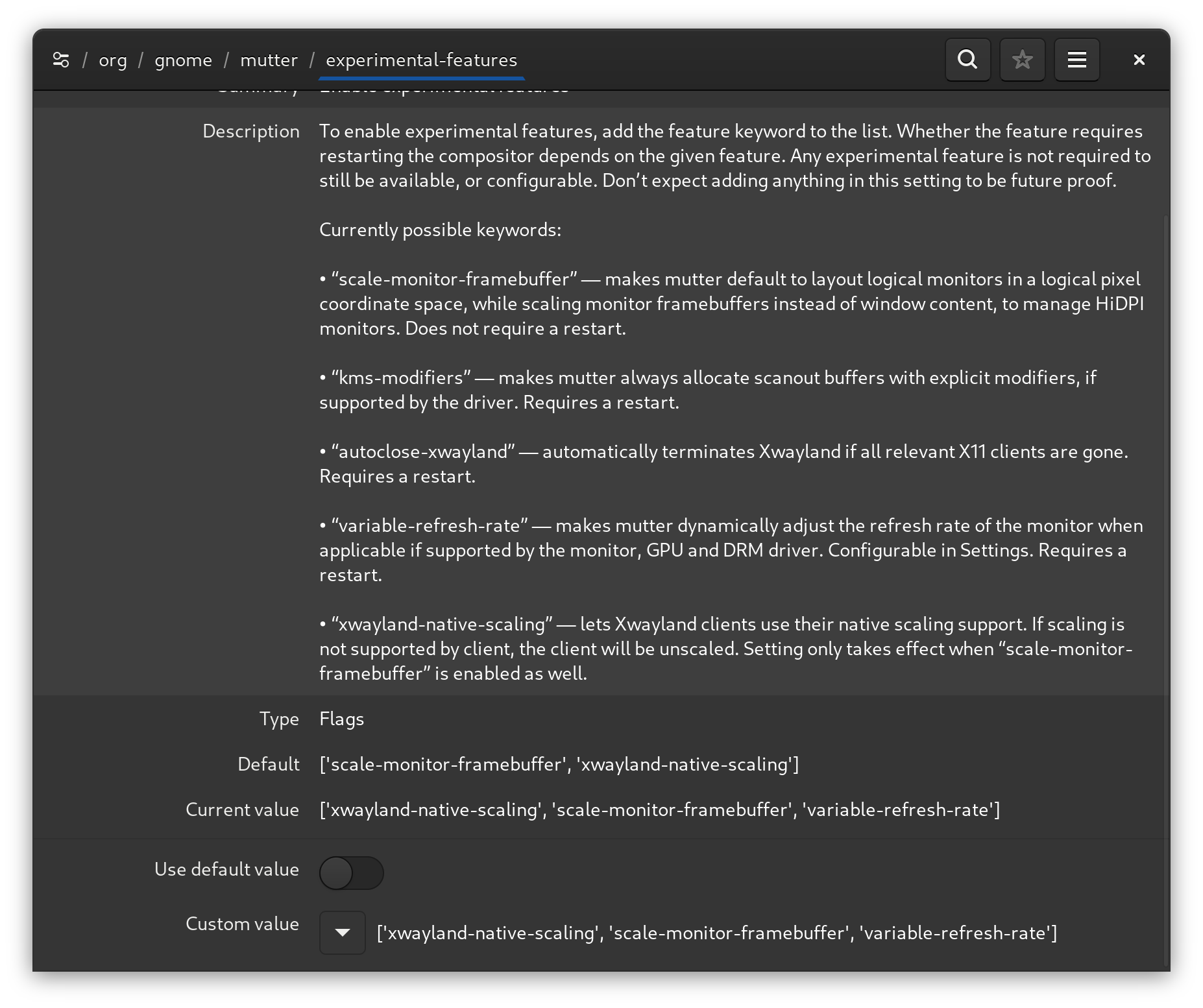

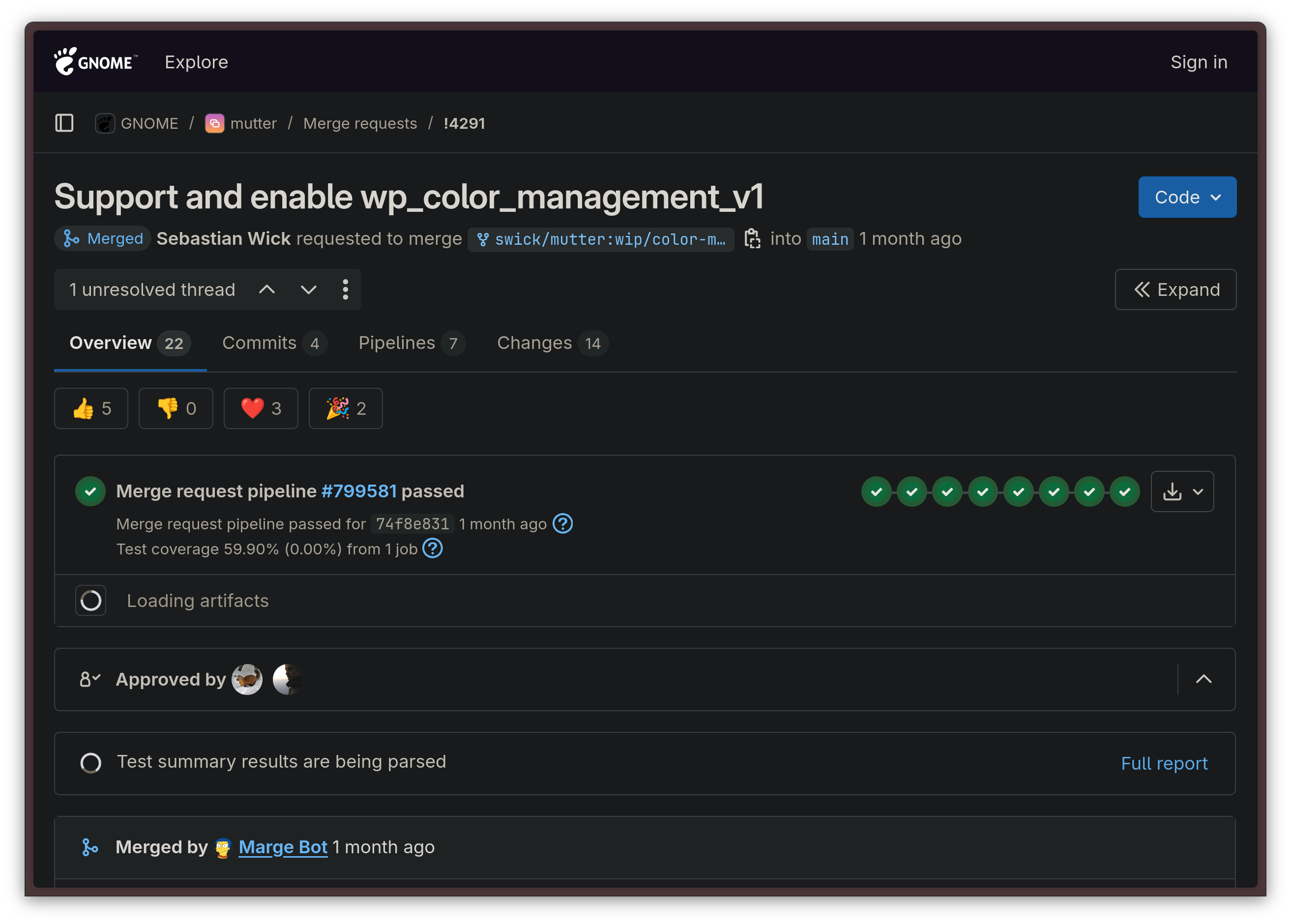

This highly anticipated protocol is already gaining a lot of interest, but it will be a while until all compositors have implemented it. HDR is a uniquely painful and challenging task, so do expect adoption to be slow. However, support for it has already been merged in GNOME's Mutter compositor and in Hyprland, which makes me hopeful that further compositors will follow suit soon.

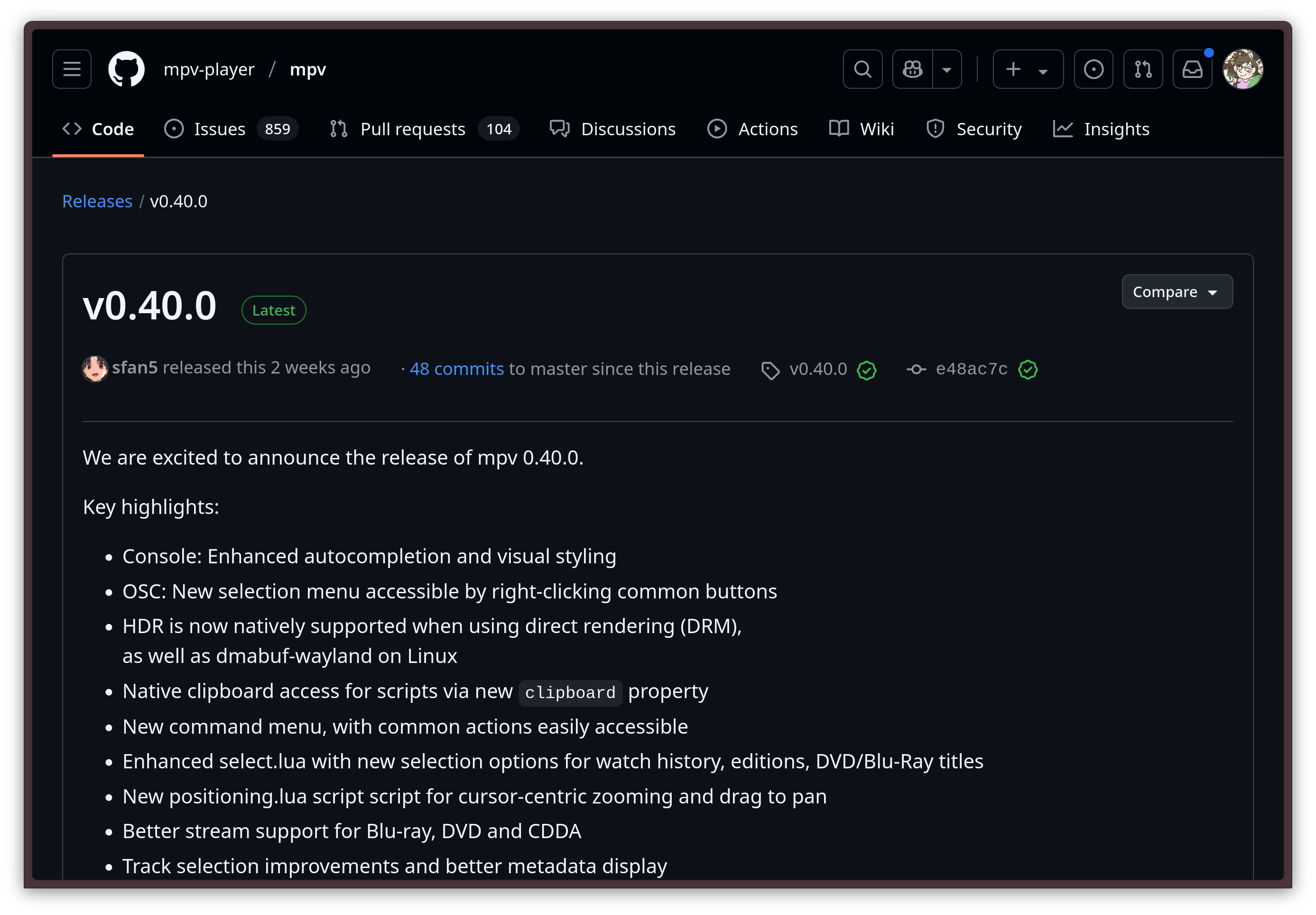

Adoption of this protocol has already started on the client side, too. Industry-standard video player mpv 0.40.0 was released juuust a couple of weeks ago with support for HDR on Linux through this protocol!

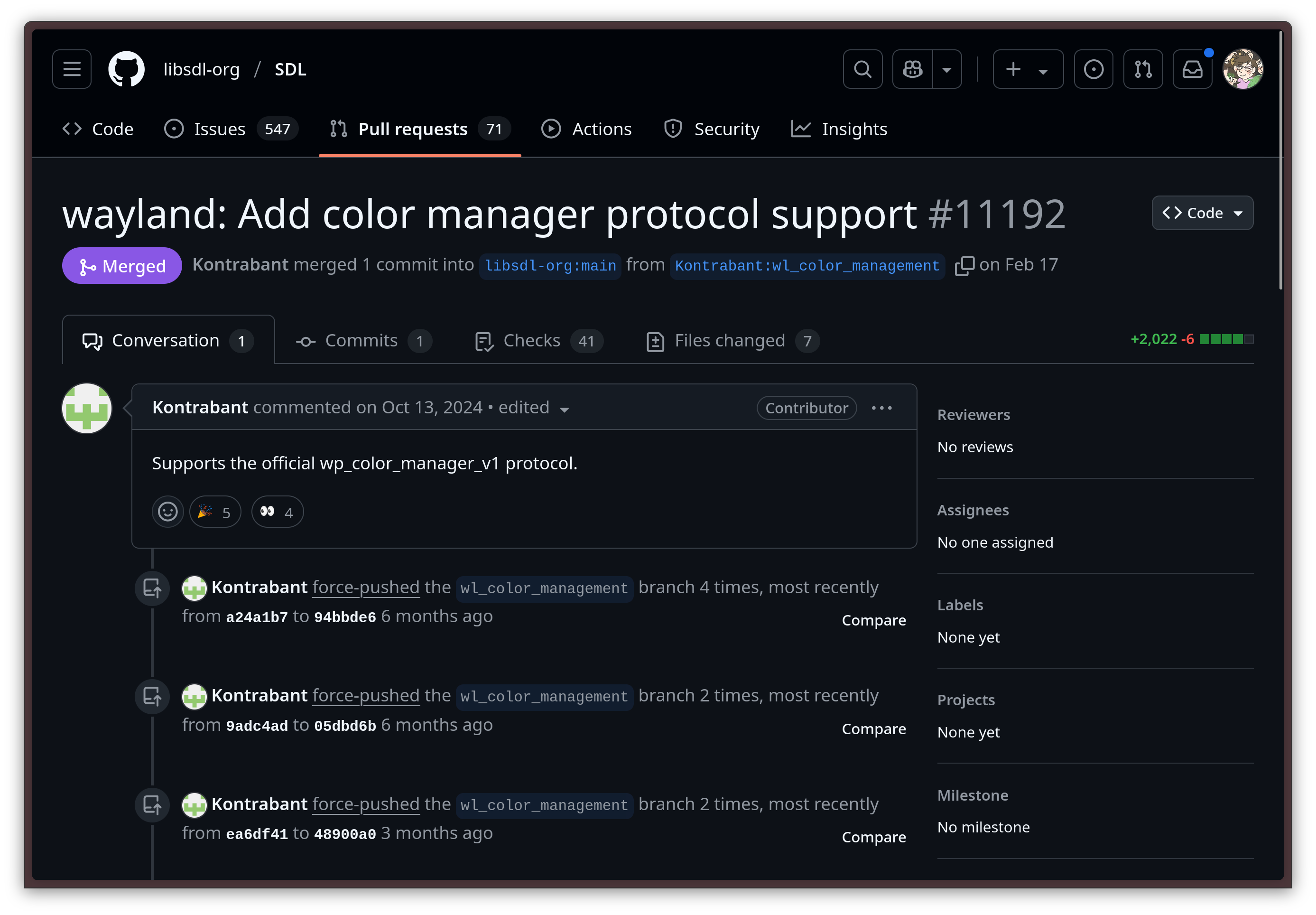

mpv is on board as well!

Multimedia library SDL has also committed support for it, which is excellent news, since a plethora of games and graphical applications are based on SDL. This means that the number of applications that could potentially have HDR support in the very near future might be much higher than we think.

Control AMD Vari-Bright policies right from the DE with power saving vs. color accuracy support

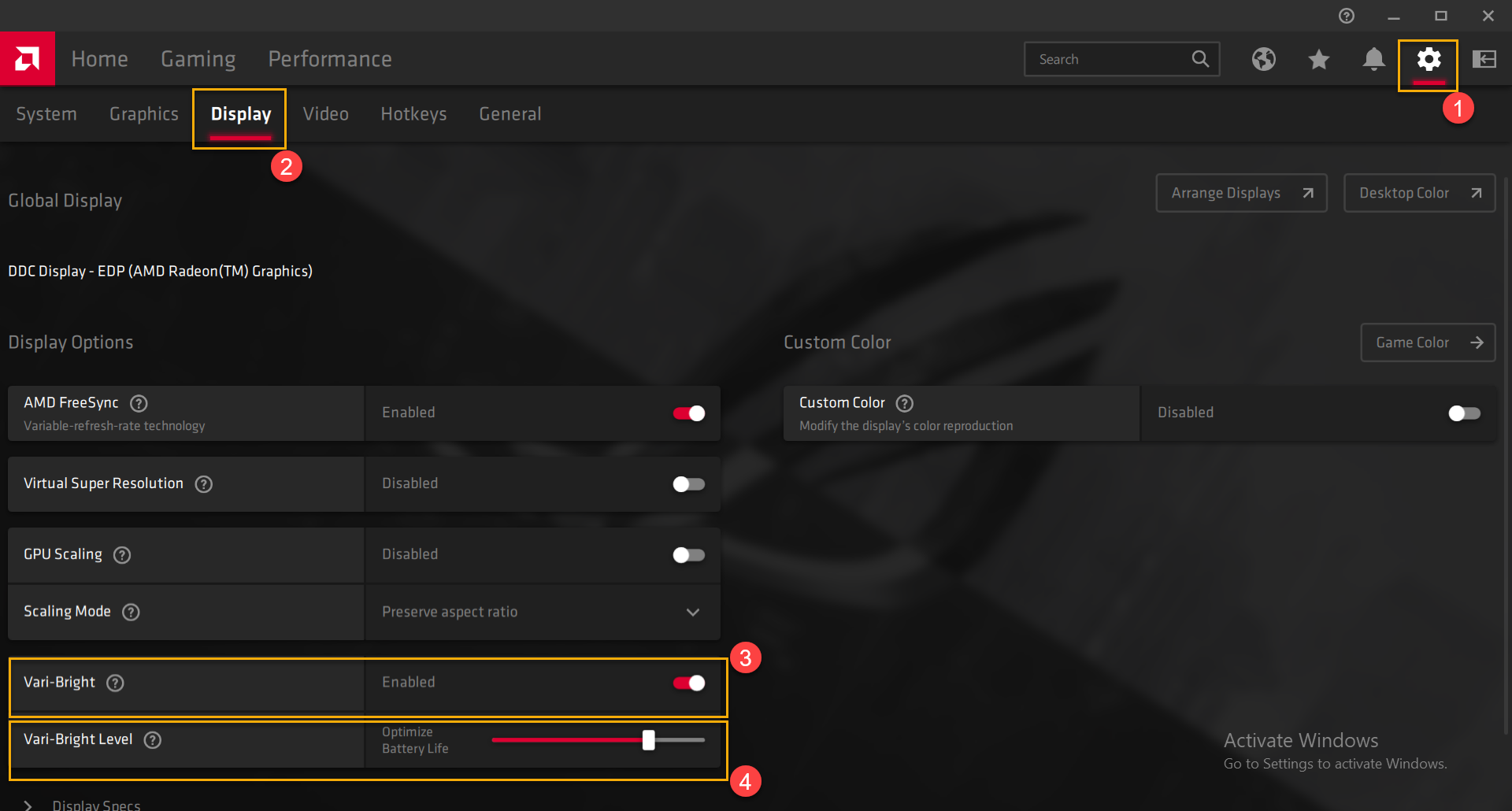

Linux 6.12 has paved the way for graphics drivers development to introduce optional power-saving modes that rely on altering the brightness and contrast of the integrated eDP panel in laptops automatically. So far, the one implementation that is already live for end users is AMD's Adaptive Backlight Management (ABM) functionality, which is the Linux equivalent of the better-known "AMD Vari-Bright" feature from their Windows drivers.

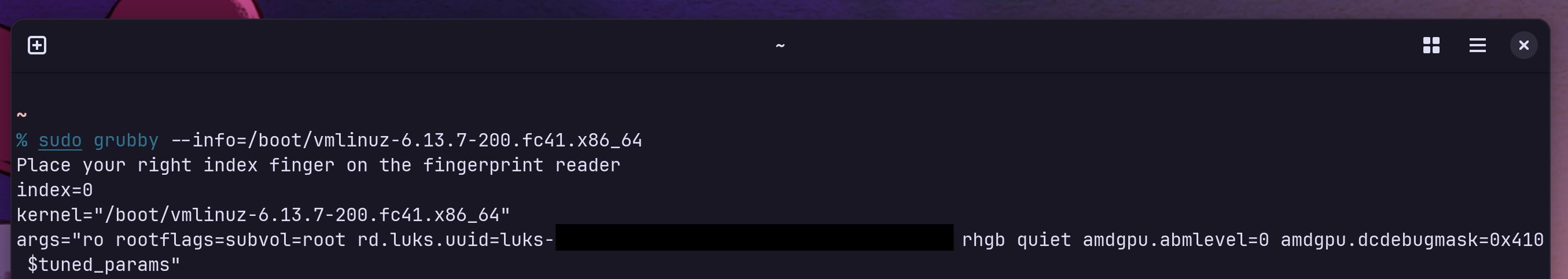

Currently, this feature is on by default. Not everybody liked it though - I certainly didn't: when it is on, your laptop's screen becomes very washed out when running on battery and not using the Performance power profile, in an attempt to save battery by dynamically tuning the contrast ratio in a way that makes everything, including darker scenes, more visible on a lower backlight brightness level. There is currently no easy way to disable it: one must either do it through TuneD / PPD, or by passing the kernel cmdline argument amdgpu.abmlevel=0, which switches ABM off and keeps colors accurate. Per the amdgpu module documentation, levels 1 to 4 allow progressively stronger backlight reduction, while the default of -1 leaves the level on auto, where userspace tools such as PPD can change it.
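If you go the kernel cmdline route, it is worth double-checking that the argument actually made it into the running kernel. The Python sketch below is a minimal helper for that: `kernel_param` and `current_abmlevel` are names invented for this example, and the only thing assumed about the system is that `/proc/cmdline` is readable, as it normally is on Linux.

```python
from typing import Optional


def kernel_param(cmdline: str, name: str) -> Optional[str]:
    """Return the value of `name=value` from a kernel command line, if present."""
    value = None
    for token in cmdline.split():
        key, sep, val = token.partition("=")
        if sep and key == name:
            value = val  # if the parameter is repeated, this sketch keeps the last one
    return value


def current_abmlevel(path: str = "/proc/cmdline") -> Optional[str]:
    """Read the running kernel's command line and look for amdgpu.abmlevel."""
    with open(path, encoding="utf-8") as handle:
        return kernel_param(handle.read(), "amdgpu.abmlevel")


# Example on a made-up command line:
print(kernel_param("quiet splash amdgpu.abmlevel=0", "amdgpu.abmlevel"))
```

A result of `None` from `current_abmlevel()` simply means the argument was not passed at boot, in which case the driver falls back to its built-in default.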

In the Plasma Wayland Protocols 1.16.0 release, KDE Plasma devs have finally put an end to this, allowing the user to choose their preferred policy - color accuracy or power saving - through the GUI.

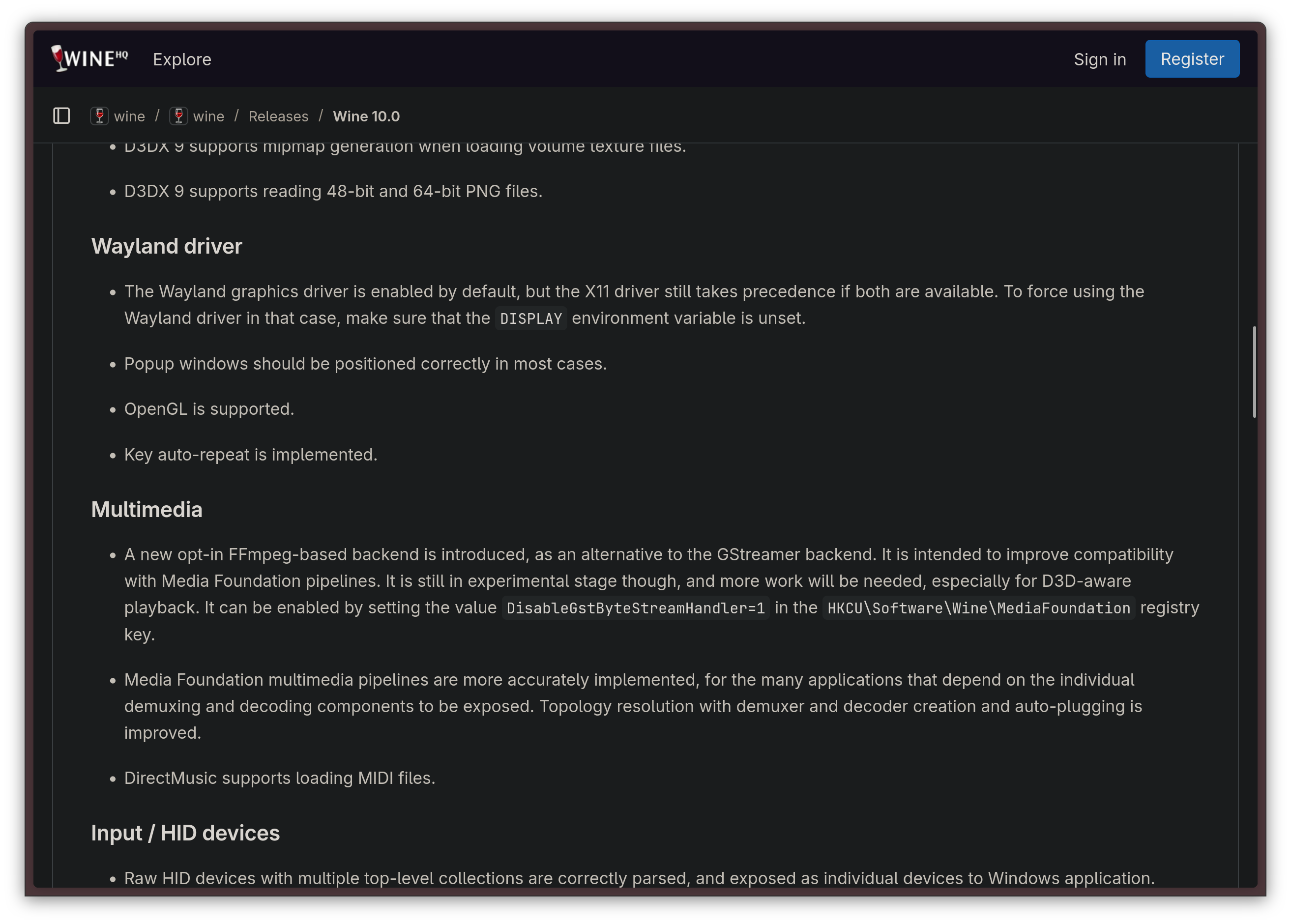

Wine 10 releases with Wayland support

This year, the tenth version of Wine finally launched. For those who do not yet know, Wine is a compatibility layer that allows Win32 applications that were written for Windows systems to run on Linux. Wine contributors did indeed leave something special for this iconic release: a Wayland driver!

While the feature is still experimental, this change is quite pivotal, since Wine had been one of the few major applications that did not support Wayland natively at all.

We can now look forward to more development on this front, and it might just be huge: since most Steam games run through Proton, a Wine-based compatibility layer, and pretty much all Steam games that have a Linux version can be made to run through Proton instead, this change might mean that, one day, we will be able to get gaming to work fully natively on Wayland, without having to use the XWayland compatibility layer for it.

Wine 10 also comes with better HiDPI support, which should make productivity workloads a little less painful on modern laptops, when you absolutely must use some Windows-only software to carry out a task.

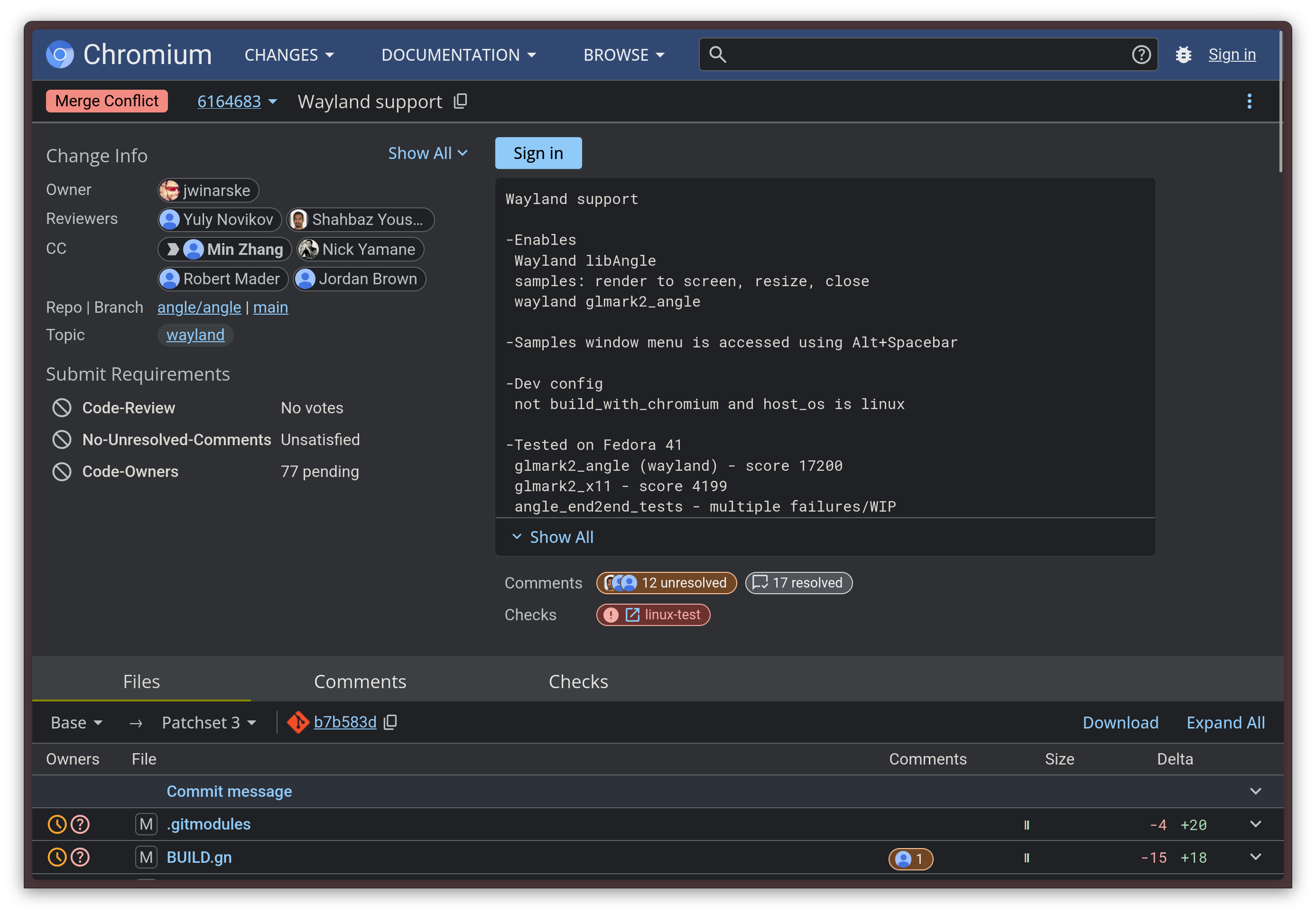

Something's moving for Wayland on CEF!

There is now, at last, initial Wayland support on the Chromium Embedded Framework (CEF). This is a very important advancement, because it is what was holding a lot of important applications, like Spotify and Steam, back from moving to a native Wayland session. Right now, Steam works through the XWayland compatibility layer, mostly because it relies on CEF for all of its embedded web views.

We should be able to cross even more applications off the XWayland list in the near future.

Wayland-exclusive DEs are finally a reality

You know it's serious when new desktop environments do not even support X11 at all, not even as a fallback option. This has been the case for System76's Cosmic desktop since its inception, but it seems like the trend does not end there.

For example, Budgie, a desktop built on GNOME technologies that is famous for being the default desktop in Solus (ah, the good old days...), has just put out its first release where Wayland is the only supported session.

KDE Plasma's Wayland support has also gotten so mature that it is now the default session. On top of that, the contributors of KWin - Plasma's compositor - have decided to split the codebases of kwin_x11 and kwin_wayland, keeping the latter as the main one, and maintaining the former until Plasma 7. This is a powerful signal: it means KDE is serious about Wayland being the default and the preferred session, and they are also slowly preparing to move on. If you still need to use X11, I wouldn't worry too much: Plasma 7 is likely still very far away, and the X11 session will still be fully supported through the entire Plasma 6 lifecycle. However, you should slowly be preparing to move: support for X11 on most major DEs has its days numbered. Just to give a proper idea, even Xfce is preparing a Wayland port.

A bright future

While there is no doubt that the migration to Wayland was a bit of a bloodbath for the early adopters, things are going much smoother now. The Wayland ecosystem also has a healthy timeline ahead, and there is plenty to look forward to in the future.