
This is the fifth week of me being back at recording videos, and I think it's time for me to tell you how I do these articles and videos. I have a few things I'm particularly proud of showing off, by the way.

Let's start with scriptwriting.

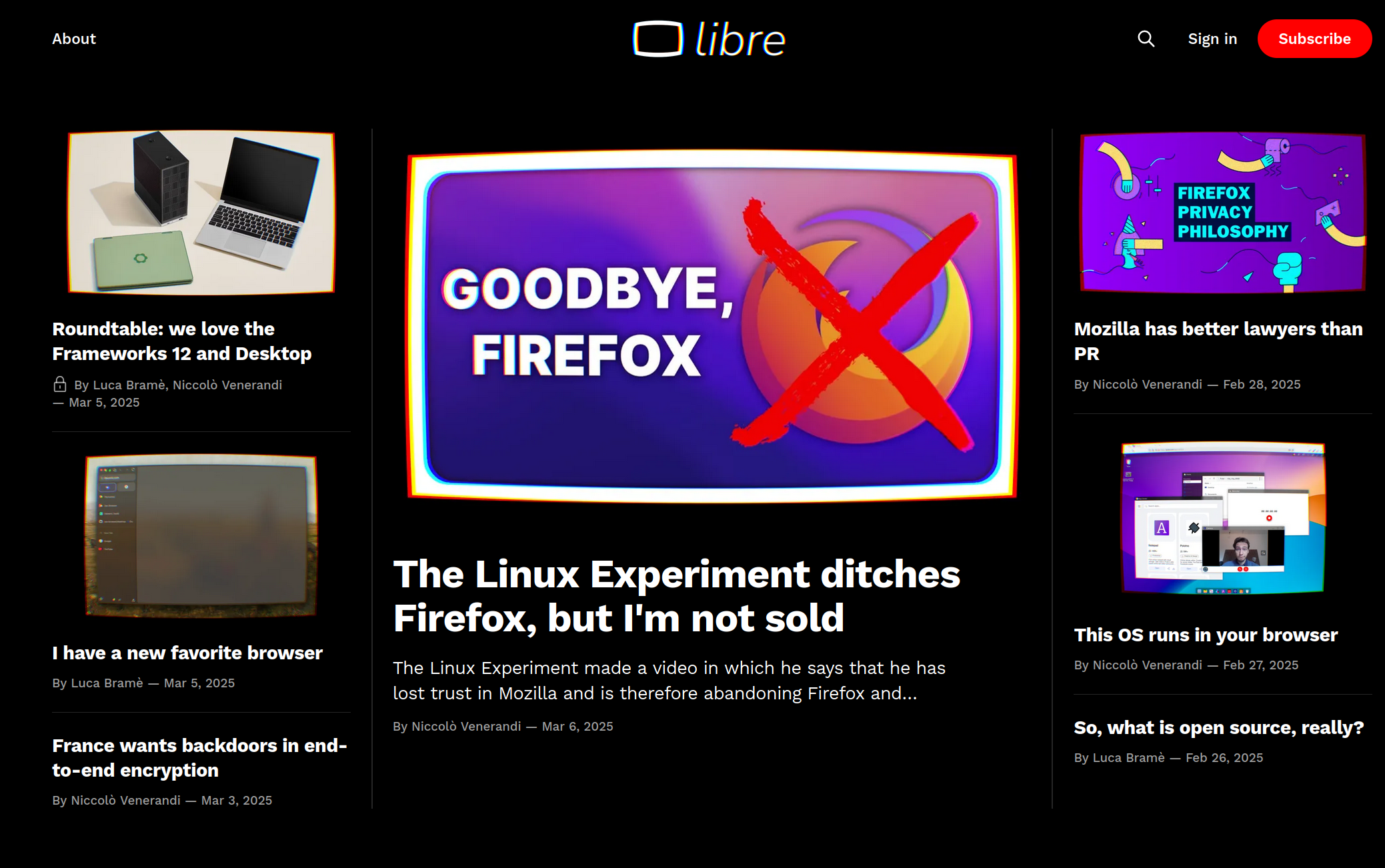

I write all scripts using Ghost, the open-source blogging platform, and all of them are also published as their own standalone articles on the website thelibre [dot] news. Some people prefer reading them instead of watching videos, so this makes sure everyone is happy.

As soon as I'm done writing a script I'll publish it, which means you can read the scripts on the website before the videos are published.

You can also check the author of a particular script on the website; lots of them are written by my (really good) collaborator, Luca. He has a formal education in computer science, whereas I'm two exams short of a bachelor's degree in Maths, so he's the one who knows more about technical stuff.

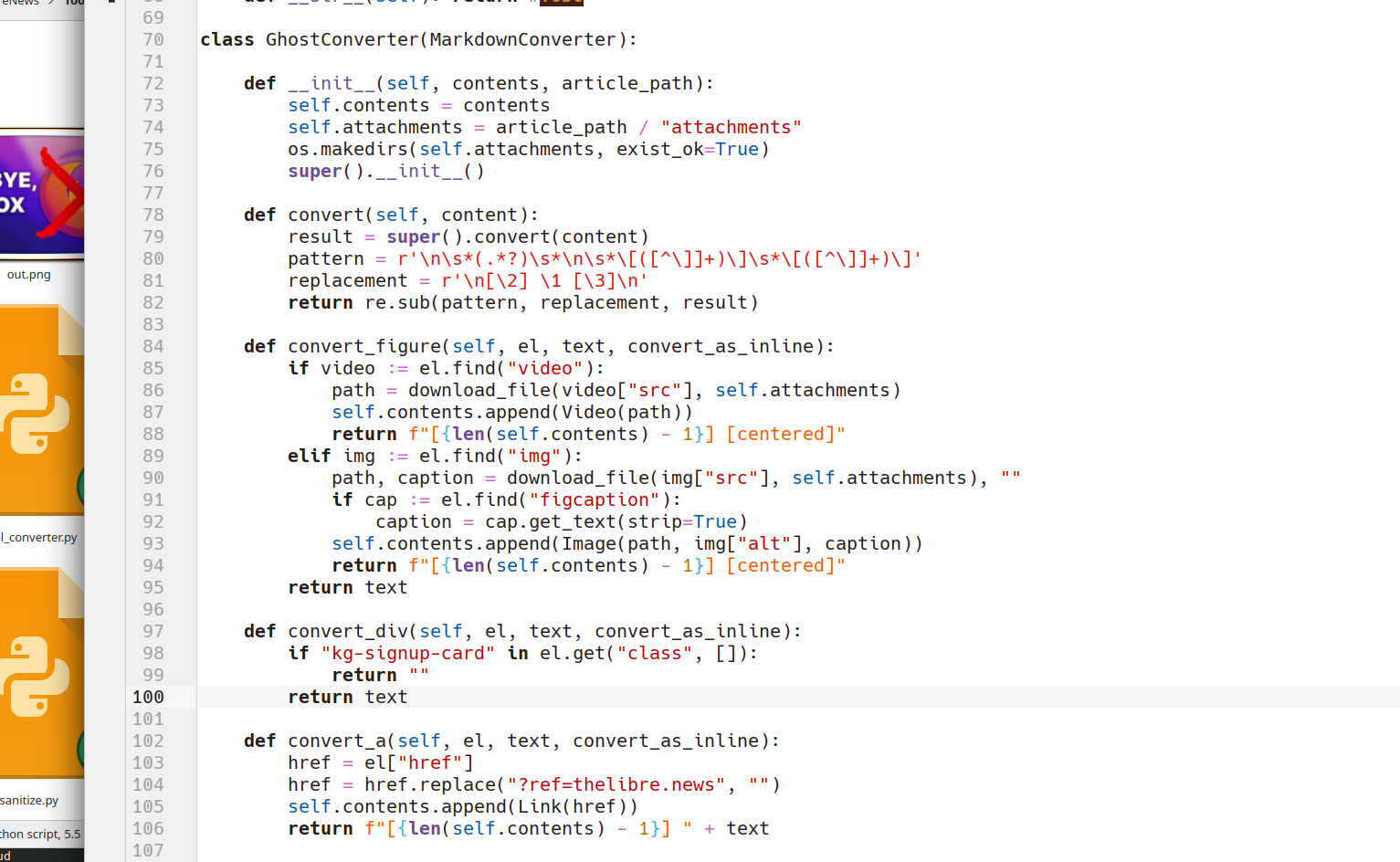

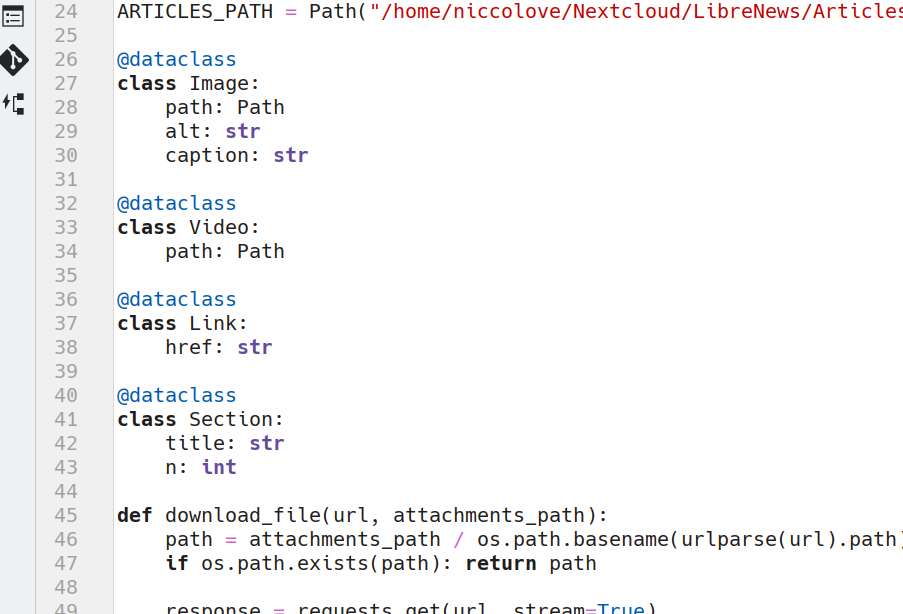

As soon as the article is done, I need to convert it to Markdown. I wrote a Python script that fetches the article's webpage and uses a custom MarkdownConverter to, well, convert the article to a markdown-ish thing.

I say markdown-ish because the Python script actually builds various Python classes, each representing a kind of content that can appear in the video script, and inserts blocks into the text that specify which class goes where. Of course, it also downloads everything locally.
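To make the "markdown-ish" idea concrete, here is a minimal sketch of how content classes and in-text blocks could fit together. It is not the actual script: the class names `ImageBlock` and `VideoBlock`, the `[block:N]` marker format, and the list of video extensions are all assumptions made for illustration, and the fetching and downloading steps are left out.

```python
import re
from dataclasses import dataclass


@dataclass
class ImageBlock:
    """A still image to show on screen (hypothetical class name)."""
    path: str


@dataclass
class VideoBlock:
    """A video clip to play on screen (hypothetical class name)."""
    path: str


# Markdown image syntax: ![alt text](target)
IMAGE_PATTERN = re.compile(r"!\[[^\]]*\]\(([^)]+)\)")
# Assumed list of extensions that should be treated as videos.
VIDEO_EXTENSIONS = (".mp4", ".webm", ".mkv")


def extract_blocks(markdown: str):
    """Replace each media reference with a [block:N] marker.

    Returns the rewritten text and the list of block objects,
    where marker N refers to blocks[N].
    """
    blocks = []

    def replace(match):
        target = match.group(1)
        if target.lower().endswith(VIDEO_EXTENSIONS):
            blocks.append(VideoBlock(target))
        else:
            blocks.append(ImageBlock(target))
        return f"[block:{len(blocks) - 1}]"

    return IMAGE_PATTERN.sub(replace, markdown), blocks
```

Calling `extract_blocks("Intro. ![shot](a.png)")` returns the text with the image replaced by `[block:0]`, plus a list containing one `ImageBlock`. Keeping the objects in a list and only their index in the text means the text stays readable while each block can carry as much data as it needs.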

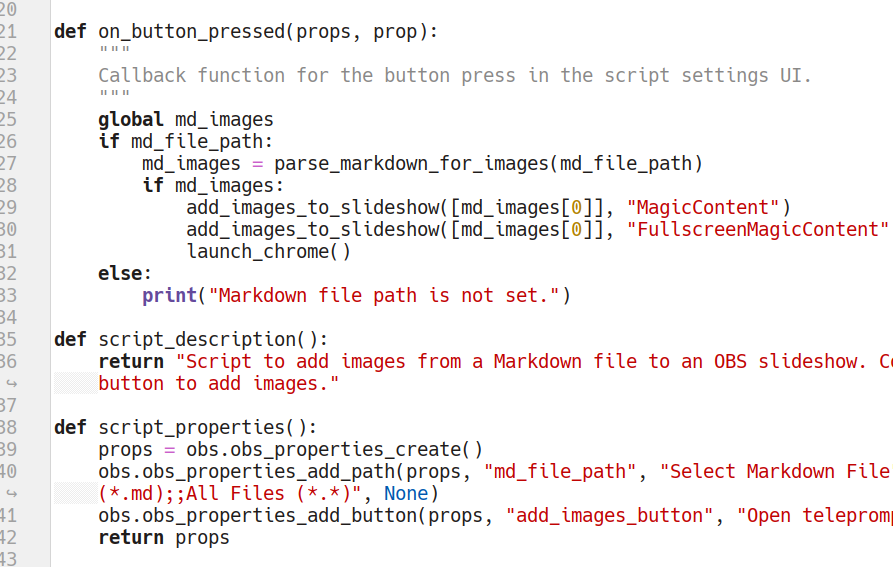

Now, for the recording part, I use OBS (I'll get to the hardware later). It has various scenes for the various looks that are needed during the video: just my face, or the sidebar with the content, or the fullscreen content, and so on.

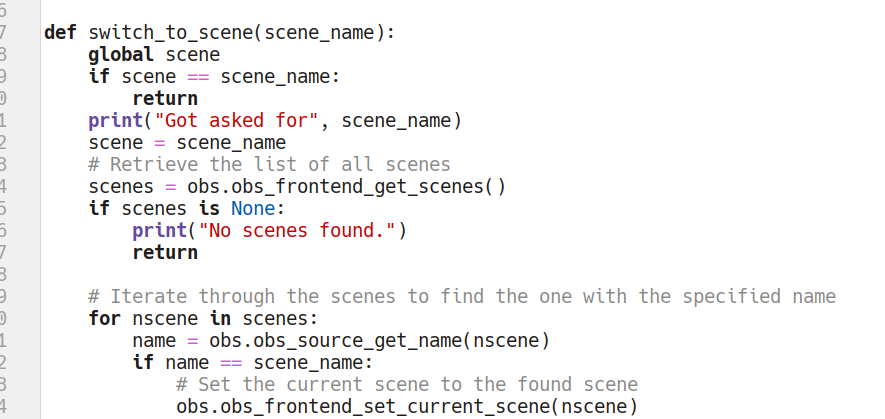

The Python script mentioned above is exposed as an OBS script, meaning that I can run it directly from OBS by giving it a link to the article.

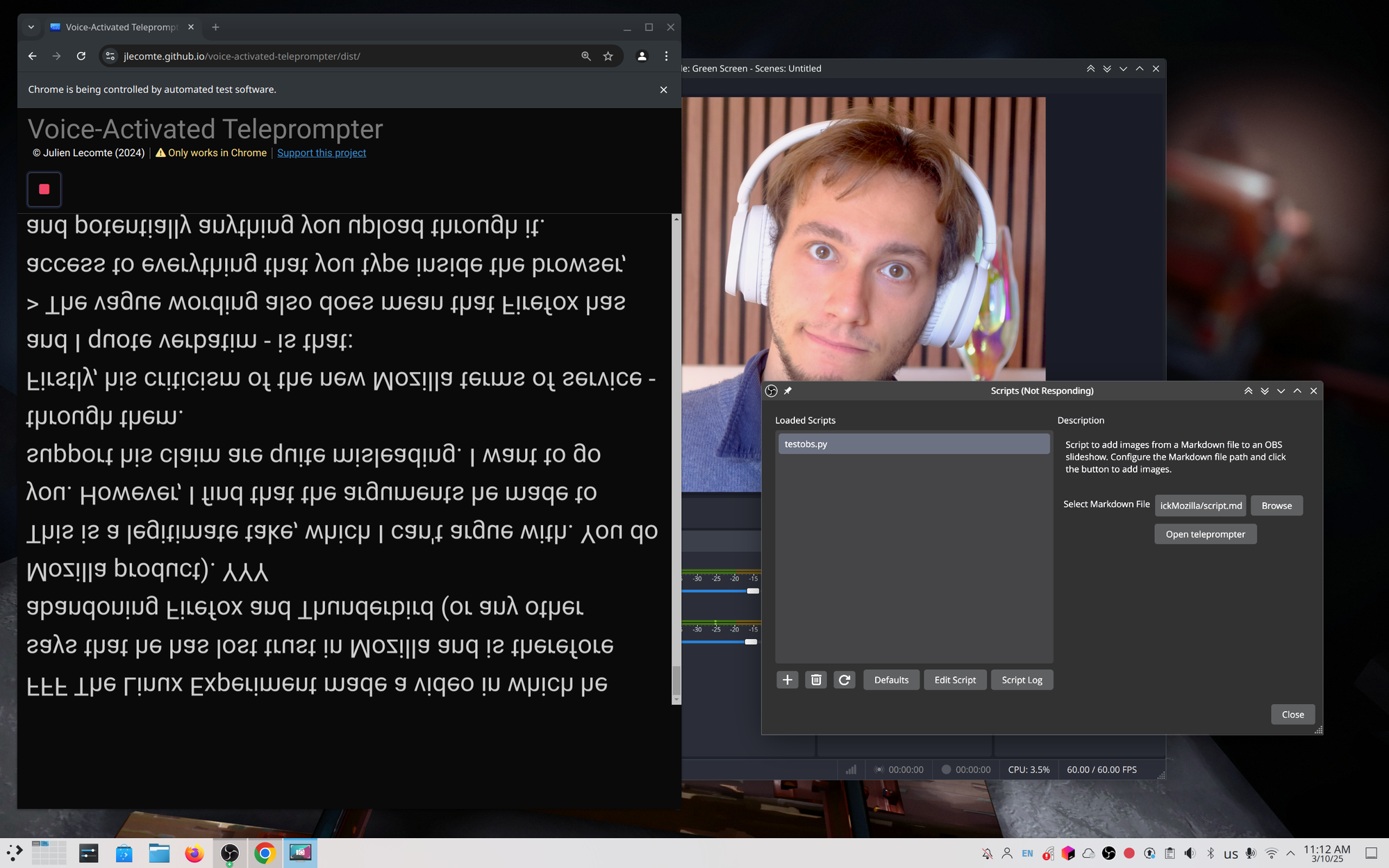

The script will also open a Chromium instance, controlled via Selenium, to the voice-activated teleprompter built by Julien Lecomte. It follows my voice as I speak, it simply runs in the browser, and the script can control it fully.

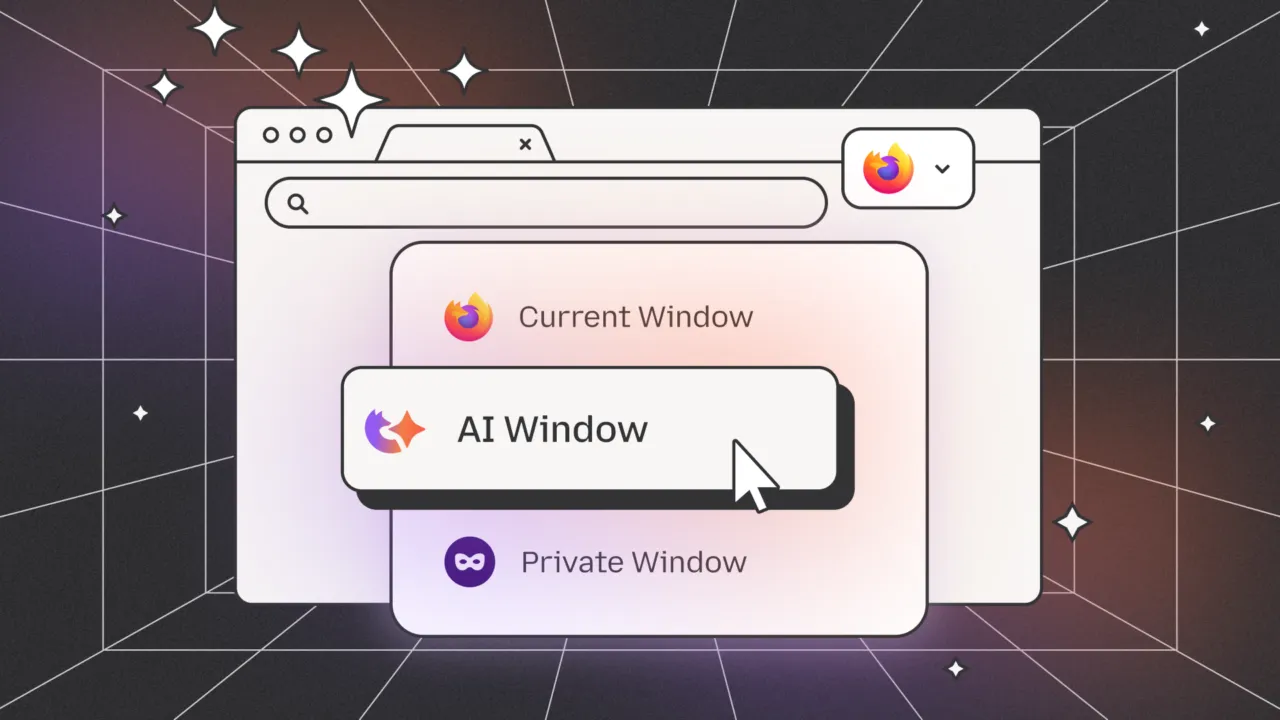

Now, the Python script automatically loads the video script on this teleprompter page, along with the image and video markers in square brackets (which are ignored by the teleprompter app). The cool thing is, the Python script will track the word I'm currently reading, and as soon as I hit an image/video marker, it will switch to the corresponding scene and show the linked image or video.

It will also automatically switch back to me talking when the paragraph ends, or the video ends. This way, the video will already be fully edited as soon as I stop recording, as the entire graphical part is handled directly by OBS and this script.
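The marker-tracking logic described above can be sketched in plain Python. This is only an illustration under assumptions: the `[image:...]` and `[video:...]` marker syntax, the function names, and the `"camera"` action are made up here, markers are assumed to contain no spaces, and the real scene switch in OBS and the end-of-video case are left out.

```python
import re

# Assumed marker syntax: [image:file] or [video:file], without spaces.
MARKER = re.compile(r"\[(image|video):([^\]]+)\]")


def build_timeline(script: str):
    """Return (words, events) for a script with media markers.

    events maps "number of words read so far" to an action:
    ("image" | "video", target) to show media, or
    ("camera", None) to switch back to the talking head.
    """
    words = []
    events = {}
    for paragraph in script.split("\n\n"):
        showing_media = False
        for token in paragraph.split():
            match = MARKER.fullmatch(token)
            if match:
                events[len(words)] = (match.group(1), match.group(2))
                showing_media = True
            else:
                words.append(token)
        if showing_media:
            # Go back to the camera once the paragraph has been read.
            events[len(words)] = ("camera", None)
    return words, events


def actions_between(events, previous_position, current_position):
    """Actions to fire when the teleprompter advances.

    The teleprompter may skip several words at once, so every
    position in between is checked, not just the current one.
    """
    return [
        events[position]
        for position in range(previous_position + 1, current_position + 1)
        if position in events
    ]
```

Each time the teleprompter reports a new position, the caller would pass the previous and current positions to `actions_between` and apply whatever comes back, starting from a previous position of `-1` so that a marker before the very first word still fires.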

Now, this has a few flaws. Firstly, the teleprompter application sometimes just stops. It does this every five minutes or so. I don't have any clue why. This means I have to stop reading, reset the teleprompter (which takes around 30 seconds), and then start again. This isn't very pleasant, but whatever.

I also can't really repeat sentences too much. I could probably try repeating the last sentence I said, and maybe the teleprompter would pick that up and go back, but if it doesn't, I'm sort of lost in the script, and I don't even have a way to know which parts I have repeated to cut out later. This is why I usually keep mispronounced words in the video: I don't yet have a workflow to edit them out.

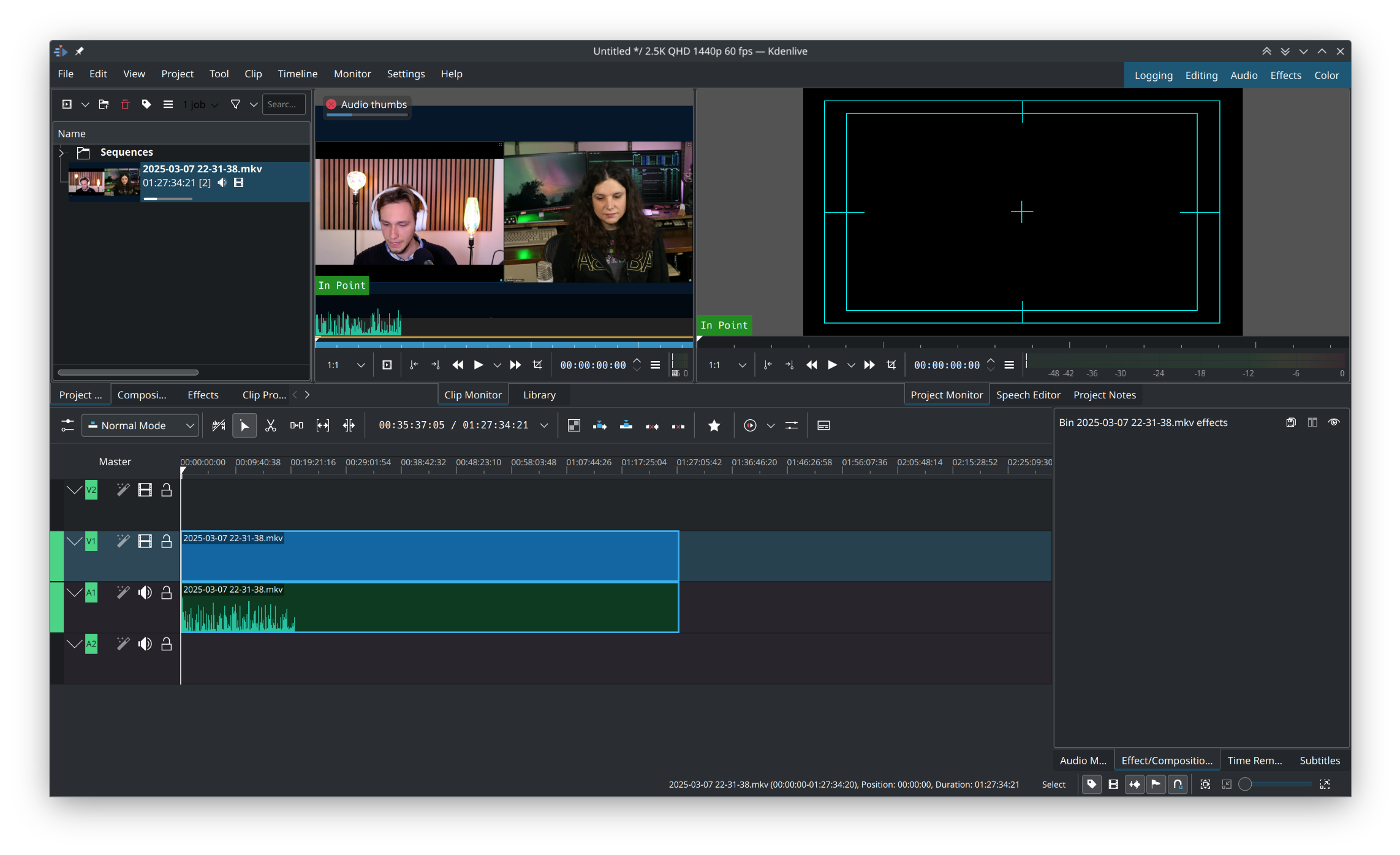

That said, there is a brief editing step. Firstly, I obviously cut out the thirty seconds of silence whenever the teleprompter stops and I have to reset it. I also add some background music. This editing part is done in Kdenlive, and I personally consider it to be the best Linux editing application.

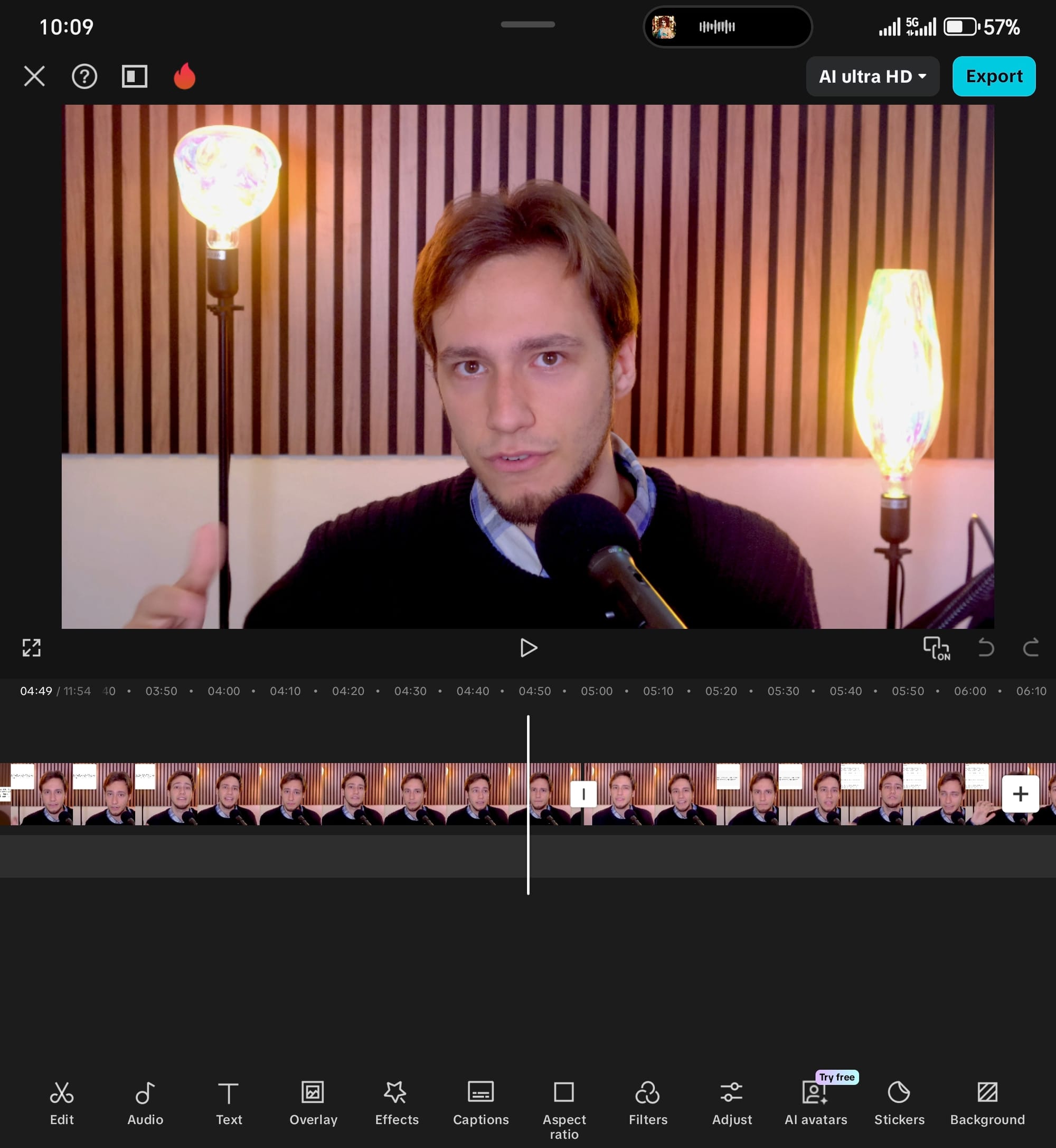

Though, I have to admit that one time - one time - I also edited the recording on the go via CapCut. I had to run, and it's really easy to get the final file from just the raw recording, so I just did it on my phone. And yes, I'm listening to Chappell Roan.

In the future, I'll be able to improve the script to provide better graphics, more animations, whooshing sounds when switching scenes, and more.

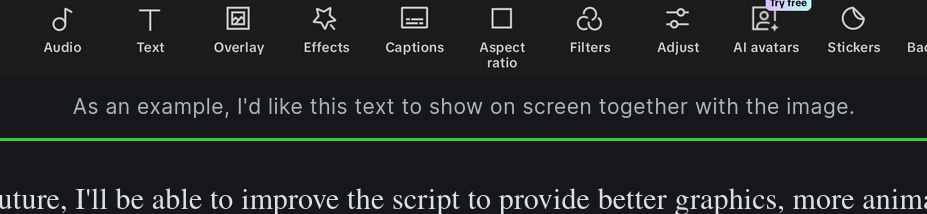

As an example, I'd like to make sure that image captions appear on screen, so that - if I screenshot an article - I can put the source in the caption and it will appear automatically.

Or, I would also like the Python script to recognize when I reach a certain subheading in the original video script and output the chapters to copy and paste in the video description, along with links to sources. There's a lot of room for improvement, which is what I like the most about this.
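As a rough idea of what the chapter output could look like, here is a small sketch that turns a list of timestamps and subheadings into lines ready to paste into a video description. None of this exists yet: the function names and the input format (seconds from the start of the recording, paired with a title) are assumptions for illustration.

```python
def format_timestamp(seconds: float) -> str:
    """Format a number of seconds as M:SS, or H:MM:SS past one hour."""
    total = int(seconds)
    hours, remainder = divmod(total, 3600)
    minutes, secs = divmod(remainder, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


def format_chapters(chapters) -> str:
    """Build the chapter list for a video description.

    chapters is a list of (seconds_from_start, title) pairs,
    already sorted by time.
    """
    return "\n".join(
        f"{format_timestamp(seconds)} {title}" for seconds, title in chapters
    )
```

The timestamps would come from noting the recording time whenever the teleprompter reaches a subheading. For YouTube to pick the list up as chapters, the first entry should start at `0:00`.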

Now, I haven't talked at all about the hardware I use. Firstly, I'm a proud owner of a Fujifilm X-T3, which I use for both YouTube videos and personal photography. It's a great camera, with some limitations - such as no stabilization - but very versatile.

I own various lenses for it, but the one I use for recording is the kit lens, an 18-55mm, usually set at 35mm, which on APS-C is roughly equivalent to 52mm on full frame. I absolutely hate this lens for photography, but it's also my only lens with autofocus, so that's what I use for videos.

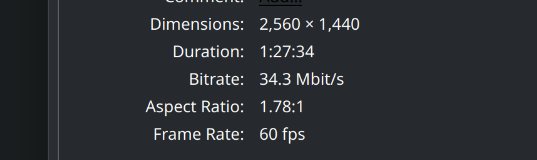

I don't record in-camera, because - again - I don't have the time to edit gigabytes of videos; I have to be quick. I instead capture the 4K output from the camera, using the amazing capture card that Slimbook sent me. I can't recommend this capture card enough - it has never failed me, and it's 4K!

Now, I don't actually record 4K videos, as that would kill my CPU and slow the editing and uploading steps too much right now. Instead, I decided to settle on the more reasonable 1440p - still a lot of pixels, but much easier to handle. And since the camera input is still 4K, I can zoom in on it without losing too much quality.

The microphone that you see here is a Samson Q2U. You can find it for less than a hundred bucks, and it's dynamic, so it does not pick up much background noise. It has both XLR output and a USB cable, and I use the latter; sure, I could go with XLR, but again, I like to keep things reasonably simple.

I picked up the boom arm on Facebook Marketplace. The same goes for the teleprompter mirror. Fun fact: I used to have a monitor attached with duct tape on the bottom of the teleprompter, but I managed to break it during transportation. So, now I just position my laptop itself under the mirror, with the keyboard folded flat. It works; don't judge me.

If you want to hear something even weirder, I don't actually use my laptop for recording or editing; I instead use my KFocus NX computer. However, I don't want to have a monitor and keyboard on my desk either.

The solution? Well, my laptop supports display inputs. Not outputs, inputs. If I turn it off and connect it to my desktop, it acts as a monitor and keyboard. So when I'm done writing, I turn off my laptop, connect the desktop, and turn it on. It's weird, but it works.

If you were wondering, my laptop runs Arch Linux and contains my KDE development session, whereas the desktop runs Ubuntu and is stuck back at KDE Plasma 5, simply because I'm too scared to touch it and break something. I'll probably update it, one day. One day.

Right, lights. I currently use four of them.

The main one is an Elgato Key Light Air (what a terrible name!). It's supposed to be customizable in both brightness and temperature, but the application sucks, so I just keep it at whatever I set it to years ago. It's bright-ish.

On the other side, there's a rather diffused LED light. My brother broke it (he claims it broke by itself, but of course I have to blame him), so it emanates a very blue light. It's also customizable, but the application also sucks. Maybe the problem is me?

Finally, there are the lights behind me. They're plasticky things that I found in a home improvement store near me, called "Briccoman". By the way, thank god that they're made of strong plastic instead of glass, because I managed to knock them down multiple times - and they don't have a single scratch.

I really like them because they also reflect on my hair and act as a fill light. It wasn't intended, but it's nice that it works.

Every illustration or graphic on my channel is done using Inkscape. I love it.

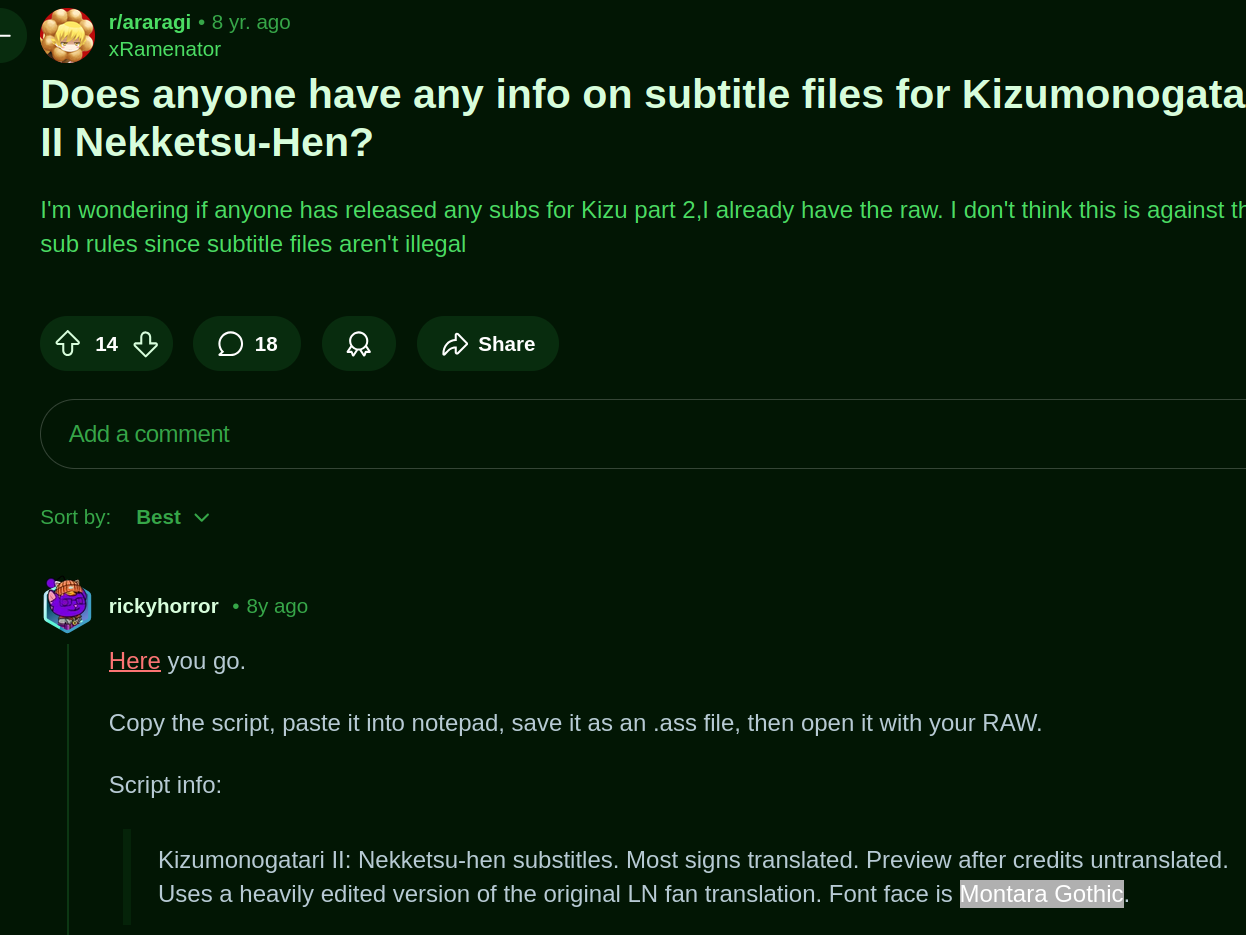

The font is some open-source alternative to Montara Gothic, which - funnily enough - I discovered because it was used for the subtitles of my favorite anime. I would like to move away from it, though I have yet to pick a new one.

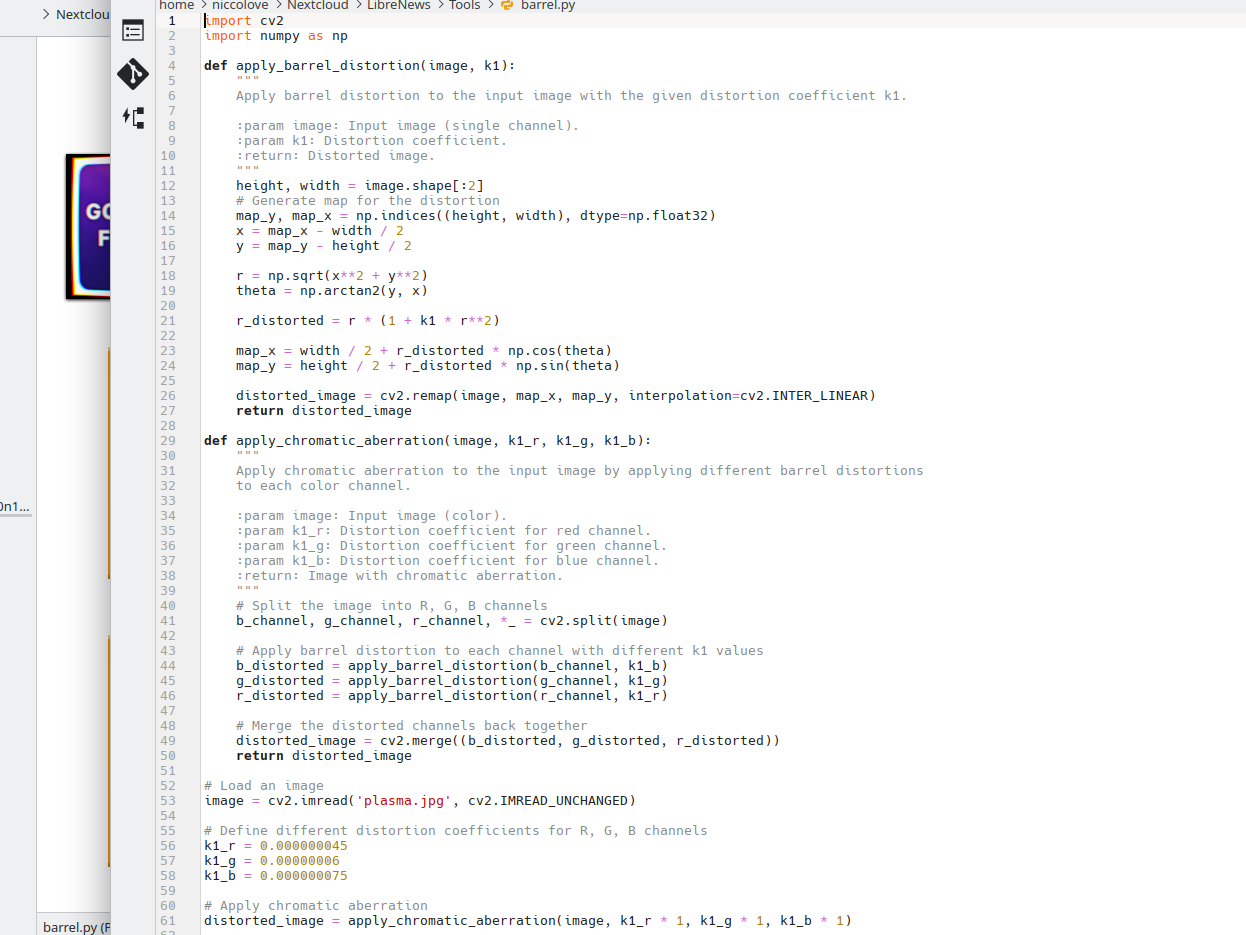

Finally, the graphics on the LibreNews website. You can see that all pictures have chromatic aberration and barrel distortion effects applied. These are provided by a Python script that uses some NumPy math. The only issue is that they only work on dark backgrounds, which makes it a bit harder to provide a light version of the website.
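For the curious, both effects can be approximated with a few lines of NumPy. The sketch below is not the website's actual script, just one simple way to get similar results: the function names, the default `shift` and `strength` values, and the nearest-neighbour sampling are all assumptions, and the image is assumed to be an RGB array larger than one pixel in each direction.

```python
import numpy as np


def chromatic_aberration(image: np.ndarray, shift: int = 2) -> np.ndarray:
    """Shift the red and blue channels in opposite horizontal directions.

    np.roll wraps around, so a few columns reappear on the other edge.
    """
    result = image.copy()
    result[..., 0] = np.roll(image[..., 0], shift, axis=1)   # red moves right
    result[..., 2] = np.roll(image[..., 2], -shift, axis=1)  # blue moves left
    return result


def barrel_distortion(image: np.ndarray, strength: float = 0.2) -> np.ndarray:
    """Remap pixels radially around the image centre (nearest neighbour)."""
    height, width = image.shape[:2]
    half_w = (width - 1) / 2
    half_h = (height - 1) / 2
    ys, xs = np.indices((height, width), dtype=np.float64)
    # Normalise coordinates to [-1, 1] with the origin at the centre.
    x = (xs - half_w) / half_w
    y = (ys - half_h) / half_h
    # Each output pixel samples the source further from the centre,
    # which squeezes the edges of the picture inwards.
    factor = 1 + strength * (x**2 + y**2)
    src_x = np.rint(x * factor * half_w + half_w).astype(int)
    src_y = np.rint(y * factor * half_h + half_h).astype(int)
    inside = (src_x >= 0) & (src_x < width) & (src_y >= 0) & (src_y < height)
    result = np.zeros_like(image)  # pixels sampled from outside stay black
    result[inside] = image[src_y[inside], src_x[inside]]
    return result
```

Applying `barrel_distortion(chromatic_aberration(image))` to an array loaded from a picture gives the combined look. In this sketch, the corners that fall outside the source picture are simply filled with black.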

Now, let's talk about what's next for the channel.

Firstly, I'd like to buy the Elgato Teleprompter or some alternative. I just like the idea of having the monitor built into the teleprompter, instead of having to figure it out myself.

Secondly, I have to finish the script to provide the new transitions and graphical things. I would also like to figure out why the teleprompter dies every five minutes and fix that, so that I can put the background music in OBS and not even have to use Kdenlive at all. Or - why not? - make the script automatically upload the video to YouTube on my behalf.

Thirdly, I want to improve the recording background somewhat. I find it a bit boring, and it only works with a rather small view, which means you don't get to see much of me. Most other YouTubers use a wider view.